Apache NiFi User Guide

Introduction

Apache NiFi is a dataflow system based on the concepts of flow-based programming. It supports powerful and scalable directed graphs of data routing, transformation, and system mediation logic. NiFi has a web-based user interface for design, control, feedback, and monitoring of dataflows. It is highly configurable along several dimensions of quality of service, such as loss-tolerant versus guaranteed delivery, low latency versus high throughput, and priority-based queuing. NiFi provides fine-grained data provenance for all data received, forked, joined, cloned, modified, sent, and ultimately dropped upon reaching its configured end-state.

See the System Administrator’s Guide for information about system requirements, installation, and configuration. Once NiFi is installed, use a supported web browser to view the User Interface (UI).

Browser Support

| Browser | Version |
|---|---|
| Chrome | Current and Current - 1 |
| Firefox | Current |
| Microsoft Edge | Current |

Current and Current - 1 indicates that the UI is supported in the current stable release of that browser and the preceding one. For instance, if the current stable release is 45.X then the officially supported versions will be 45.X and 44.X.

Current indicates that the UI is supported in the current stable release of that browser.

The supported browser versions are driven by the capabilities the UI employs and the dependencies it uses. UI features will be developed and tested against the supported browsers. Any problem using a supported browser should be reported to Apache NiFi.

Unsupported Browsers

While the UI may run successfully in unsupported browsers, it is not actively tested against them. Additionally, the UI is designed as a desktop experience and is not currently supported in mobile browsers.

NOTE

Data Integration Platform does not support Internet Explorer.

Viewing the UI in Variably Sized Browsers

In most environments, all of the UI is visible in your browser. However, the UI has a responsive design that allows you to scroll through screens as needed, in smaller sized browsers or tablet environments.

In environments where your browser width is less than 800 pixels and the height less than 600 pixels, portions of the UI may become unavailable.

Terminology

DataFlow Manager: A DataFlow Manager (DFM) is a NiFi user who has permissions to add, remove, and modify components of a NiFi dataflow.

FlowFile: The FlowFile represents a single piece of data in NiFi. A FlowFile is made up of two components: FlowFile Attributes and FlowFile Content. Content is the data that is represented by the FlowFile. Attributes are characteristics that provide information or context about the data; they are made up of key-value pairs. All FlowFiles have the following Standard Attributes:

- uuid: A unique identifier for the FlowFile
- filename: A human-readable filename that may be used when storing the data to disk or in an external service
- path: A hierarchically structured value that can be used when storing data to disk or an external service so that the data is not stored in a single directory
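As a rough mental model only (this is not NiFi's Java API), a FlowFile can be pictured as a small record that pairs content with a dictionary of attributes. The class name `FlowFileSketch` and the fallback values used for `filename` and `path` are assumptions made for illustration.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class FlowFileSketch:
    """Hypothetical stand-in for a FlowFile: attributes plus content."""
    content: bytes
    attributes: dict = field(default_factory=dict)

    def __post_init__(self):
        # Fill in the three Standard Attributes if the caller did not supply them.
        # The fallback values below are illustrative assumptions.
        self.attributes.setdefault("uuid", str(uuid.uuid4()))
        self.attributes.setdefault("filename", self.attributes["uuid"])
        self.attributes.setdefault("path", "./")


flowfile = FlowFileSketch(content=b"hello", attributes={"filename": "greeting.txt"})
print(sorted(flowfile.attributes))
```

The content is opaque bytes, while the attributes carry the context that routing and storage decisions would typically rely on.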

Processor: The Processor is the NiFi component that is used to listen for incoming data; pull data from external sources; publish data to external sources; and route, transform, or extract information from FlowFiles.

Relationship: Each Processor has zero or more Relationships defined for it. These Relationships are named to indicate the result of processing a FlowFile. After a Processor has finished processing a FlowFile, it will route (or “transfer”) the FlowFile to one of the Relationships. A DFM is then able to connect each of these Relationships to other components in order to specify where the FlowFile should go next under each potential processing result.

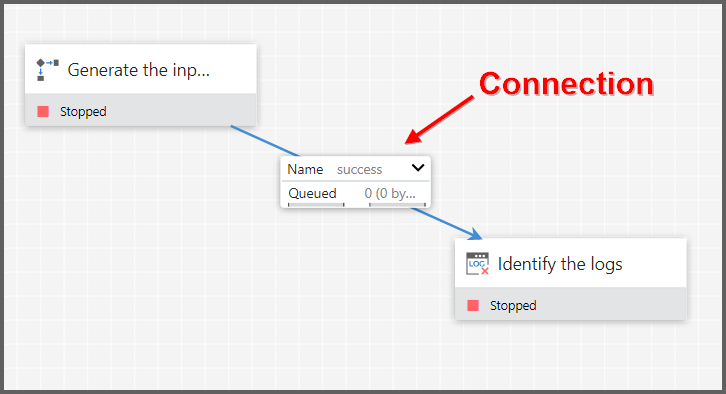

Connection: A DFM creates an automated dataflow by dragging components from the Components part of the NiFi toolbar to the canvas and then connecting the components together via Connections. Each connection consists of one or more Relationships. For each Connection that is drawn, a DFM can determine which Relationships should be used for the Connection. This allows data to be routed in different ways based on its processing outcome. Each connection houses a FlowFile Queue. When a FlowFile is transferred to a particular Relationship, it is added to the queue belonging to the associated Connection.
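The link between Relationships, Connections, and queues can be pictured with a small sketch. The names `ConnectionSketch` and `transfer` are hypothetical, and appending the same object to every matching queue is a simplification; the sketch only illustrates that a FlowFile transferred to a Relationship lands in the queue of the Connection that includes that Relationship.

```python
from collections import deque


class ConnectionSketch:
    """Hypothetical Connection: a set of Relationship names plus a FlowFile queue."""

    def __init__(self, relationships):
        self.relationships = set(relationships)
        self.queue = deque()


def transfer(flowfile, relationship, connections):
    """Queue the FlowFile on every Connection that includes the Relationship."""
    targets = [c for c in connections if relationship in c.relationships]
    for connection in targets:
        connection.queue.append(flowfile)
    return len(targets)


success_path = ConnectionSketch({"success"})
error_path = ConnectionSketch({"failure", "retry"})
transfer("flowfile-1", "success", [success_path, error_path])
transfer("flowfile-2", "failure", [success_path, error_path])
```

Here one Connection carries a single Relationship and the other carries two, which mirrors how a DFM can route data differently based on its processing outcome.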

Controller Service: Controller Services are extension points that, after being added and configured by a DFM in the User Interface, will start up when NiFi starts up and provide information for use by other components (such as processors or other controller services). A common Controller Service used by several components is the StandardSSLContextService. It provides the ability to configure keystore and/or truststore properties once and reuse that configuration throughout the application. The idea is that, rather than configure this information in every processor that might need it, the controller service provides it for any processor to use as needed.

Reporting Task: Reporting Tasks run in the background to provide statistical reports about what is happening in the NiFi instance. The DFM adds and configures Reporting Tasks in the User Interface as desired. Common reporting tasks include the ControllerStatusReportingTask, MonitorDiskUsage reporting task, MonitorMemory reporting task, and the StandardGangliaReporter.

Funnel: A funnel is a NiFi component that is used to combine the data from several Connections into a single Connection.

Process Group: When a dataflow becomes complex, it often is beneficial to reason about the dataflow at a higher, more abstract level. NiFi allows multiple components, such as Processors, to be grouped together into a Process Group. The NiFi User Interface then makes it easy for a DFM to connect together multiple Process Groups into a logical dataflow, as well as allowing the DFM to enter a Process Group in order to see and manipulate the components within the Process Group.

Port: Dataflows that are constructed using one or more Process Groups need a way to connect a Process Group to other dataflow components. This is achieved by using Ports. A DFM can add any number of Input Ports and Output Ports to a Process Group and name these ports appropriately.

Remote Process Group: Just as data is transferred into and out of a Process Group, it is sometimes necessary to transfer data from one instance of NiFi to another. While NiFi provides many different mechanisms for transferring data from one system to another, Remote Process Groups are often the easiest way to accomplish this if transferring data to another instance of NiFi.

Bulletin: The NiFi User Interface provides a significant amount of monitoring and feedback about the current status of the application. In addition to rolling statistics and the current status provided for each component, components are able to report Bulletins. Whenever a component reports a Bulletin, a bulletin icon is displayed on that component. System-level bulletins are displayed on the Status bar near the top of the page. Using the mouse to hover over that icon will provide a tool-tip that shows the time and severity (Debug, Info, Warning, Error) of the Bulletin, as well as the message of the Bulletin. Bulletins from all components can also be viewed and filtered in the Bulletin Board Page, available in the header.

Template: Often times, a dataflow is comprised of many sub-flows that could be reused. NiFi allows DFMs to select a part of the dataflow (or the entire dataflow) and create a Template. This Template is given a name and can then be dragged onto the canvas just like the other components. As a result, several components may be combined together to make a larger building block from which to create a dataflow. These templates can also be exported as XML and imported into another NiFi instance, allowing these building blocks to be shared.

flow.xml.gz: Everything the DFM puts onto the NiFi User Interface canvas is written, in real time, to one file called the flow.xml.gz. This file is located in the nifi/conf directory by default. Any change made on the canvas is automatically saved to this file, without the user needing to click a “save” button. In addition, NiFi automatically creates a backup copy of this file in the archive directory when it is updated. You can use these archived files to roll back the flow configuration. To do so, stop NiFi, replace flow.xml.gz with the desired backup copy, then restart NiFi. In a clustered environment, stop the entire NiFi cluster, replace the flow.xml.gz on one of the nodes, and restart that node. Remove flow.xml.gz from the other nodes. Once you have confirmed that the node starts up as a one-node cluster, start the other nodes. The replaced flow configuration will be synchronized across the cluster. The name and location of flow.xml.gz, and the auto-archive behavior, are configurable. See the System Administrator’s Guide for further details.
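The single-node rollback step could be scripted along the following lines. This is a minimal sketch, not a supported tool: the function name `restore_flow`, the `*.xml.gz` pattern used to find archived copies, and the choice of the most recently modified archive are all assumptions, and the script must only be run while NiFi is stopped.

```python
import shutil
from pathlib import Path


def restore_flow(conf_dir, archive_dir, flow_name="flow.xml.gz"):
    """Copy the most recently modified archived flow over the active flow file.

    Run this only while NiFi is stopped. Returns the archive that was restored.
    """
    # Assumption: archived copies end in ".xml.gz"; adjust the pattern to your setup.
    archives = sorted(Path(archive_dir).glob("*.xml.gz"), key=lambda p: p.stat().st_mtime)
    if not archives:
        raise FileNotFoundError(f"no archived flows found in {archive_dir}")
    newest = archives[-1]
    shutil.copy2(newest, Path(conf_dir) / flow_name)
    return newest


# Example call (placeholder paths, shown as a comment so nothing runs by accident):
# restore_flow("nifi/conf", "nifi/conf/archive")
```

To restore a specific older copy rather than the newest one, copy that file by hand instead; the script only automates the simplest case.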

NiFi User Interface

The NiFi User Interface (UI) provides mechanisms for creating automated dataflows, as well as visualizing, editing, monitoring, and administering those dataflows. The UI can be broken down into several segments, each responsible for different functionality of the application. This section provides screenshots of the application and highlights the different segments of the UI. Each segment is discussed in further detail later in the document.

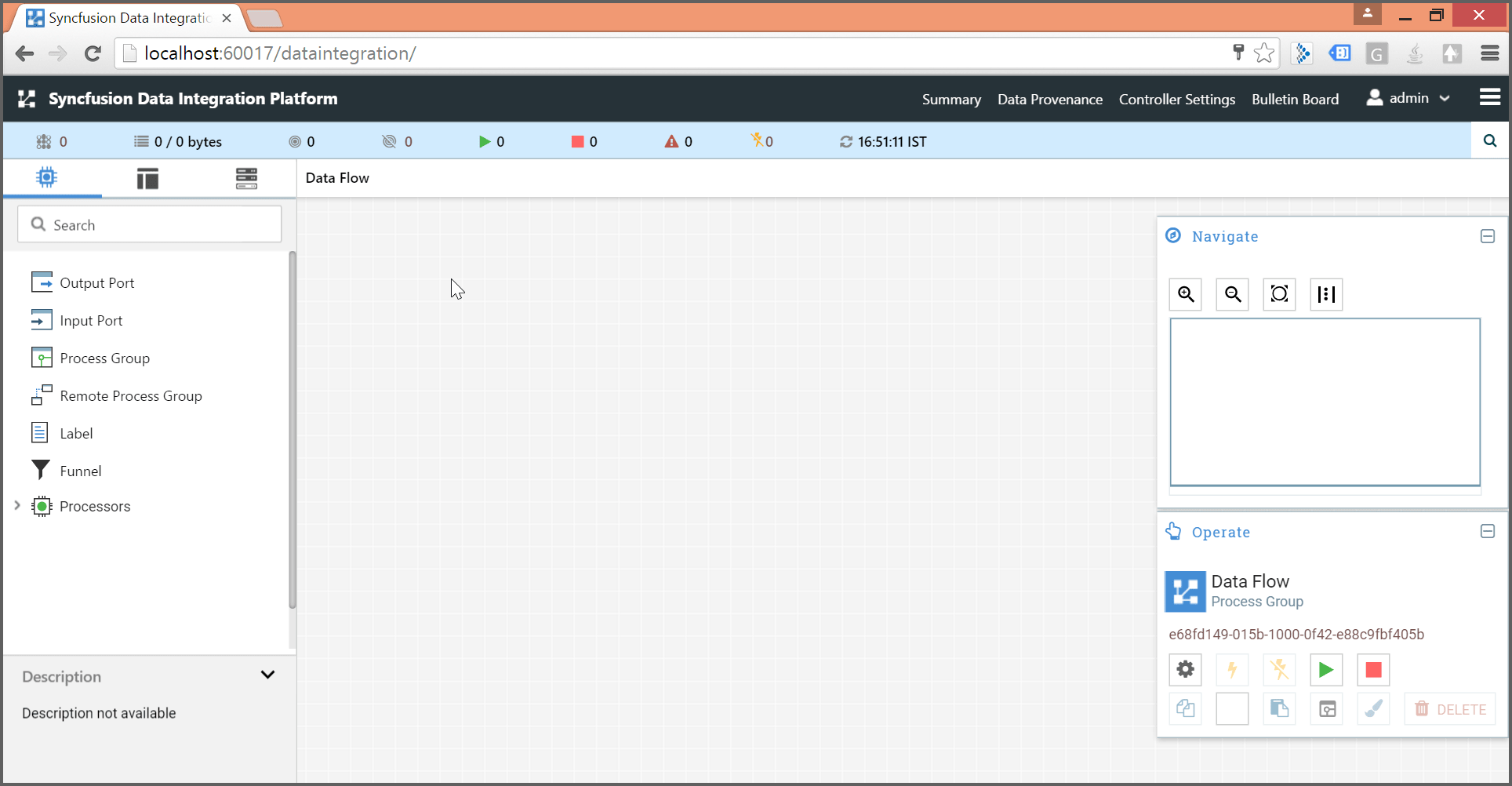

When the application is started, the user can navigate to the User Interface in a web browser. If Data Integration Platform was installed without UMS, the default address is http://<hostname>:60017/dataintegration. If it was installed with UMS, the default address is https://<hostname>:60017/dataintegration. There are no permissions configured by default, so anyone is able to view and modify the dataflow. For information on securing the system, see the System Administrator’s Guide.

When a DFM navigates to the UI for the first time, a blank canvas is provided on which a dataflow can be built:

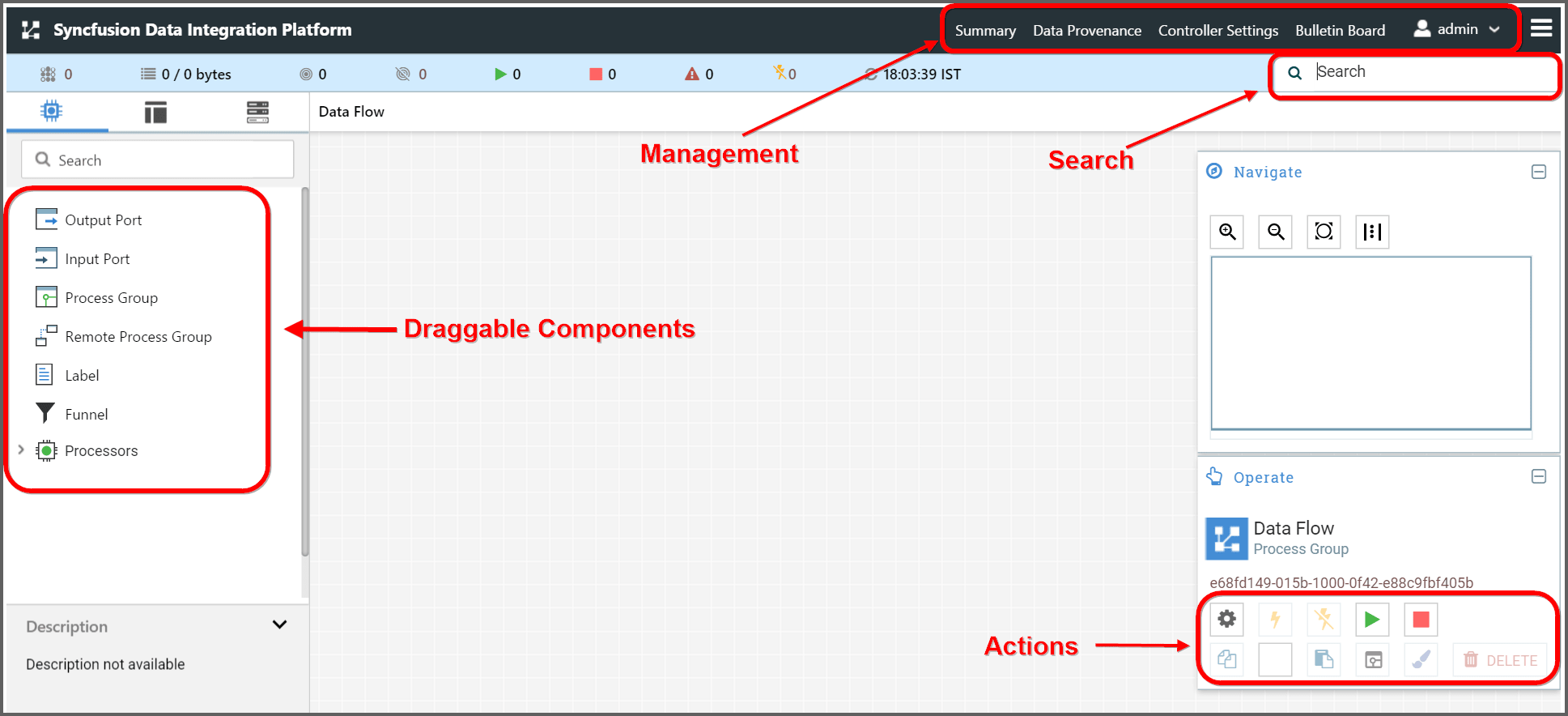

Toolbar:

The Components Toolbar runs along the left portion of your screen, under the Processor tab.

It consists of the components you can drag onto the canvas to build your dataflow. Each component is described in more detail in Building a Dataflow.

Status Bar:

The Status Bar runs across the top portion of your screen. The Status bar provides information about how many Processors exist on the canvas in each state (Stopped, Running, Invalid, Disabled), how many Remote Process Groups exist on the canvas in each state (Transmitting, Not Transmitting), the number of threads that are currently active in the flow, the amount of data that currently exists in the flow, and the timestamp at which all of this information was last refreshed.

Additionally, if the instance of NiFi is clustered, the Status bar shows how many nodes are in the cluster and how many are currently connected.

Operate Palette:

The Operate Palette sits to the right-hand side of the screen.

It consists of buttons that are used by DFMs to manage the flow, as well as by administrators who manage user access and configure system properties, such as how many system resources should be provided to the application.

Search Box:

On the right side of the canvas is Search, and the Global Menu.

You can use Search to easily find components on the canvas. You can search by component name, type, identifier, configuration properties, and their values.

Global Menu:

The Global Menu contains options that allow you to manipulate existing components on the canvas:

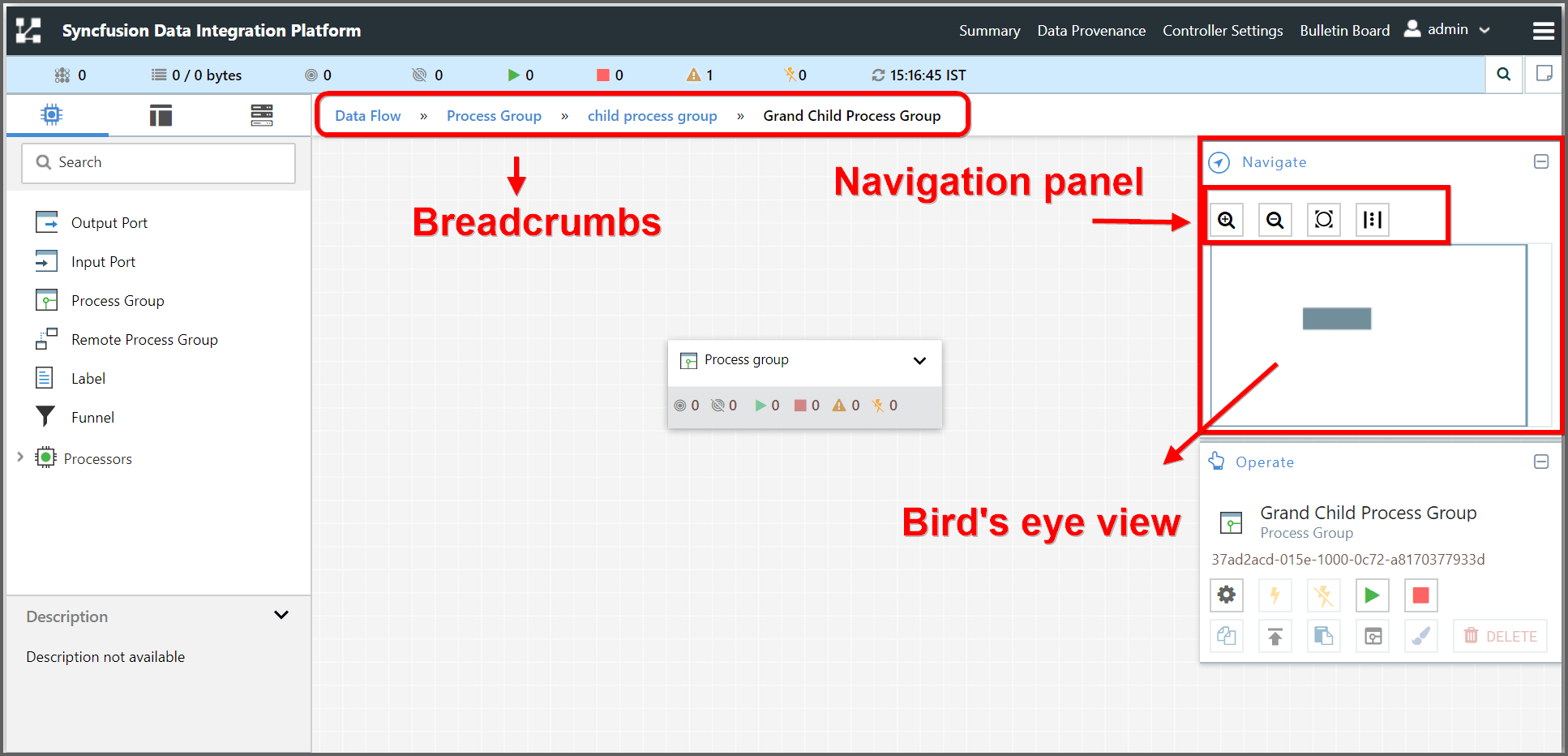

Additionally, the UI has some features that allow you to easily navigate around the canvas.

Navigate Panel:

You can use the Navigate Palette to pan around the canvas, and to zoom in and out. The “Birds Eye View” of the dataflow provides a high-level view of the dataflow and allows you to pan across large portions of the dataflow. You can also find breadcrumbs along the top of the screen.

Breadcrumbs:

As you navigate into and out of Process Groups, the breadcrumbs show the depth in the flow, and each Process Group that you entered to reach this depth. Each of the Process Groups listed in the breadcrumbs is a link that will take you back up to that level in the flow.

Accessing the UI with Multi-Tenant Authorization

Multi-tenant authorization enables multiple groups of users (tenants) to command, control, and observe different parts of the dataflow, with varying levels of authorization. When an authenticated user attempts to view or modify a NiFi resource, the system checks whether the user has privileges to perform that action. These privileges are defined by policies that you can apply system wide or to individual components. What this means from a Dataflow Manager perspective is that once you have access to the NiFi canvas, a range of functionality is visible and available to you, depending on the privileges assigned to you.

The available global access policies are:

| Policy | Privilege |
|---|---|
| view the UI | Allows users to view the UI |
| access the controller | Allows users to view and modify the controller including reporting tasks, Controller Services, and nodes in the cluster |
| query provenance | Allows users to submit a provenance search and request event lineage |
| access restricted components | Allows users to create/modify restricted components assuming otherwise sufficient permissions |
| access all policies | Allows users to view and modify the policies for all components |
| access users/groups | Allows users to view and modify the users and user groups |
| retrieve site-to-site details | Allows other NiFi instances to retrieve Site-To-Site details |
| view system diagnostics | Allows users to view System Diagnostics |
| proxy user requests | Allows proxy machines to send requests on the behalf of others |
| access counters | Allows users to view and modify counters |

The available component-level access policies are:

| Policy | Privilege |
|---|---|
| view the component | Allows users to view component configuration details |
| modify the component | Allows users to modify component configuration details |
| view the data | Allows users to view metadata and content for this component through provenance data and flowfile queues in outbound connection |
| modify the data | Allows users to empty flowfile queues in outbound connections and to submit replays |
| view the policies | Allows users to view the list of users who can view and modify a component |
| modify the policies | Allows users to modify the list of users who can view and modify a component |
| retrieve data via site-to-site | Allows a port to receive data from NiFi instances |
| send data via site-to-site | Allows a port to send data from NiFi instances |

If you are unable to view or modify a NiFi resource, contact your System Administrator or see Configuring Users and Access Policies in the System Administrator’s Guide for more information.
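The idea that every view or modify attempt is checked against policies can be illustrated with a toy lookup. This is a conceptual sketch only and does not reflect how NiFi stores or evaluates policies; the resource names, user names, and the `is_authorized` function are hypothetical.

```python
# Hypothetical policy store: (resource, action) -> set of user identities.
POLICIES = {
    ("/flow", "view"): {"alice", "bob"},
    ("/controller", "modify"): {"alice"},
}


def is_authorized(user, resource, action, policies=POLICIES):
    """Return True if a policy grants the user the action on the resource."""
    # A missing policy means nobody has been granted the privilege.
    return user in policies.get((resource, action), set())


print(is_authorized("alice", "/controller", "modify"))
print(is_authorized("bob", "/controller", "modify"))
```

In this model, a user who can view the canvas but has no matching entry for a given action simply does not get that functionality, which matches the behavior described above from the DFM's point of view.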

Logging In

If NiFi is configured to run securely, users will be able to request access to the DataFlow. For information on configuring NiFi to run securely, see the System Administrator’s Guide. If NiFi supports anonymous access, users will be given access accordingly and given an option to log in.

Clicking the login link will open the log in page. If the user is logging in with their username/password they will be presented with a form to do so. If NiFi is not configured to support anonymous access and the user is logging in with their username/password, they will be immediately sent to the login form bypassing the canvas.

Building a DataFlow

A DFM is able to build an automated dataflow using the NiFi User Interface (UI). Simply drag components from the toolbar to the canvas, configure the components to meet specific needs, and connect the components together.

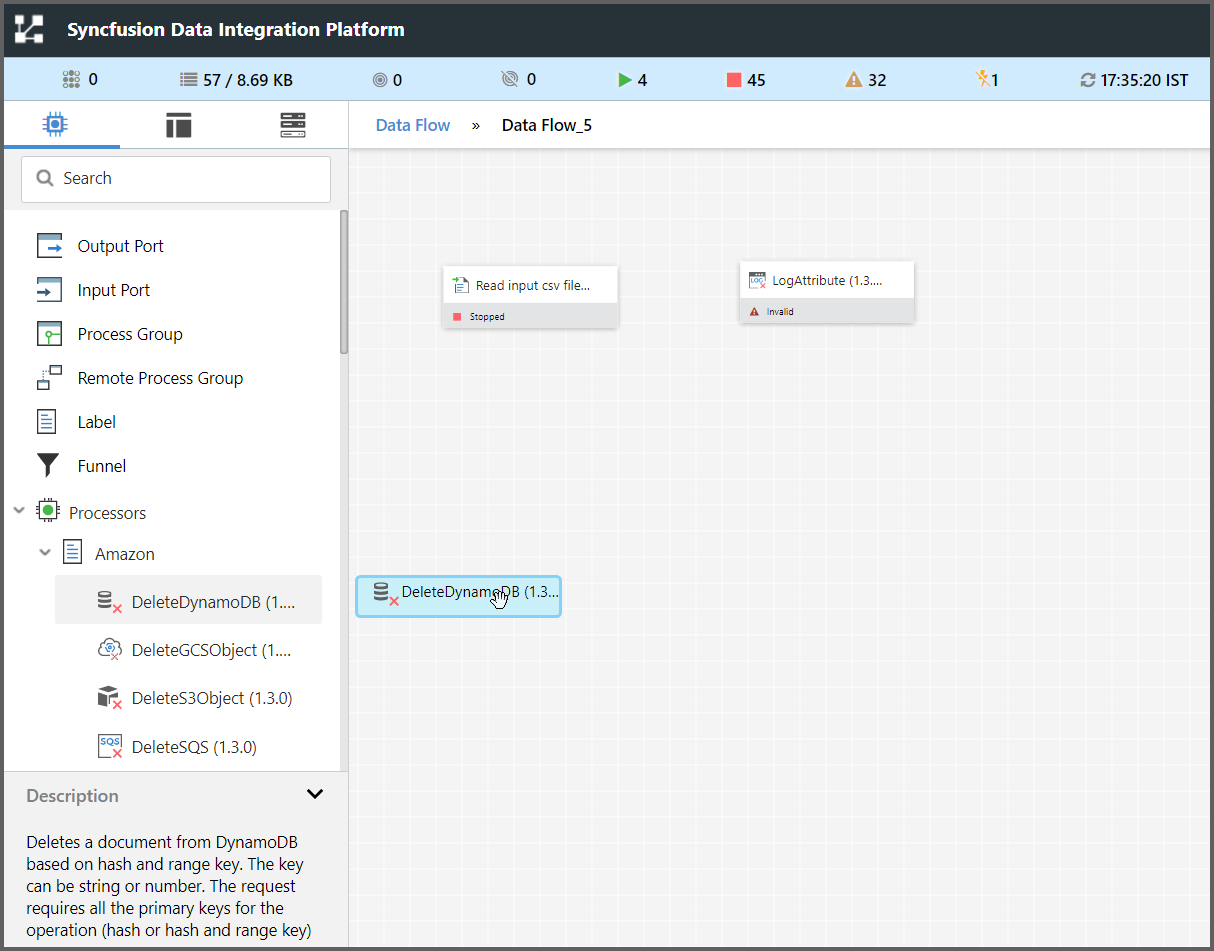

Adding Components to the Canvas

The User Interface section above outlined the different segments of the UI and pointed out a Components tab. This section looks at each of the Components in that tab. You can select the component and drag and drop the component into the canvas area.

Processor:

The Processor is the most commonly used component, as it is responsible for data ingress, egress, routing, and manipulating. There are many different types of Processors.

In fact, this is a very common Extension Point in NiFi, meaning that many vendors may implement their own Processors to perform whatever functions are necessary for their use case.

In the left corner of the Components tab, the user is able to filter the list based on the Processor Type. Processors are listed according to their tags.

Restricted components will be marked with a Restricted icon next to their name. These are components that can be used to execute arbitrary unsanitized code provided by the operator through the NiFi REST API/UI or can be used to obtain or alter data on the NiFi host system using the NiFi OS credentials. These components could be used by an otherwise authorized NiFi user to go beyond the intended use of the application, escalate privilege, or could expose data about the internals of the NiFi process or the host system. All of these capabilities should be considered privileged, and admins should be aware of these capabilities and explicitly enable them for a subset of trusted users.

Before a user is allowed to create and modify restricted components they must be granted access to restricted components. Refer to multi-tenant documentation.

Clicking the Add button or double-clicking on a Processor Type will add the selected Processor to the canvas at the location that it was dropped.

IMPORTANT

For any component added to the canvas, it is possible to select it with the mouse and move it anywhere on the canvas. Also, it is possible to select multiple items at once by either holding down the Shift key and selecting each item or by holding down the Shift key and dragging a selection box around the desired components.

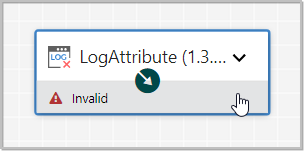

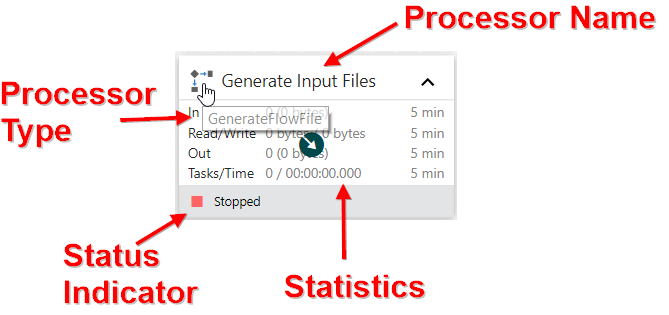

Once you have dragged a Processor onto the canvas, the Processor is in collapsed view.

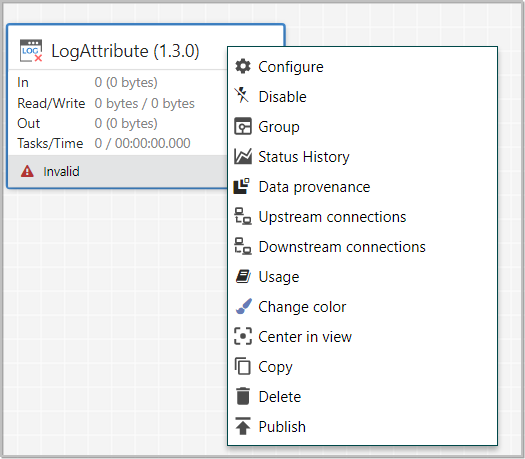

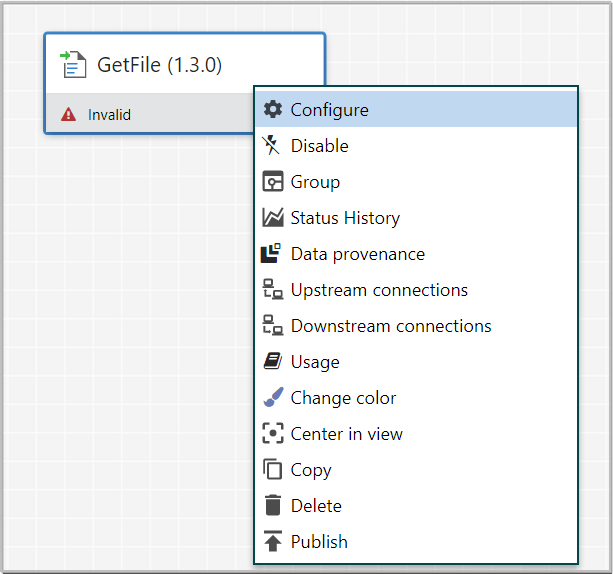

You can expand the processor by clicking on the down arrow. You can configure the processor by double-clicking on it, or you can interact with it by right-clicking on the Processor and selecting an option from the context menu.

The following options are available:

-

Configure: This option allows the user to establish or change the configuration of the Processor. (See Configuring a Processor.)

-

Start or Stop: This option allows the user to start or stop a Processor; the option will be either Start or Stop, depending on the current state of the Processor.

-

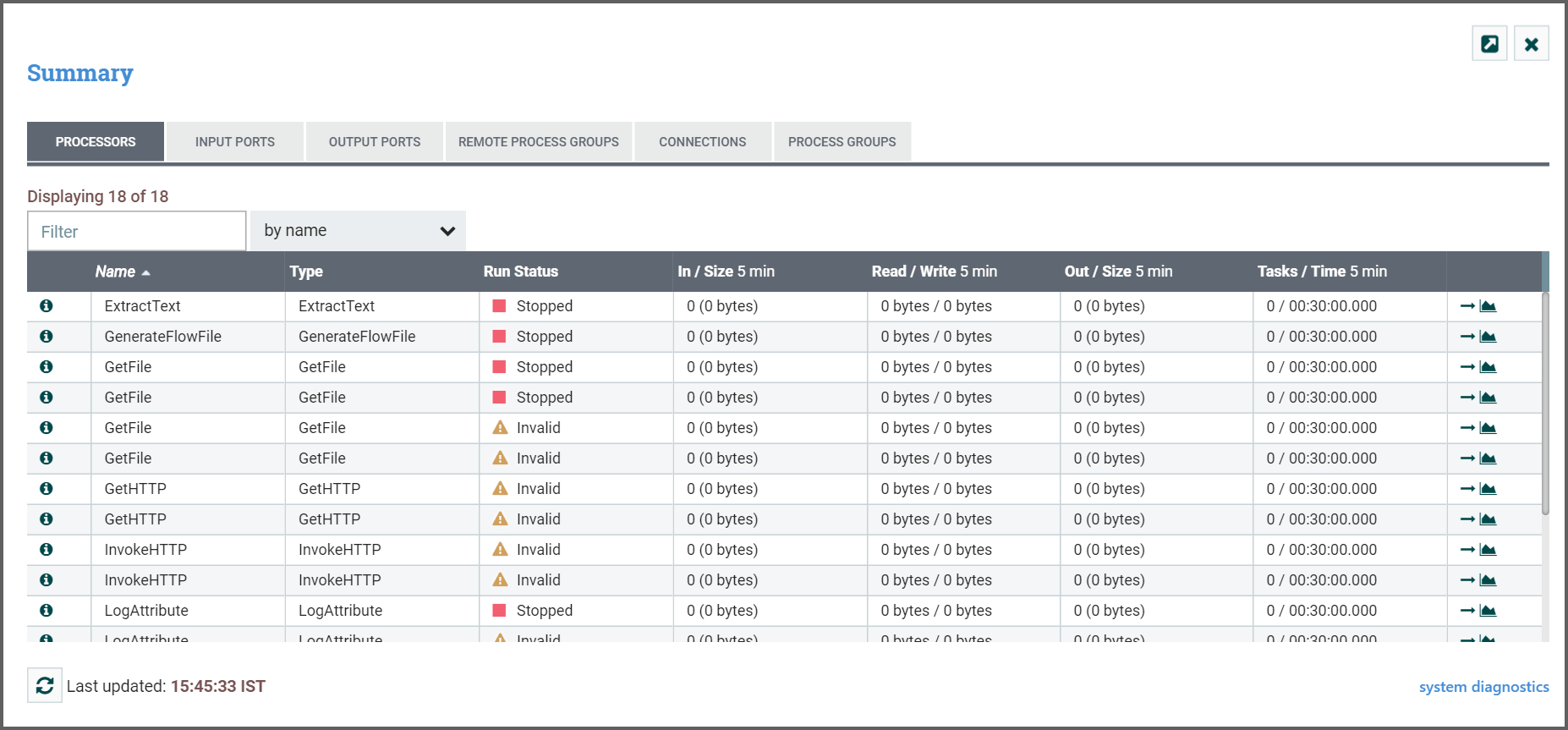

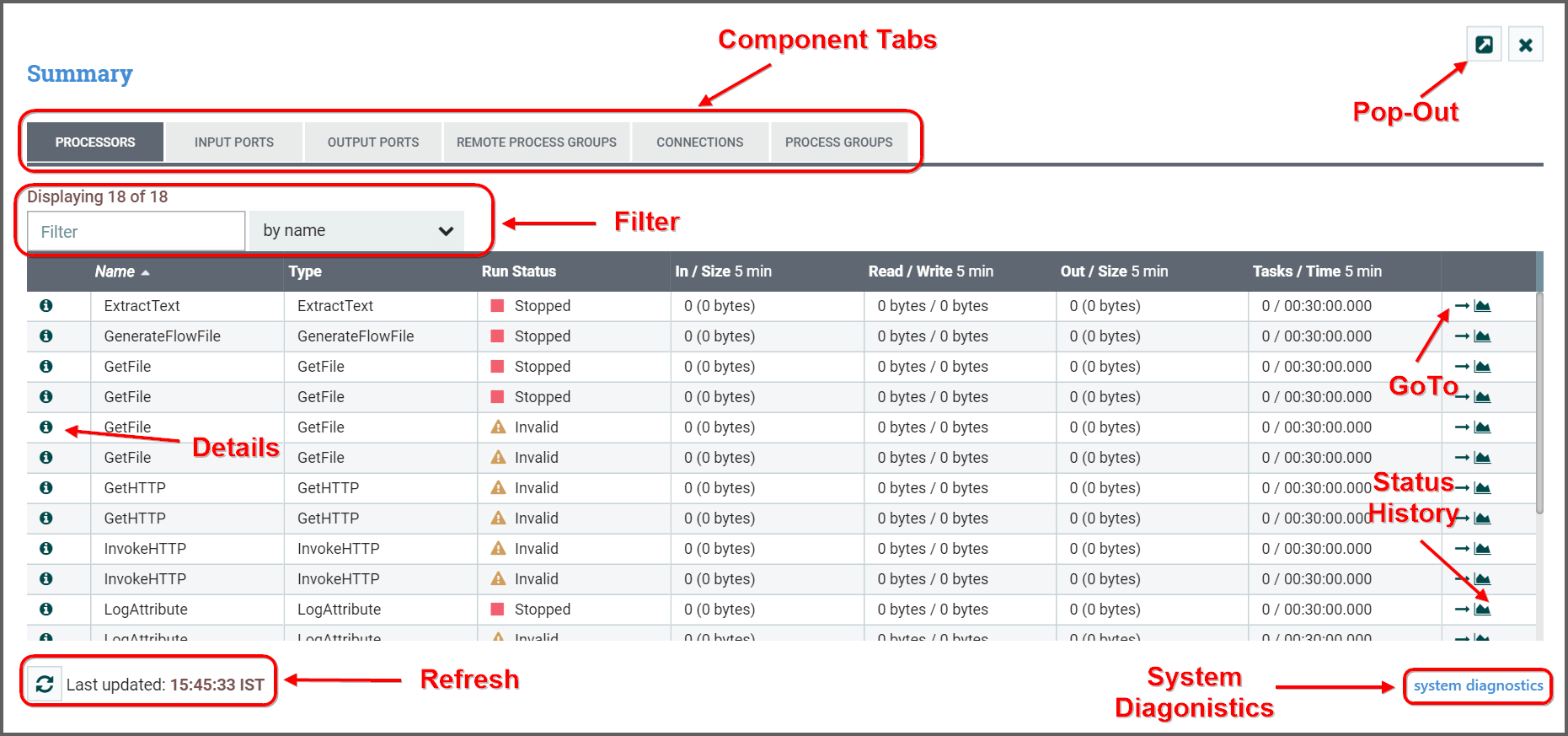

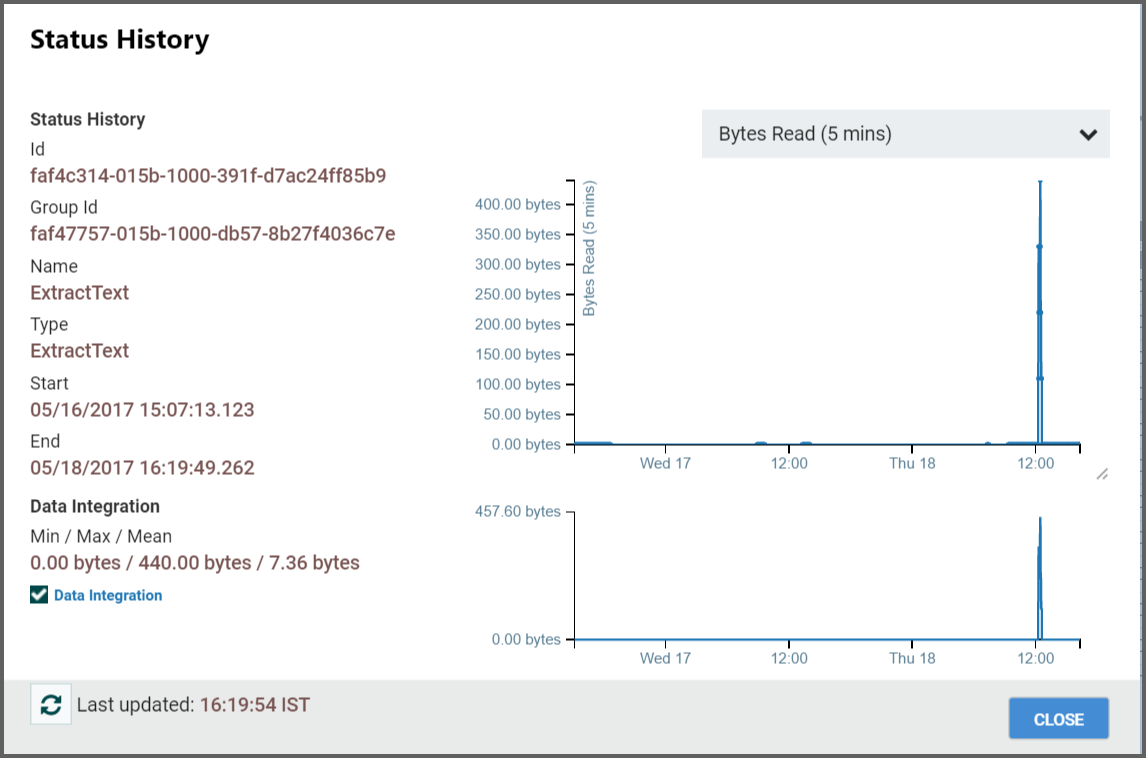

Status History: This option opens a graphical representation of the Processor’s statistical information over time.

-

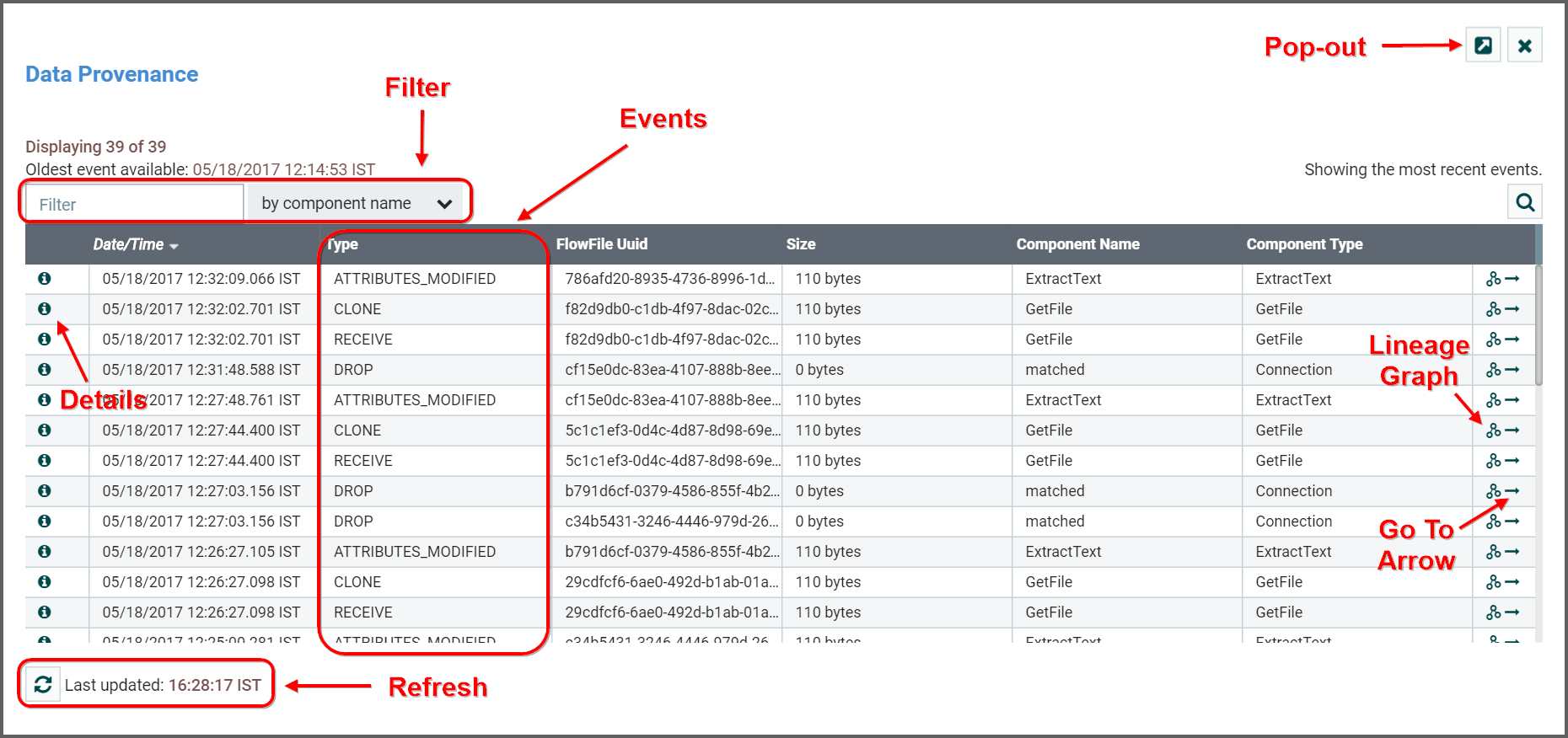

Data provenance: This option displays the NiFi Data Provenance table, with information about data provenance events for the FlowFiles routed through that Processor.

-

Upstream connections: This option allows the user to see and “jump to” upstream connections that are coming into the Processor. This is particularly useful when processors connect into and out of other Process Groups.

-

Downstream connections: This option allows the user to see and “jump to” downstream connections that are going out of the Processor. This is particularly useful when processors connect into and out of other Process Groups.

-

Usage: This option takes the user to the Processor’s usage documentation.

-

Change color: This option allows the user to change the color of the Processor, which can make the visual management of large flows easier.

-

Center in view: This option centers the view of the canvas on the given Processor.

-

Copy: This option places a copy of the selected Processor on the clipboard, so that it may be pasted elsewhere on the canvas by right-clicking on the canvas and selecting Paste. The Copy/Paste actions also may be done using the keystrokes

Ctrl-C(Command-C) andCtrl-V(Command-V). -

Publish: This option allows the DFM to publish a Processor.

-

Delete: This option allows the DFM to delete a Processor from the canvas.

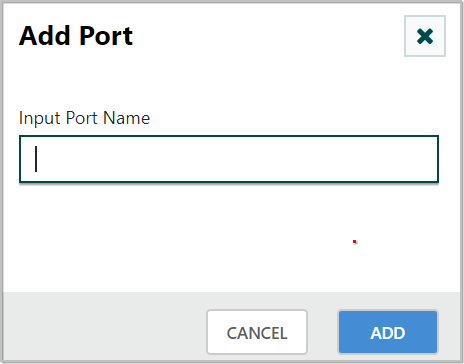

Input Port:

Ports provide a mechanism for transferring data into a Process Group. When an Input Port is dragged onto the canvas, the DFM is prompted to name the Port.

All Ports within a Process Group must have unique names. When a user initially navigates to the NiFi page, the user is placed in the Root Process Group.

If the Input Port is dragged onto the Root Process Group, the Input Port provides a mechanism to receive data from remote instances of NiFi via Site-to-Site.

In this case, the Input Port can be configured to restrict access to appropriate users, if NiFi is configured to run securely. For information on configuring NiFi to run securely, see the System Administrator’s Guide.

Output Port:

Output Ports provide a mechanism for transferring data from a Process Group to destinations outside of the Process Group. When an Output Port is dragged onto the canvas, the DFM is prompted to name the Port. All Ports within a Process Group must have unique names.

If the Output Port is dragged onto the Root Process Group, the Output Port provides a mechanism for sending data to remote instances of NiFi via Site-to-Site.

In this case, the Port acts as a queue. As remote instances of NiFi pull data from the port, that data is removed from the queues of the incoming Connections.

If NiFi is configured to run securely, the Output Port can be configured to restrict access to appropriate users. For information on configuring NiFi to run securely, see the System Administrator’s Guide.

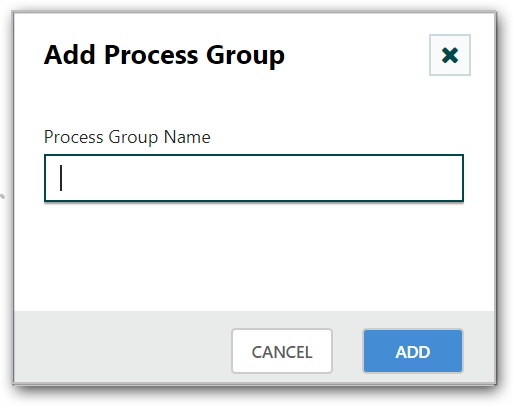

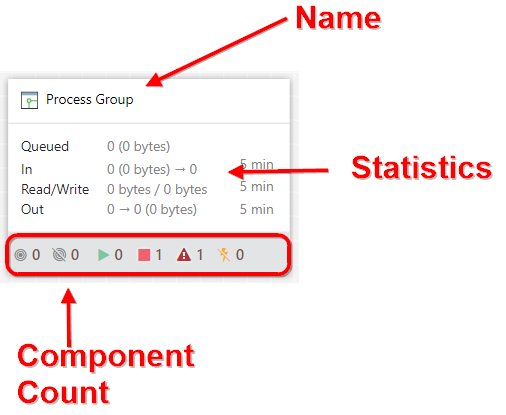

Process Group:

Process Groups can be used to logically group a set of components so that the dataflow is easier to understand and maintain. When a Process Group is dragged onto the canvas, the DFM is prompted to name the Process Group. All Process Groups within the same parent group must have unique names.

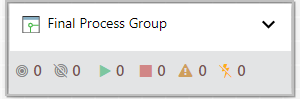

The Process Group will then be nested within that parent group. Once you have dragged a Process Group onto the canvas, the Process Group is in collapsed view.

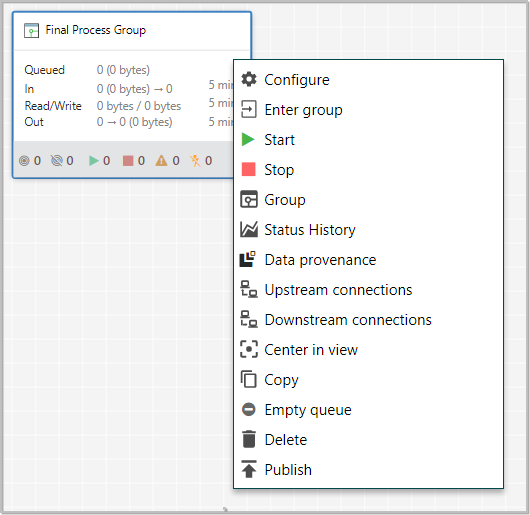

You can expand the Process Group by clicking on the down arrow. You can interact with it by right-clicking on the Process Group and selecting an option from the context menu.

The following options are available:

- Configure: This option allows the user to establish or change the configuration of the Process Group.
- Enter group: This option allows the user to enter the Process Group. It is also possible to double-click on the Process Group to enter it.
- Start: This option allows the user to start a Process Group.
- Stop: This option allows the user to stop a Process Group.
- Status History: This option opens a graphical representation of the Process Group’s statistical information over time.
- Upstream connections: This option allows the user to see and “jump to” upstream connections that are coming into the Process Group.
- Downstream connections: This option allows the user to see and “jump to” downstream connections that are going out of the Process Group.
- Center in view: This option centers the view of the canvas on the given Process Group.
- Copy: This option places a copy of the selected Process Group on the clipboard, so that it may be pasted elsewhere on the canvas by right-clicking on the canvas and selecting Paste. The Copy/Paste actions also may be done using the keystrokes Ctrl-C (Command-C) and Ctrl-V (Command-V).
- Delete: This option allows the DFM to delete a Process Group.
- Empty Queue: This option allows the DFM to clear the queue of FlowFiles that may be waiting to be processed. This option can be especially useful during testing, when the DFM is not concerned about deleting data from the queue. When this option is selected, users must confirm that they want to delete the data in the queue.

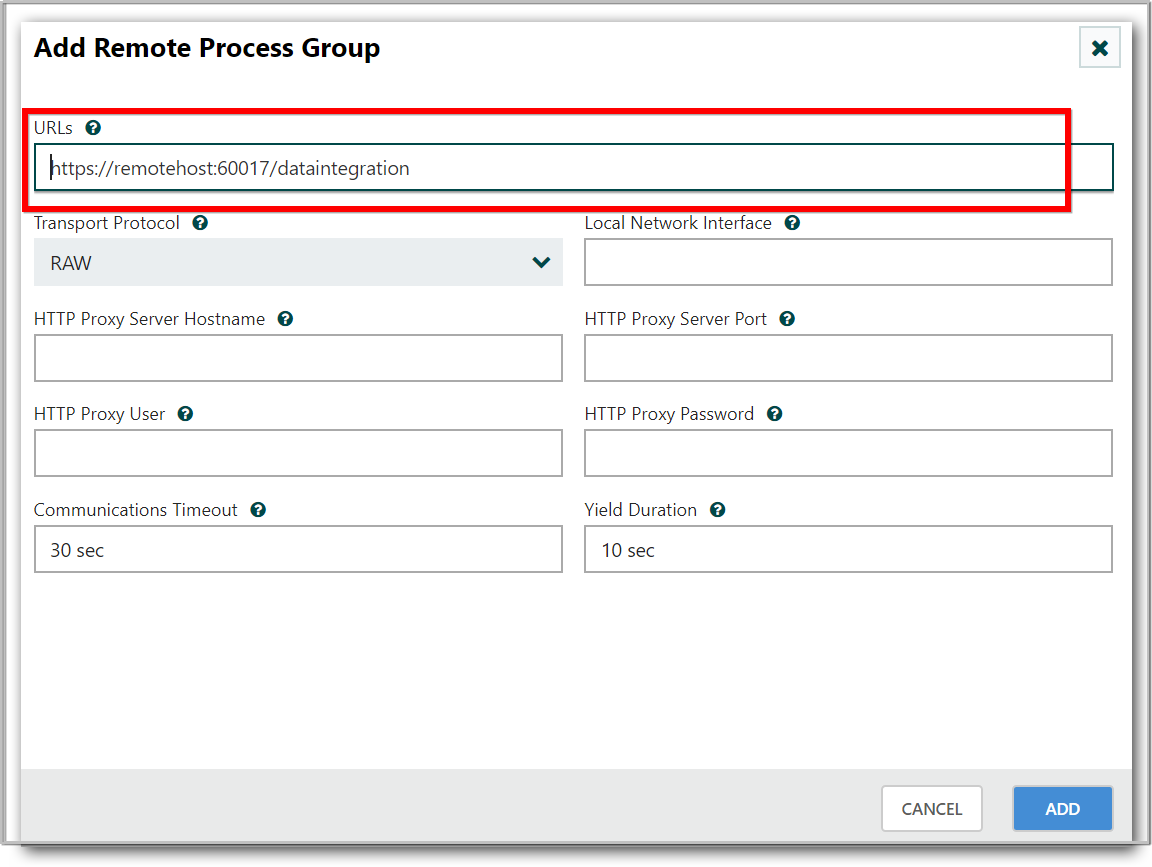

Remote Process Group:

Remote Process Groups appear and behave similar to Process Groups. However, the Remote Process Group (RPG) references a remote instance of NiFi. When an RPG is dragged onto the canvas, rather than being prompted for a name, the DFM is prompted for the URL of the remote NiFi instance.

If the remote NiFi is a clustered instance, the URL that should be used is the URL of the remote instance’s NiFi Cluster Manager (NCM). When data is transferred to a clustered instance of NiFi via an RPG, the RPG will first connect to the remote instance’s NCM to determine which nodes are in the cluster and how busy each node is.

This information is then used to load balance the data that is pushed to each node. The remote NCM is then interrogated periodically to determine information about any nodes that are dropped from or added to the cluster and to recalculate the load balancing based on each node’s load. For more information, see the section on Site-to-Site.

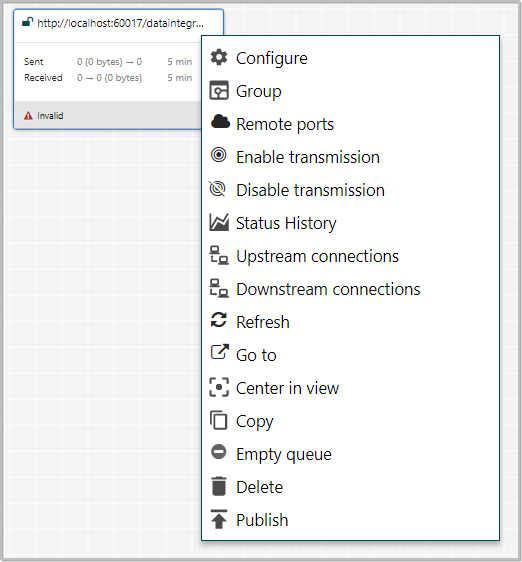

Once a Remote Process Group has been dragged onto the canvas, the user may interact with it by right-clicking on the Remote Process Group and selecting an option from context menu. The options available to you from the context menu vary, depending on the privileges assigned to you.

The following options are available:

- Configure: This option allows the user to establish or change the configuration of the Remote Process Group.
- Remote Ports: This option allows the user to see input ports and/or output ports that exist on the remote instance of NiFi that the Remote Process Group is connected to. Note that if the Site-to-Site configuration is secure, only the ports that the connecting NiFi has been given access to will be visible.
- Enable transmission: Makes the transmission of data between NiFi instances active. (See Remote Process Group Transmission.)
- Disable transmission: Disables the transmission of data between NiFi instances.
- Status History: This option opens a graphical representation of the Remote Process Group’s statistical information over time.
- Upstream connections: This option allows the user to see and “jump to” upstream connections that are coming into the Remote Process Group.
- Downstream connections: This option allows the user to see and “jump to” downstream connections that are going out of the Remote Process Group.
- Refresh: This option refreshes the view of the status of the remote NiFi instance.
- Go to: This option opens a view of the remote NiFi instance in a new tab of the browser. Note that if the Site-to-Site configuration is secure, the user must have access to the remote NiFi instance in order to view it.
- Center in view: This option centers the view of the canvas on the given Remote Process Group.
- Copy: This option places a copy of the selected Process Group on the clipboard, so that it may be pasted elsewhere on the canvas by right-clicking on the canvas and selecting Paste. The Copy/Paste actions also may be done using the keystrokes Ctrl-C (Command-C) and Ctrl-V (Command-V).
- Delete: This option allows the DFM to delete a Remote Process Group from the canvas.

Funnel:

Funnels are used to combine the data from many Connections into a single Connection. This has two advantages.

First, if many Connections are created with the same destination, the canvas can become cluttered if those Connections have to span a large space. By funneling these Connections into a single Connection, that single Connection can then be drawn to span that large space instead.

Secondly, Connections can be configured with FlowFile Prioritizers. Data from several Connections can be funneled into a single Connection, providing the ability to Prioritize all of the data on that one Connection, rather than prioritizing the data on each Connection independently.

Label:

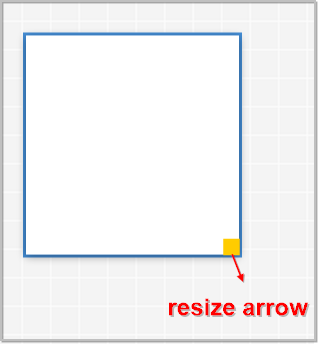

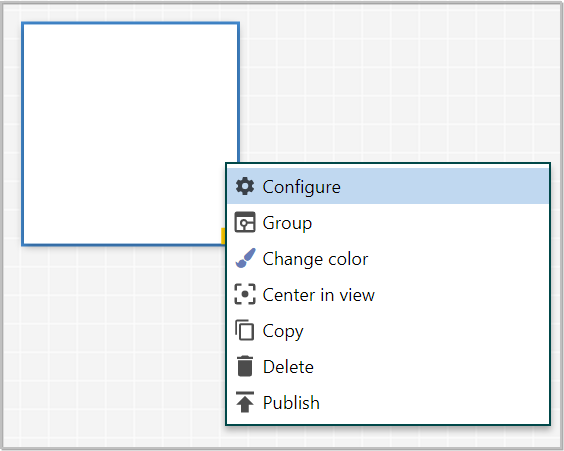

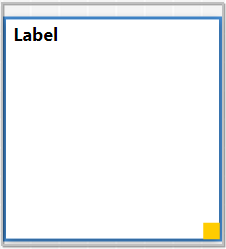

Labels are used to provide documentation to parts of a dataflow. When a Label is dropped onto the canvas, it is created with a default size.

The Label can then be resized by dragging the handle in the bottom-right corner. The Label has no text when initially created.

The text of the Label can be added by right-clicking on the Label and choosing Configure. Add a Label Value and apply the changes; the Label is then shown with that text.

Configuring a Processor

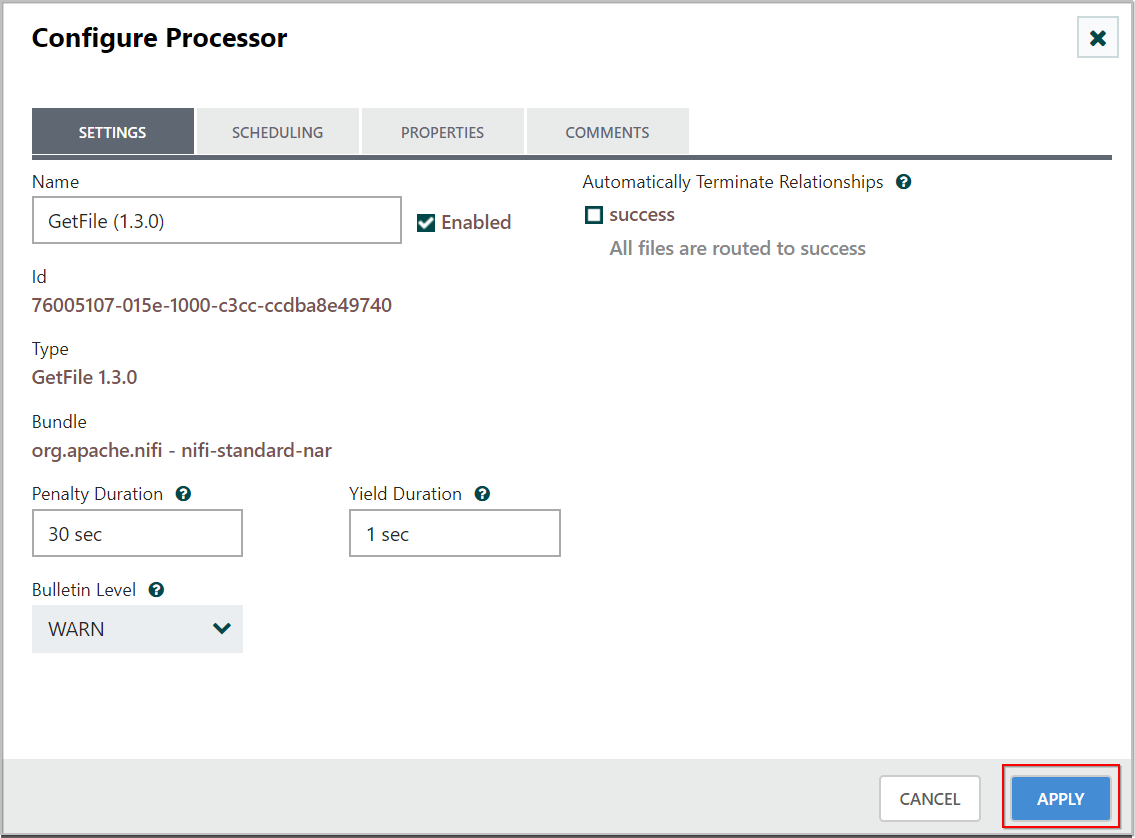

To configure a Processor, right-click on the Processor and select the Configure option from the context menu, or double-click the Processor.

The configuration dialog is opened with four different tabs, each of which is discussed below.

Once you have finished configuring the Processor, you can apply the changes by clicking the Apply button or cancel all changes by clicking the Cancel button.

NOTE

Note that after a Processor has been started, the context menu shown for the Processor no longer has a Configure option but rather has a View Configuration option.

Processor configuration cannot be changed while the Processor is running. You must first stop the Processor and wait for all of its active tasks to complete before configuring the Processor again.

Settings Tab

The first tab in the Processor Configuration dialog is the Settings tab:

This tab contains several different configuration items.

First, it allows the DFM to change the name of the Processor. The name of a Processor by default is the same as the Processor type. Next to the Processor Name is a checkbox, indicating whether the Processor is Enabled. When a Processor is added to the canvas, it is enabled. If the Processor is disabled, it cannot be started.

The disabled state is used to indicate that when a group of Processors is started, such as when a DFM starts an entire Process Group, this (disabled) Processor should be excluded.

Below the Name configuration, the Processor’s unique identifier (Id) is displayed along with the Processor’s type. These values cannot be modified.

Next are two dialogs for configuring ‘Penalty duration’ and ‘Yield duration’. During the normal course of processing a piece of data (a FlowFile), an event may occur that indicates that the data cannot be processed at this time but the data may be processable at a later time. When this occurs, the Processor may choose to Penalize the FlowFile. This will prevent the FlowFile from being Processed for some period of time.

For example, if the Processor is to push the data to a remote service, but the remote service already has a file with the same name as the filename that the Processor is specifying, the Processor may penalize the FlowFile. The ‘Penalty duration’ allows the DFM to specify how long the FlowFile should be penalized. The default value is 30 seconds.

Similarly, the Processor may determine that some situation exists such that the Processor can no longer make any progress, regardless of the data that it is processing. For example, if a Processor is to push data to a remote service and that service is not responding, the Processor cannot make any progress. As a result, the Processor should ‘yield,’ which will prevent the Processor from being scheduled to run for some period of time. That period of time is specified by setting the ‘Yield duration.’ The default value is 1 second.
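The difference between penalizing a FlowFile and yielding a Processor can be made concrete with a small simulation. This is an illustrative sketch under stated assumptions, not NiFi's scheduler: the class and function names are hypothetical, and time is passed in explicitly as seconds so the behavior is easy to follow.

```python
from dataclasses import dataclass

PENALTY_DURATION = 30.0  # seconds; the default stated above
YIELD_DURATION = 1.0     # seconds; the default stated above


@dataclass
class FlowFileState:
    name: str
    penalized_until: float = 0.0


@dataclass
class ProcessorState:
    yielded_until: float = 0.0


def penalize(flowfile, now):
    """Hold back one FlowFile; other FlowFiles are unaffected."""
    flowfile.penalized_until = now + PENALTY_DURATION


def yield_processor(processor, now):
    """Hold back the whole Processor, regardless of which data is queued."""
    processor.yielded_until = now + YIELD_DURATION


def eligible(processor, flowfiles, now):
    """Return the FlowFiles the Processor could work on at time `now`."""
    if now < processor.yielded_until:
        return []
    return [f for f in flowfiles if now >= f.penalized_until]
```

Penalizing affects a single piece of data while the Processor keeps working on everything else; yielding pauses the Processor as a whole, which is why the two durations are configured separately.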

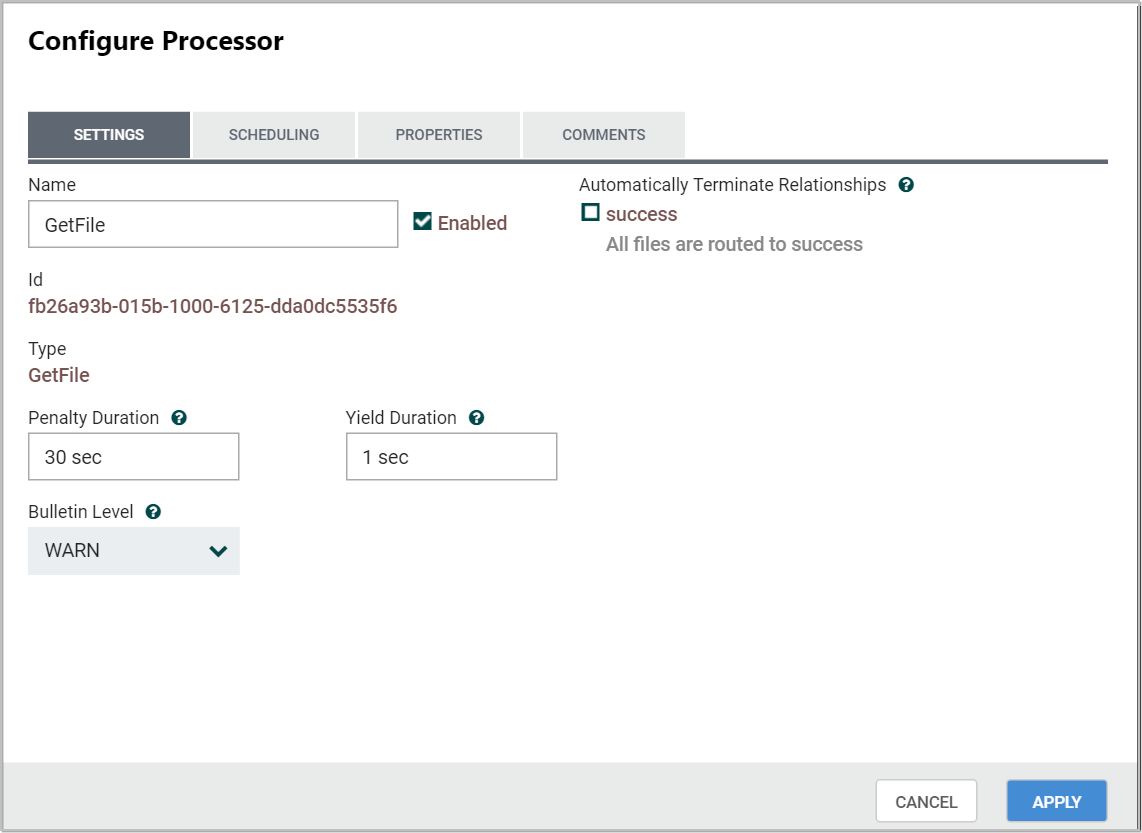

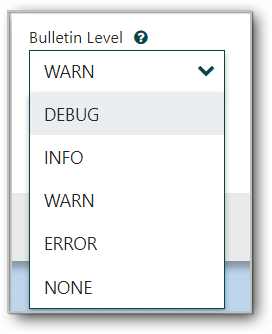

Bulletin level:

The last configurable option on the left-hand side of the Settings tab is the Bulletin level.

Whenever the Processor writes to its log, the Processor also will generate a Bulletin. This setting indicates the lowest level of Bulletin that should be shown in the User Interface.

By default, the Bulletin level is set to WARN, which means it will display all warning and error-level bulletins.
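As a rough illustration of how a minimum level acts as a filter, the sketch below keeps only messages at or above the configured level. The level ordering (DEBUG, INFO, WARN, ERROR) and the function name are assumptions made for this example; this is not NiFi's implementation.

```python
# Assumed ordering, from least to most severe.
LEVELS = ["DEBUG", "INFO", "WARN", "ERROR"]


def visible_bulletins(messages, bulletin_level="WARN"):
    """Keep (level, text) pairs whose level is at or above bulletin_level."""
    threshold = LEVELS.index(bulletin_level)
    return [(level, text) for level, text in messages
            if LEVELS.index(level) >= threshold]
```

With the default of WARN, an INFO message is filtered out while WARN and ERROR messages are shown.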

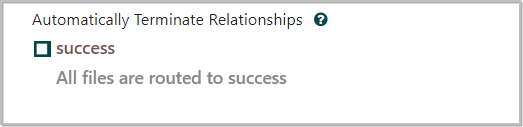

Auto-terminate relationships:

The right-hand side of the Settings tab contains an ‘Auto-terminate relationships’ section. Each of the Relationships that is defined by the Processor is listed here, along with its description.

In order for a Processor to be considered valid and able to run, each Relationship defined by the Processor must be either connected to a downstream component or auto-terminated. If a Relationship is auto-terminated, any FlowFile that is routed to that Relationship will be removed from the flow and its processing considered complete.

Any Relationship that is already connected to a downstream component cannot be auto-terminated. The Relationship must first be removed from any Connection that uses it. Additionally, for any Relationship that is selected to be auto-terminated, the auto-termination status will be cleared (turned off) if the Relationship is added to a Connection.
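The validity rule above can be expressed as a small check. This is a sketch under the assumption that Relationships are represented as plain strings; the function name is hypothetical and does not correspond to a NiFi API.

```python
def relationship_problems(defined, connected, auto_terminated):
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []
    for rel in sorted(defined):
        is_connected = rel in connected
        is_terminated = rel in auto_terminated
        if is_connected and is_terminated:
            problems.append(f"'{rel}' is both connected and auto-terminated")
        elif not is_connected and not is_terminated:
            problems.append(f"'{rel}' is neither connected nor auto-terminated")
    return problems
```

For a Processor with `success` and `failure` Relationships, connecting `success` and auto-terminating `failure` yields no problems, while leaving `failure` unhandled yields one.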

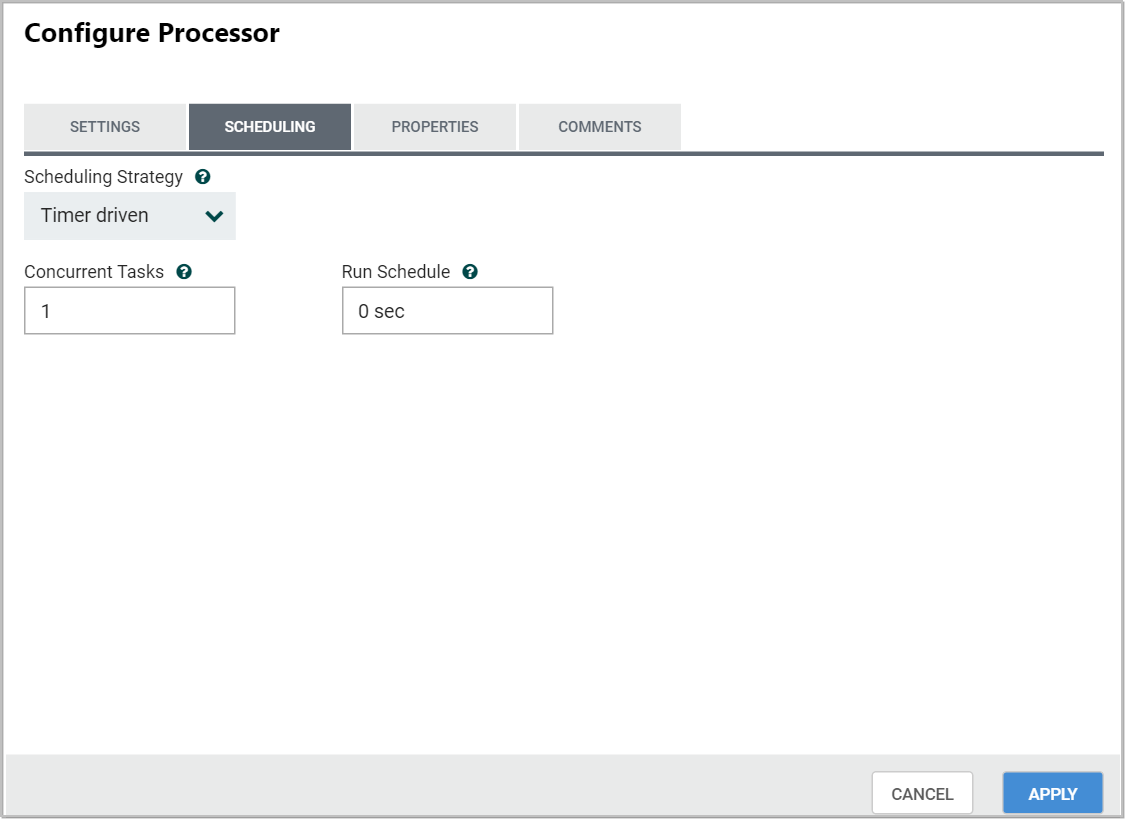

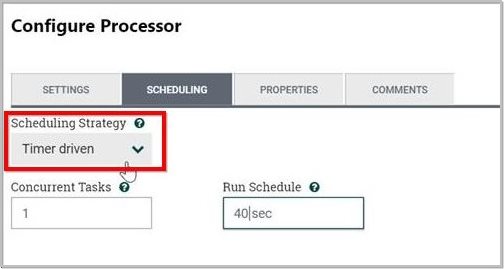

Scheduling Tab

The second tab in the Processor Configuration dialog is the Scheduling Tab:

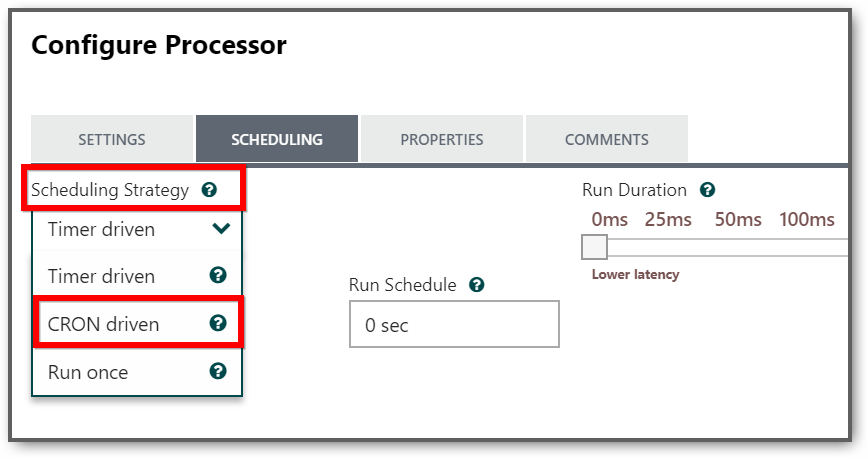

The first configuration option is the Scheduling Strategy. There are four options for scheduling components:

- Timer driven: This is the default mode. The Processor will be scheduled to run on a regular interval. The interval at which the Processor is run is defined by the ‘Run schedule’ option (see below).

In the Run schedule, you can specify seconds, minutes, hours, or days, for example:

| Time Interval | Run Schedule |
|---|---|
| Seconds | 5 sec |
| Minutes | 30 min |
| Hours | 2 hr |
| Days | 1 day |

- Event driven: When this mode is selected, the Processor will be triggered to run by an event, and that event occurs when FlowFiles enter Connections feeding this Processor. This mode is currently considered experimental and is not supported by all Processors. When this mode is selected, the ‘Run schedule’ option is not configurable, as the Processor is not triggered to run periodically but as the result of an event. Additionally, this is the only mode for which the ‘Concurrent tasks’ option can be set to 0. In this case, the number of threads is limited only by the size of the Event-Driven Thread Pool that the administrator has configured.

-

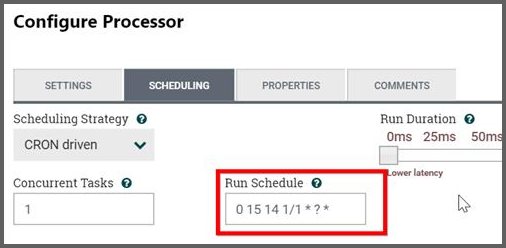

CRON driven: While using the CRON driven scheduling mode, the Processor is scheduled to run periodically, like Timer driven scheduling mode. However, the CRON driven mode provides more flexibility at the expense of increasing the complexity of the configuration. This value is made up of six fields, each separated by a space. These fields include:

- Seconds

- Minutes

- Hours

- Day of a Month

- Month

- Day of a Week

- Year

The value of each field can be a number, a range, or an increment.

- Number: Specifies one or more valid values. You can enter more than one value using a comma-separated list. For example, “* 0,10,20 * ? * * *” will schedule the processor to run at every second, at 00, 10, and 20 minutes, of every hour.

- Range: Specifies a range using the <number>-<number> syntax. For example, “0-5 * * ? * * *” will schedule the processor to run at every second between 00 and 05 seconds, of every minute.

- Increment: Specifies an increment using <start value>/<increment> syntax. For example, “0/10 20 * ? * * *” will schedule the processor to run at every 10 seconds starting at 00 seconds, at 20 minutes, of every hour.

Several special characters are also valid:

- *: Indicates that all values are valid for that field. For example, “* * * ? * * *” will schedule the processor to run at every second.

- ?: Indicates that no value is specified. This special character is valid in the Day of Month and Day of Week fields. For example, “* 20 10 ? * * *” will schedule the processor to run at every second at 10:20 am.

- L: You can append L to one of the Day of Week values to specify the last occurrence of this day in the month. For example, 1L indicates the last Sunday of the month. “* 20 10 ? * 1L *” will schedule the processor to run at every second at 10:20 am, the last Sunday of the month, every month.

For example:

- The string “0 0 13 * * ?” indicates that you want to schedule the processor to run at 1:00 PM every day.

- The string “0 20 14 ? * MON-FRI” indicates that you want to schedule the processor to run at 2:20 PM every Monday through Friday.

- The string “0 15 10 ? * 6L 2011-2017” indicates that you want to schedule the processor to run at 10:15 AM, on the last Friday of every month, between 2011 and 2017.

For additional information and examples, refer to the Cron Trigger Tutorial in the Quartz documentation.

- Run once: In this mode the Processor will run once and then automatically stop. This is convenient when developing and debugging flows, or when building “visual scripts” for ad-hoc process integration or iterative analytics. For example, individual processors set to ‘Run once’ can be selected on the canvas with a shift-click. Clicking ‘Start’ on the Operate Palette then starts those processors, which run once and stop. You can then modify processing parameters and repeat.
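To make the CRON driven field syntax more concrete, here is a minimal sketch of how a single field could be matched against a value. It is not the Quartz implementation: it only handles `*`, `?`, comma-separated numbers, ranges, and `<start>/<increment>`, and the helper `time_matches` checks only the Seconds, Minutes, and Hours fields. Day, month, and year fields, names such as MON-FRI, and the `L` character are deliberately left out. Both function names are hypothetical.

```python
def field_matches(expr, value, minimum=0):
    """Return True if one cron field expression accepts the given value."""
    if expr in ("*", "?"):
        return True
    for part in expr.split(","):
        if "/" in part:
            # Increment: <start value>/<increment>
            start_text, step_text = part.split("/")
            start = minimum if start_text == "*" else int(start_text)
            if value >= start and (value - start) % int(step_text) == 0:
                return True
        elif "-" in part:
            # Range: <number>-<number>, inclusive on both ends
            low, high = (int(x) for x in part.split("-"))
            if low <= value <= high:
                return True
        elif int(part) == value:
            # Single number
            return True
    return False


def time_matches(cron, hour, minute, second):
    """Check only the first three fields (Seconds, Minutes, Hours)."""
    sec_field, min_field, hour_field = cron.split()[:3]
    return (field_matches(sec_field, second)
            and field_matches(min_field, minute)
            and field_matches(hour_field, hour))
```

For instance, with the expression “0/10 20 * ? * * *” from the Increment example, 05:20:30 matches while 05:20:35 and 05:21:30 do not.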

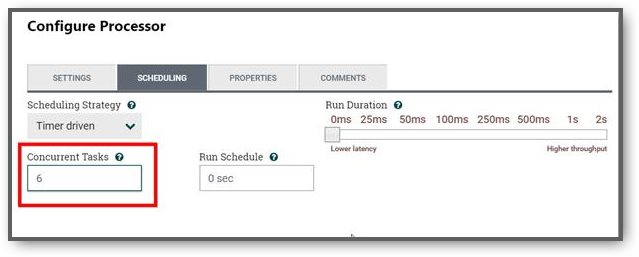

Parallel Processing: The Scheduling Tab provides a configuration option named ‘Concurrent tasks.’ This controls how many threads the Processor will use. In other words, it controls how many FlowFiles this Processor can process at the same time. Increasing this value will typically allow the Processor to handle more data in the same amount of time. However, it does this by using system resources that then are not usable by other Processors. This essentially provides a relative weighting of Processors: it controls how much of the system’s resources should be allocated to this Processor instead of other Processors. This field is available for most Processors. There are, however, some types of Processors that can only be scheduled with a single Concurrent task.
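As a loose analogy for ‘Concurrent tasks,’ the sketch below uses a thread pool whose size plays the role of that setting. The function name and the idea of passing a handler callable are assumptions for illustration only; NiFi schedules its own threads internally.

```python
from concurrent.futures import ThreadPoolExecutor


def process_all(flowfiles, handler, concurrent_tasks=1):
    """Apply handler to every item using at most concurrent_tasks threads."""
    with ThreadPoolExecutor(max_workers=concurrent_tasks) as pool:
        # map() returns results in input order, even if work finishes out of order.
        return list(pool.map(handler, flowfiles))
```

Raising `concurrent_tasks` lets more items be handled at once, at the cost of threads that other work can no longer use.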

Run schedule: The ‘Run schedule’ dictates how often the Processor should be scheduled to run. The valid values for this field depend on the selected Scheduling Strategy (see above). If using the Event driven Scheduling Strategy, this field is not available. When using the Timer driven Scheduling Strategy, this value is a time duration specified by a number followed by a time unit. For example, 1 second or 5 mins. The default value of 0 sec means that the Processor should run as often as possible as long as it has data to process. This is true for any time duration of 0, regardless of the time unit (i.e., 0 sec, 0 mins, 0 days). For an explanation of values that are applicable for the CRON driven Scheduling Strategy, see the description of the CRON driven Scheduling Strategy itself.
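The “number followed by a time unit” format for the Timer driven strategy can be illustrated with a small parser. The set of accepted unit spellings below is an assumption based on the examples in this section (such as 5 sec, 30 min, 2 hr, 1 day, and 5 mins); the product may accept more or fewer spellings, and the function name is hypothetical.

```python
import re

# Assumed unit spellings, mapped to seconds.
UNIT_SECONDS = {
    "sec": 1, "secs": 1, "second": 1, "seconds": 1,
    "min": 60, "mins": 60, "minute": 60, "minutes": 60,
    "hr": 3600, "hrs": 3600, "hour": 3600, "hours": 3600,
    "day": 86400, "days": 86400,
}


def run_schedule_seconds(text):
    """Convert a duration such as '5 sec' or '30 min' to a number of seconds."""
    match = re.fullmatch(r"\s*(\d+)\s*([a-zA-Z]+)\s*", text)
    if match is None:
        raise ValueError(f"not a duration: {text!r}")
    amount, unit = int(match.group(1)), match.group(2).lower()
    if unit not in UNIT_SECONDS:
        raise ValueError(f"unknown time unit: {unit!r}")
    return amount * UNIT_SECONDS[unit]
```

Any zero duration, whatever its unit, converts to 0, which corresponds to running as often as possible.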

When configured for clustering, an Execution setting will be available. This setting is used to determine which node(s) the Processor will be scheduled to execute. Selecting All Nodes will result in this Processor being scheduled on every node in the cluster. Selecting Primary Node will result in this Processor being scheduled on the Primary Node only.

The right-hand side of the tab contains a slider for choosing the ‘Run duration.’ This controls how long the Processor should be scheduled to run each time that it is triggered. On the left-hand side of the slider, it is marked ‘Lower latency’ while the right-hand side is marked ‘Higher throughput.’ When a Processor finishes running, it must update the repository in order to transfer the FlowFiles to the next Connection. Updating the repository is expensive, so the more work that can be done at once before updating the repository, the more work the Processor can handle (Higher throughput). However, this means that the next Processor cannot start processing those FlowFiles until the previous Processor updates the repository, so the latency will be longer (the time required to process the FlowFile from beginning to end will be longer). The slider therefore provides a spectrum from which the DFM can choose to favor Lower Latency or Higher Throughput.
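The trade-off can be illustrated with a toy cost model. Everything here is hypothetical: the function name, the idea of a fixed per-FlowFile cost and a fixed repository-update cost, and the numbers used with it. It is meant only to show why larger batches raise throughput and latency together.

```python
import math


def batch_cost(n_flowfiles, per_file_ms, commit_ms, batch_size):
    """Return (total_ms, first_available_ms) for a simple batching model.

    total_ms: time to process everything, paying one repository update per batch.
    first_available_ms: time until the first batch reaches the next Connection.
    """
    commits = math.ceil(n_flowfiles / batch_size)
    total_ms = n_flowfiles * per_file_ms + commits * commit_ms
    first_available_ms = min(batch_size, n_flowfiles) * per_file_ms + commit_ms
    return total_ms, first_available_ms
```

With 100 FlowFiles, 1 ms of work each, and a 10 ms repository update, a batch size of 1 gives a total of 1100 ms with the first FlowFile available after 11 ms, while a batch size of 25 gives a total of 140 ms with the first FlowFiles available after 35 ms.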

Properties Tab

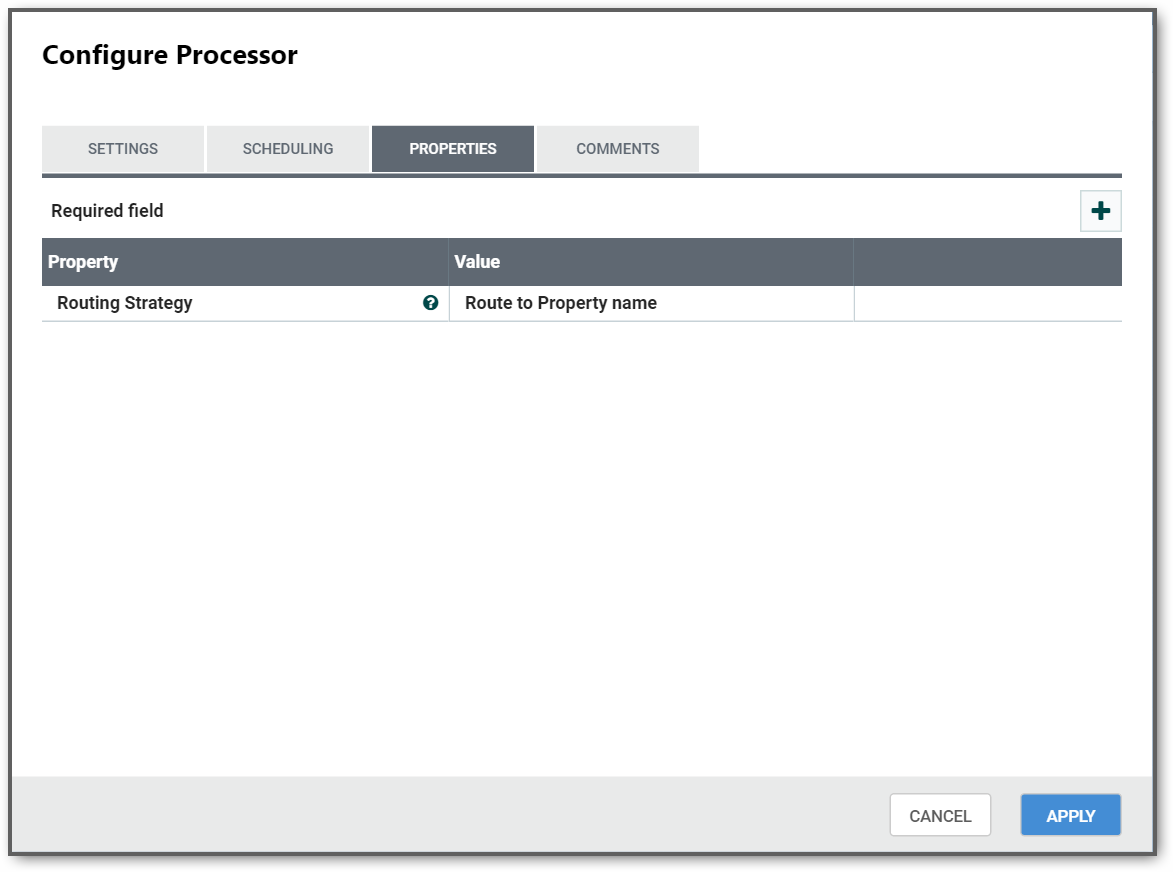

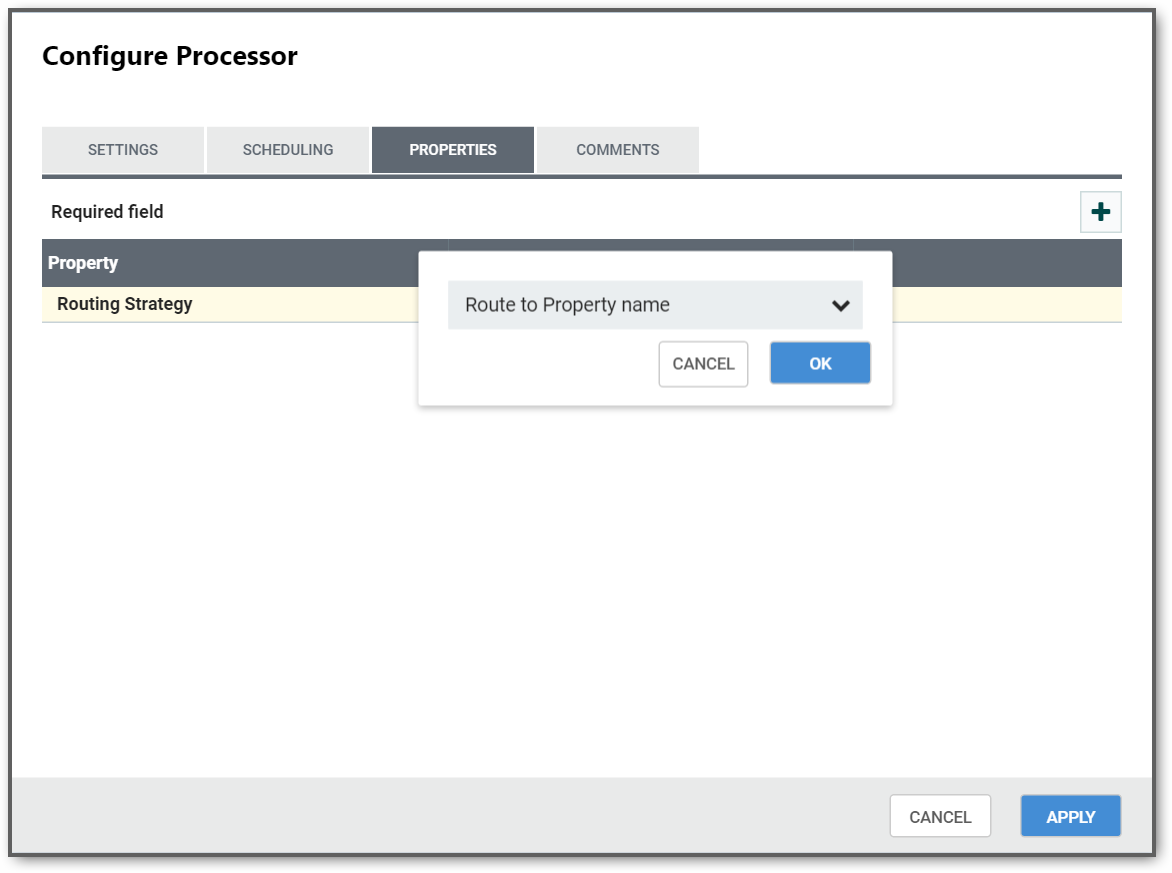

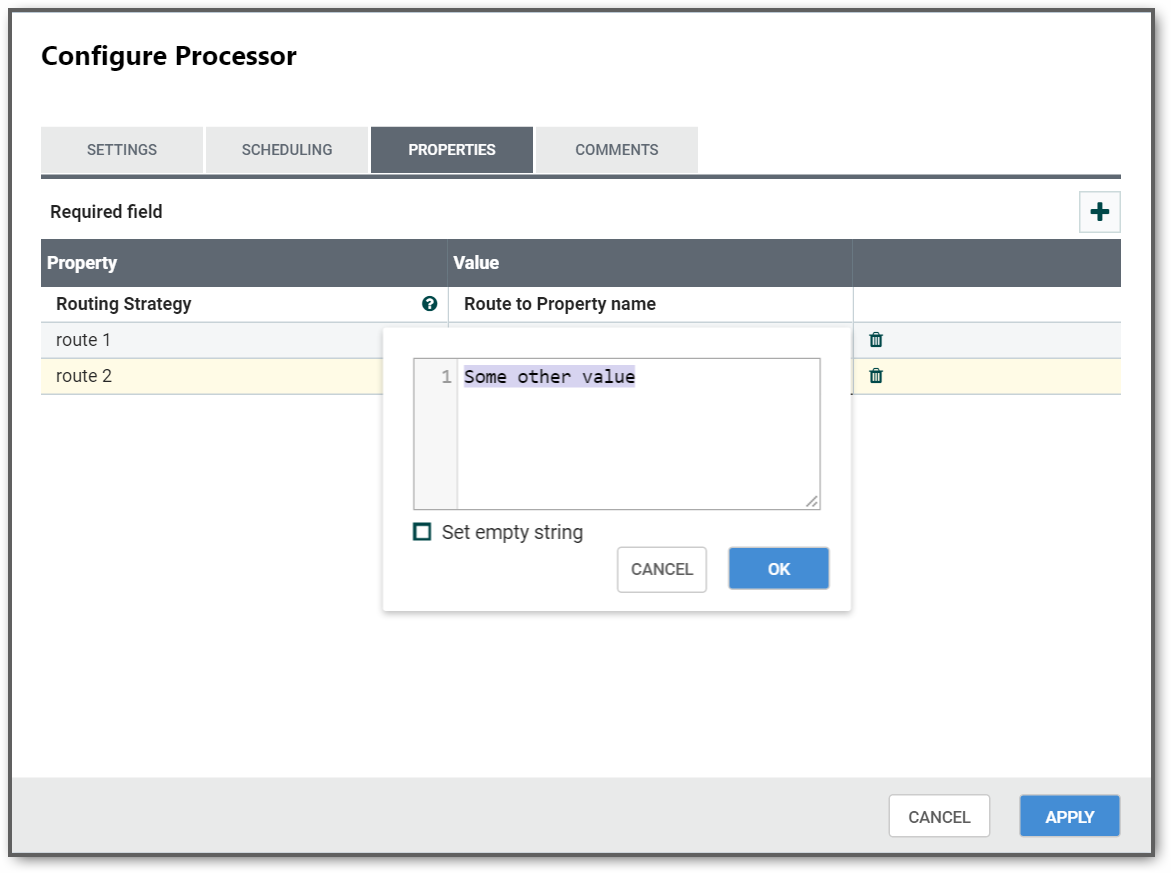

The Properties Tab provides a mechanism to configure Processor-specific behavior. There are no default properties. Each type of Processor must define which Properties make sense for its use case. Below, we see the Properties Tab for a RouteOnAttribute Processor:

This Processor, by default, has only a single property: ‘Routing Strategy.’ The default value is ‘Route on Property name.’ Next to the name of this property is a small question-mark symbol. This help symbol is seen in other places throughout the User Interface, and it indicates that more information is available. Hovering over this symbol with the mouse will provide additional details about the property and the default value, as well as historical values that have been set for the Property.

Clicking on the value for the property will allow a DFM to change the value. Depending on the values that are allowed for the property, the user is either provided a drop-down from which to choose a value or is given a text area to type a value:

In the top-right corner of the tab is a button for adding a New Property. Clicking this button will provide the DFM with a dialog to enter the name and value of a new property. Not all Processors allow User-Defined properties. In processors that do not allow them, the Processor becomes invalid when User-Defined properties are applied. RouteOnAttribute, however, does allow User-Defined properties. In fact, this Processor will not be valid until the user has added a property.

NOTE

After a User-Defined property has been added, an icon will appear on the right-hand side of that row. Clicking this icon will remove the User-Defined property from the Processor.

Some processors also have an Advanced User Interface (UI) built into them. For example, the UpdateAttribute processor has an Advanced UI. To access the Advanced UI, click the Advanced button that appears at the bottom of the Configure Processor window. Only processors that have an Advanced UI will have this button.

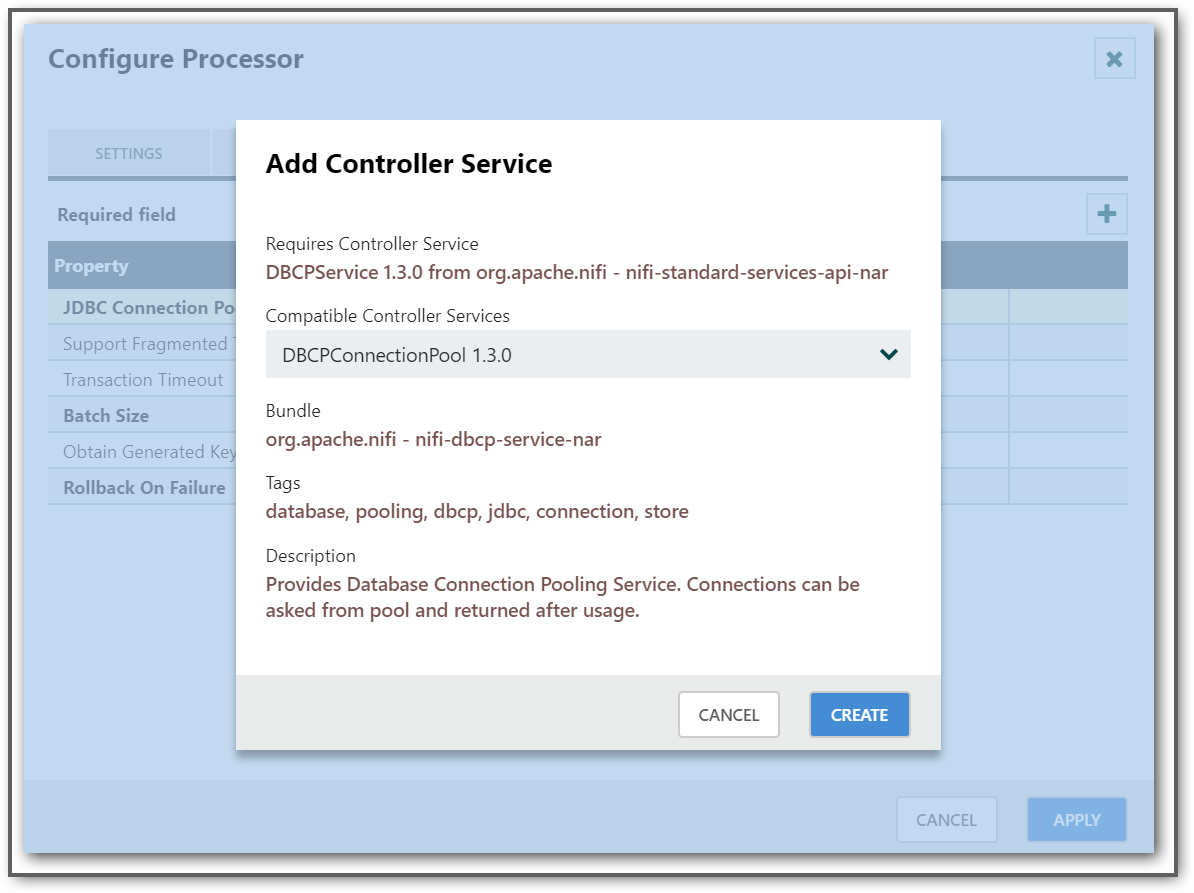

Some processors have properties that refer to other components, such as Controller Services, which also need to be configured. For example, the GetHTTP processor has an SSLContextService property, which refers to the StandardSSLContextService controller service. When DFMs want to configure this property but have not yet created and configured the controller service, they have the option to create the service on the spot, as depicted in the image below. For more information about configuring Controller Services, see the Controller Services and Reporting Tasks section.

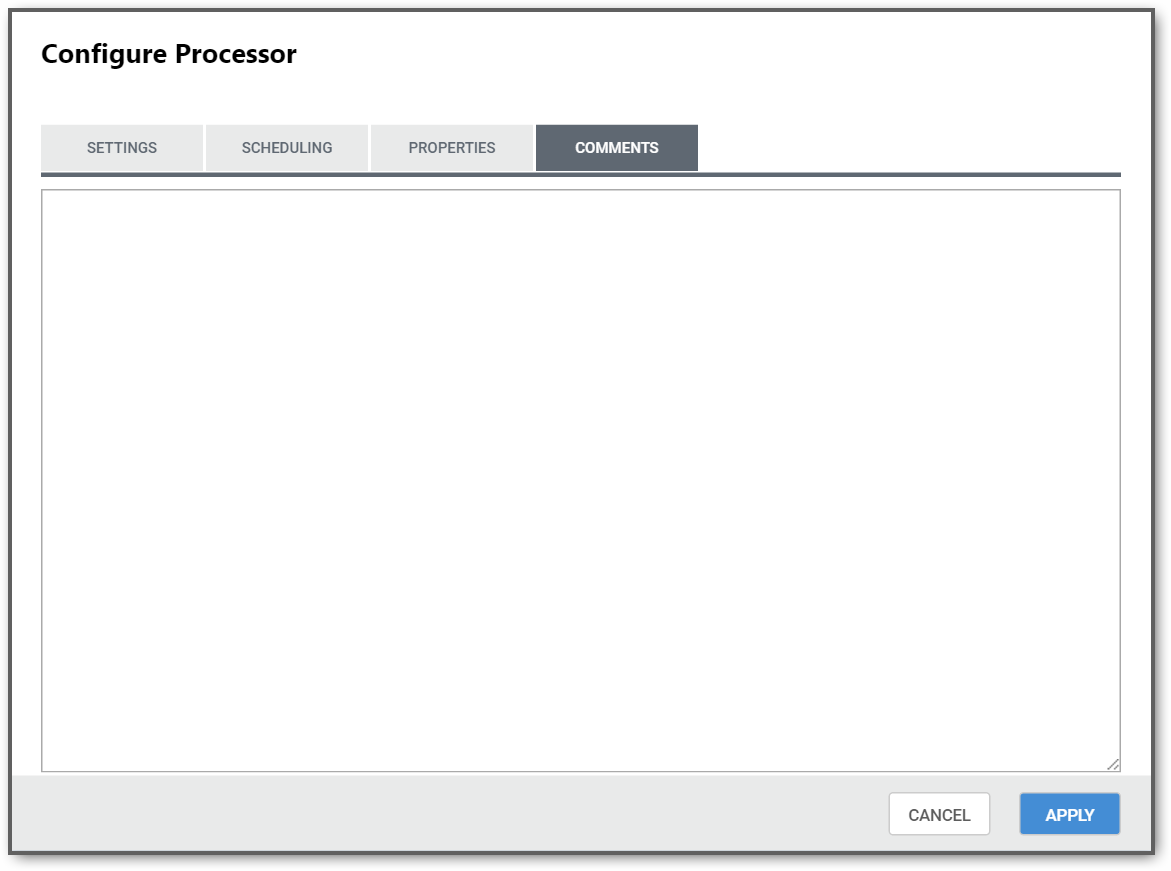

Comments Tab

The last tab in the Processor configuration dialog is the Comments tab. This tab simply provides an area for users to include whatever comments are appropriate for this component. Use of the Comments tab is optional.

Additional Help

The user may access additional documentation about each Processor’s usage by right-clicking on the Processor and then selecting ‘Usage’ from the context menu. Alternatively, clicking the ‘Help’ link in the top-right corner of the User Interface will provide a Help page with all of the documentation, including usage documentation for all the Processors that are available. Clicking on the desired Processor in the list will display its usage documentation.

Creating Custom Processor

Prerequisites:

- Maven 3.3.x or later

- Java 8 or later

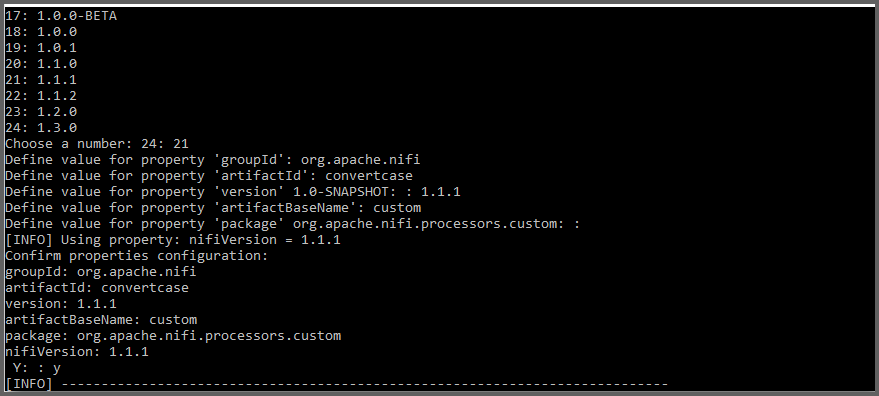

Creating maven archetype

Step 1: Create a directory for the custom processor project, for example:

Drive:\CustomProcessor

Step 2: Run the following command from a command prompt in that directory:

mvn archetype:generate

Step 3: Maven prompts for additional parameters while generating the archetype:

Choose a number or apply filter (format: [groupId:]artifactId, case sensitive contains): 990: nifi

Choose archetype:

1: remote -> org.apache.nifi:nifi-processor-bundle-archetype (-)

2: remote -> org.apache.nifi:nifi-service-bundle-archetype (-)

Choose a number or apply filter (format: [groupId:]artifactId, case sensitive contains): : 1

Choose org.apache.nifi:nifi-processor-bundle-archetype version:

1: 0.0.2-incubating

2: 0.1.0-incubating

3: 0.2.0-incubating

4: 0.2.1

5: 0.3.0

6: 0.4.0

7: 0.4.1

8: 0.5.0

9: 0.5.1

10: 0.6.0

11: 0.6.1

12: 0.7.0

13: 0.7.1

14: 0.7.2

15: 0.7.3

16: 0.7.4

17: 1.0.0-BETA

18: 1.0.0

19: 1.0.1

20: 1.1.0

21: 1.1.1

22: 1.1.2

23: 1.2.0

24: 1.3.0

Choose a number: 24: 21

Define value for property 'groupId': : org.apache.nifi

Define value for property 'artifactId': : convertcase

Define value for property 'version': 1.0-SNAPSHOT: : 1.1.

Define value for property 'artifactBaseName': : custom

Define value for property 'package': org.apache.nifi.processors.custom: :

[INFO] Using property: nifiVersion = 1.1.1

Confirm properties configuration:

groupId: org.apache.nifi

artifactId: convertcase

version: 1.1.1

artifactBaseName: custom

package: org.apache.nifi.processors.custom

nifiVersion: 1.1.1

Y: : y

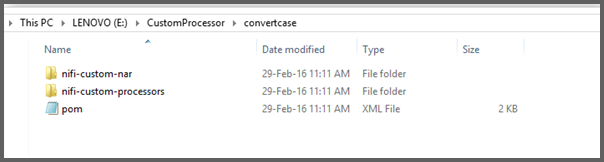

Step 4: Now you can find the project files under Drive:\CustomProcessor\convertcase

Step 5: Open the project in NetBeans or Eclipse

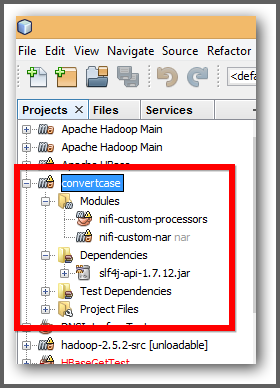

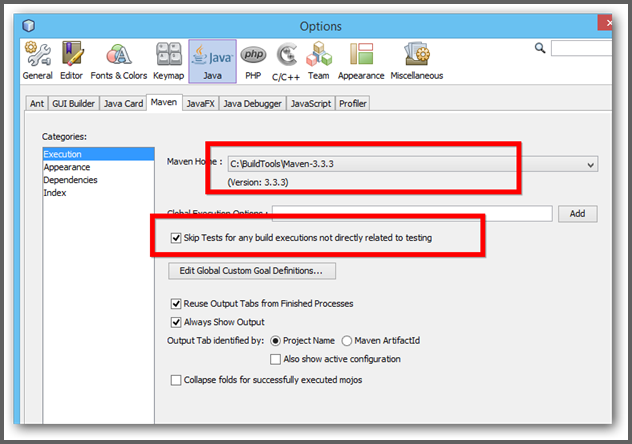

Step 6: Set the Maven Home in NetBeans. Open Tools > Options > Java > Maven and update the Maven Home setting.

Step 7: The project contains two modules:

a. nifi-custom-processors

b. nifi-custom-nar

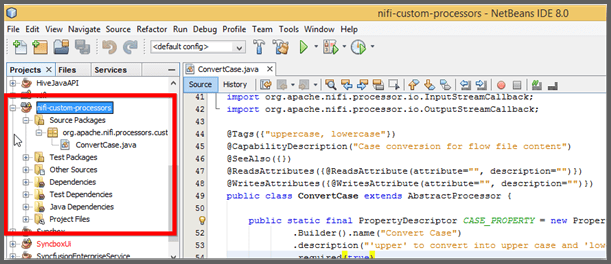

Step 8: Open the nifi-custom-processors project

Step 9: Locate MyProcessor.java and rename it as required.

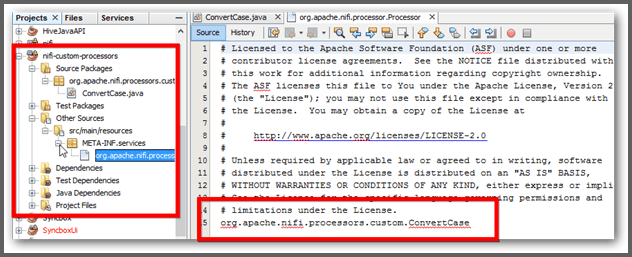

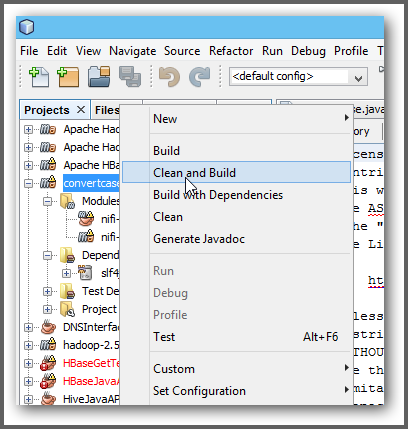

Step 10: Make the same name change in nifi-custom-processors/Other Sources/src/main/resources/META-INF.services/org.apache.nifi.processor.Processor

Step 11: Build the project to generate the required JAR and NAR files.

Step 12: After a successful build, you can find the NAR file under

Drive:\CustomProcessor\convertcase\nifi-custom-nar\target\nifi-custom-nar-1.1.1.nar

Step 13: Copy the NAR file into “/syncfusion_location/SDK/NIFI/lib” and restart the Data Integration service.

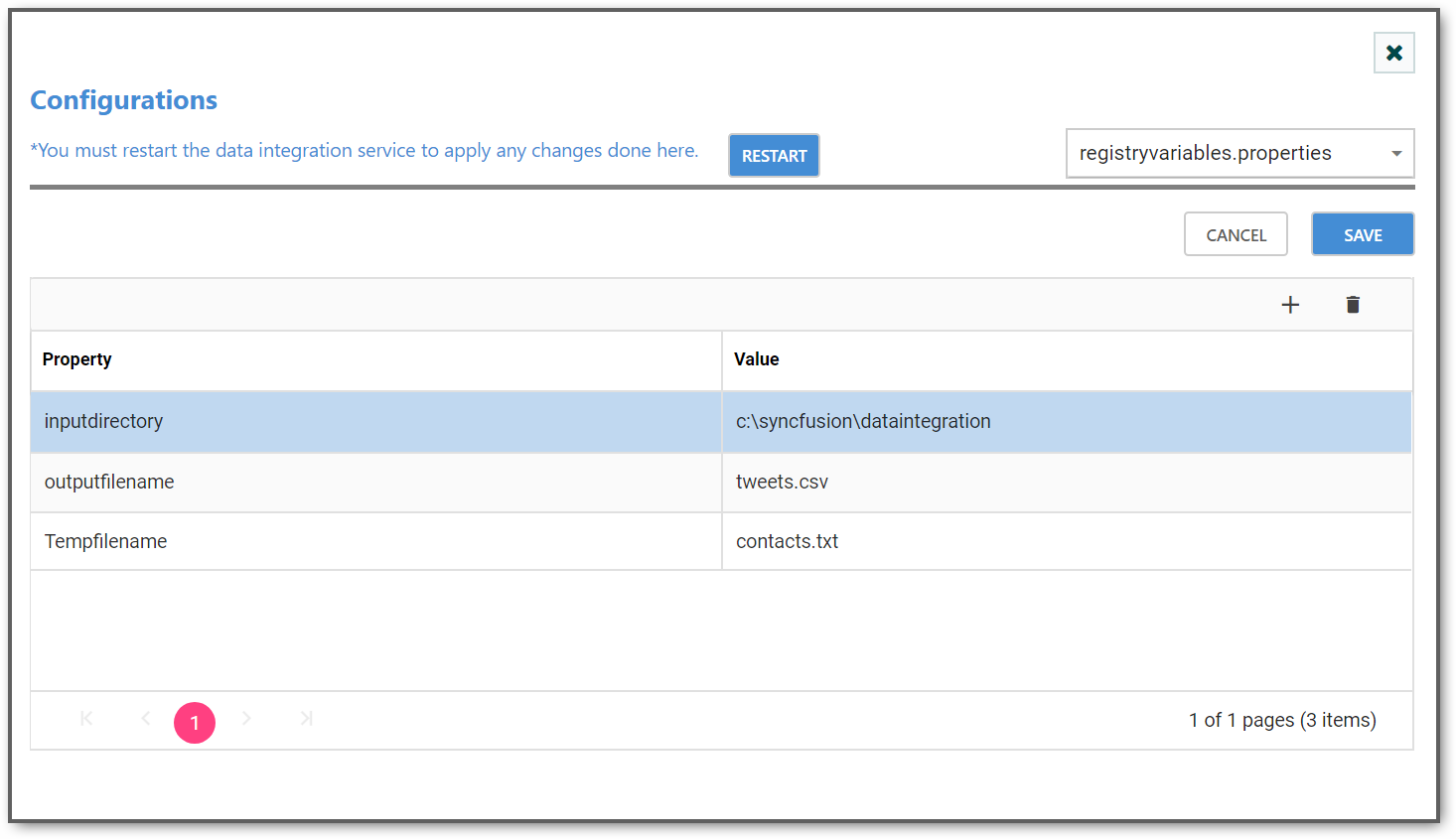

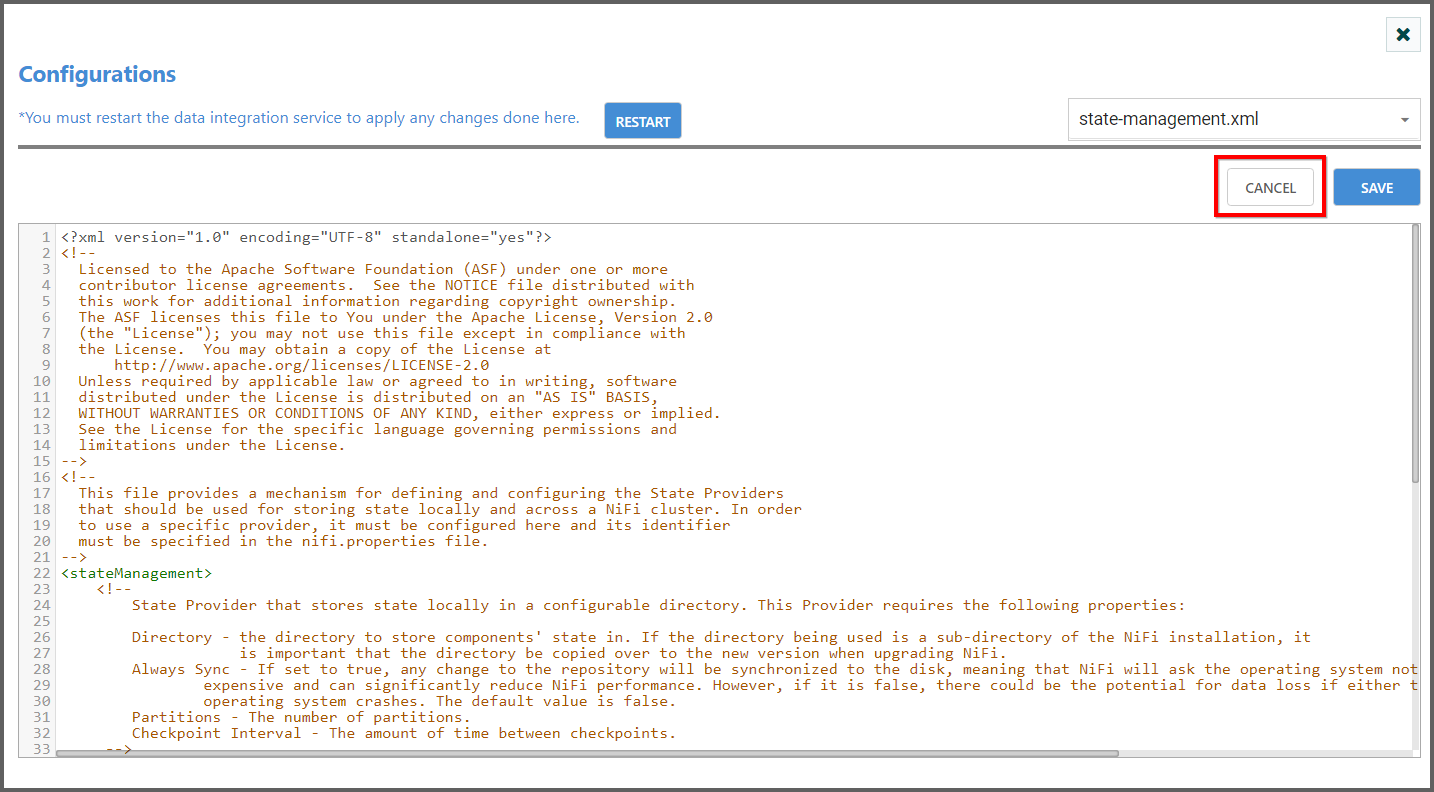

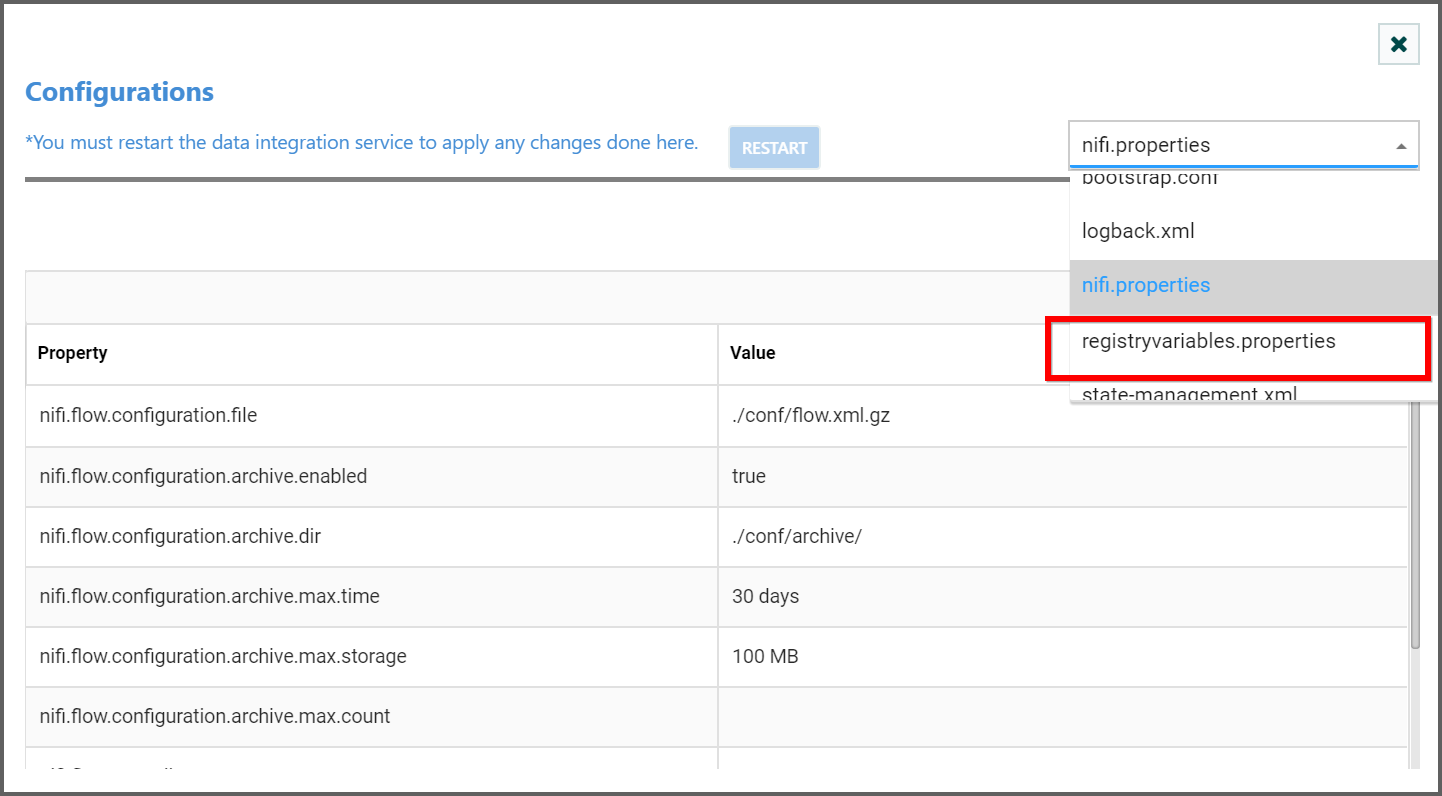

Configurations

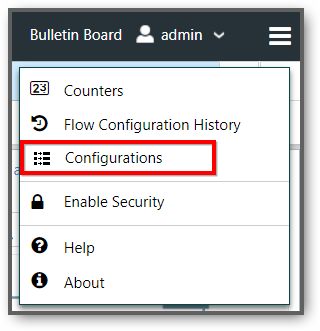

You can edit the Data Integration configuration files from the header menu. To edit a configuration file, click Configurations in the header menu.

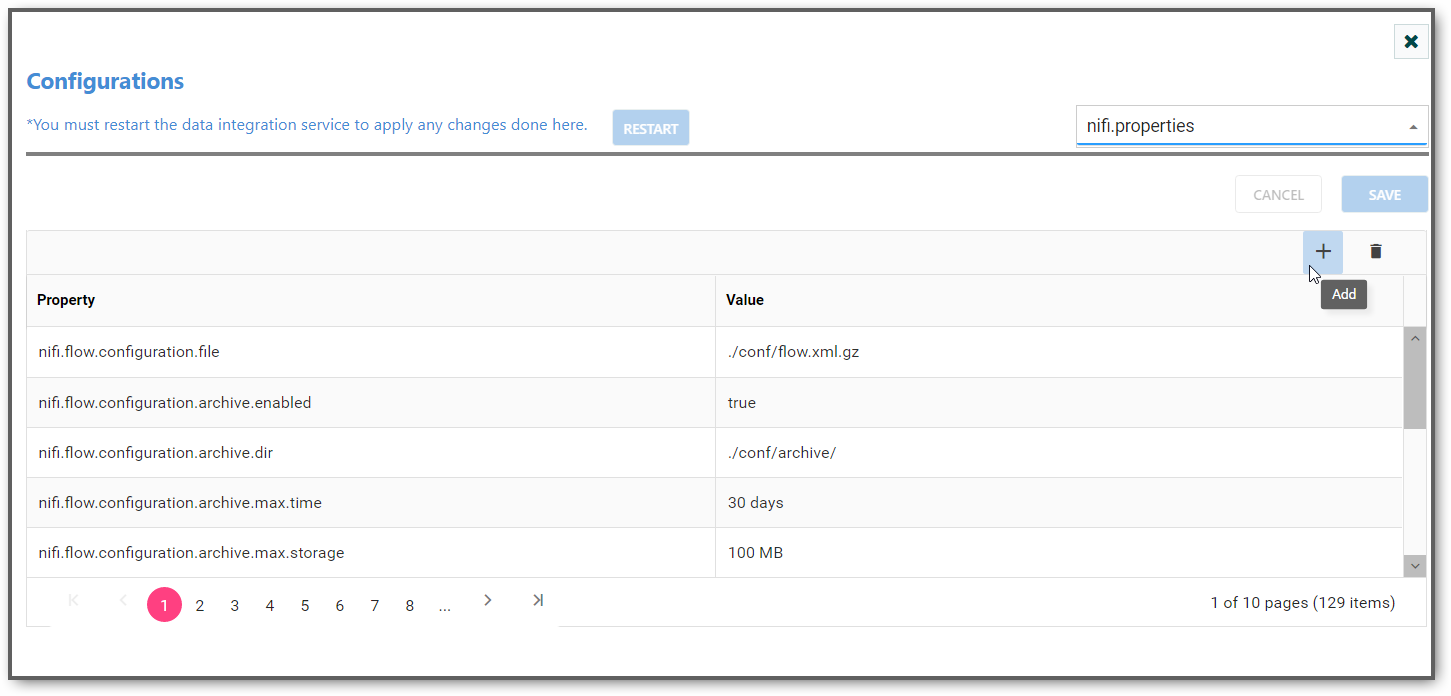

Data Integration have some set of files which helps to increase the Data Integration efficiency and throughput if the user can change the properties according to their machine specification. Below are the Data Integration Configuration files.

-

nifi.properties

-

zookeeper.properties

-

bootstrap.conf

-

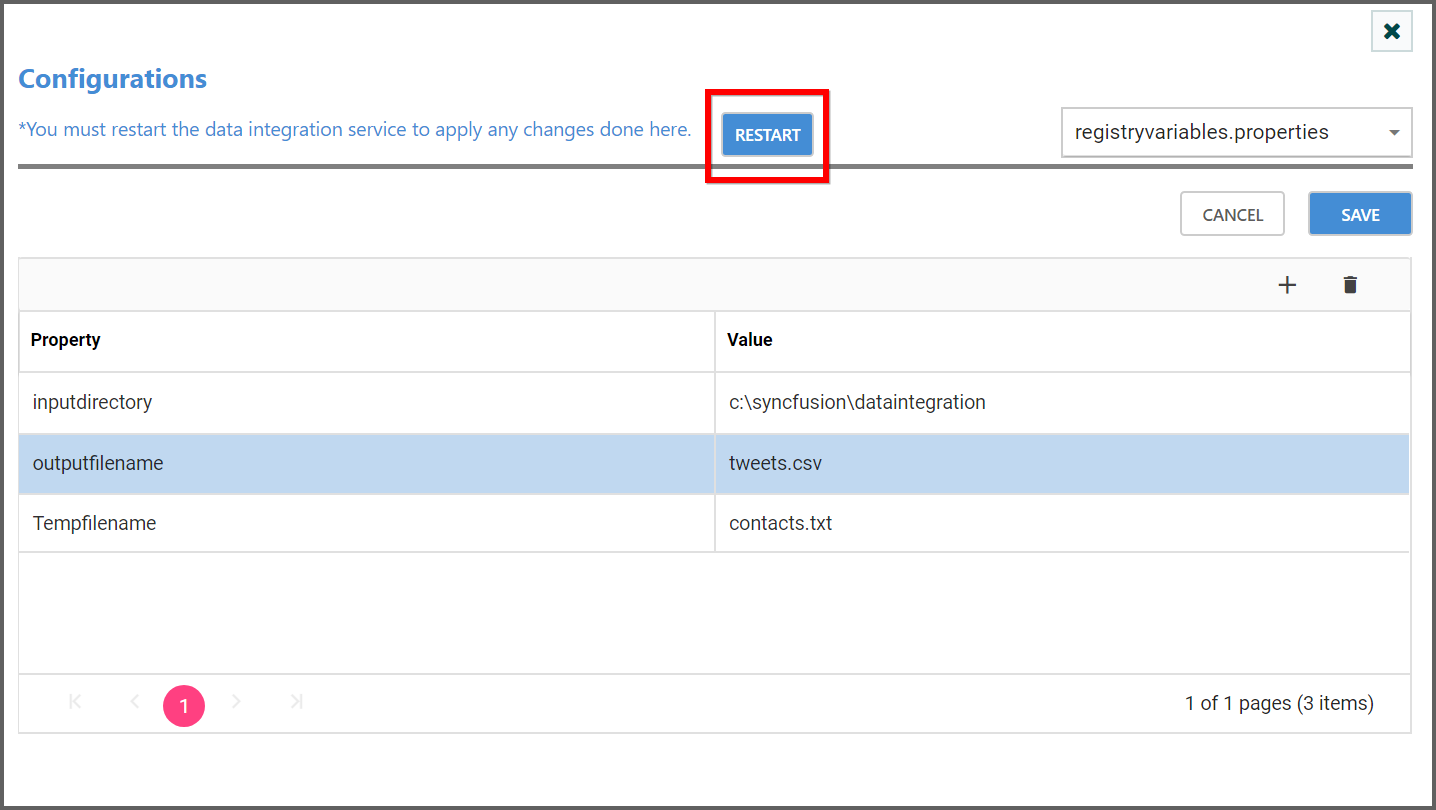

registryvariables.properties

-

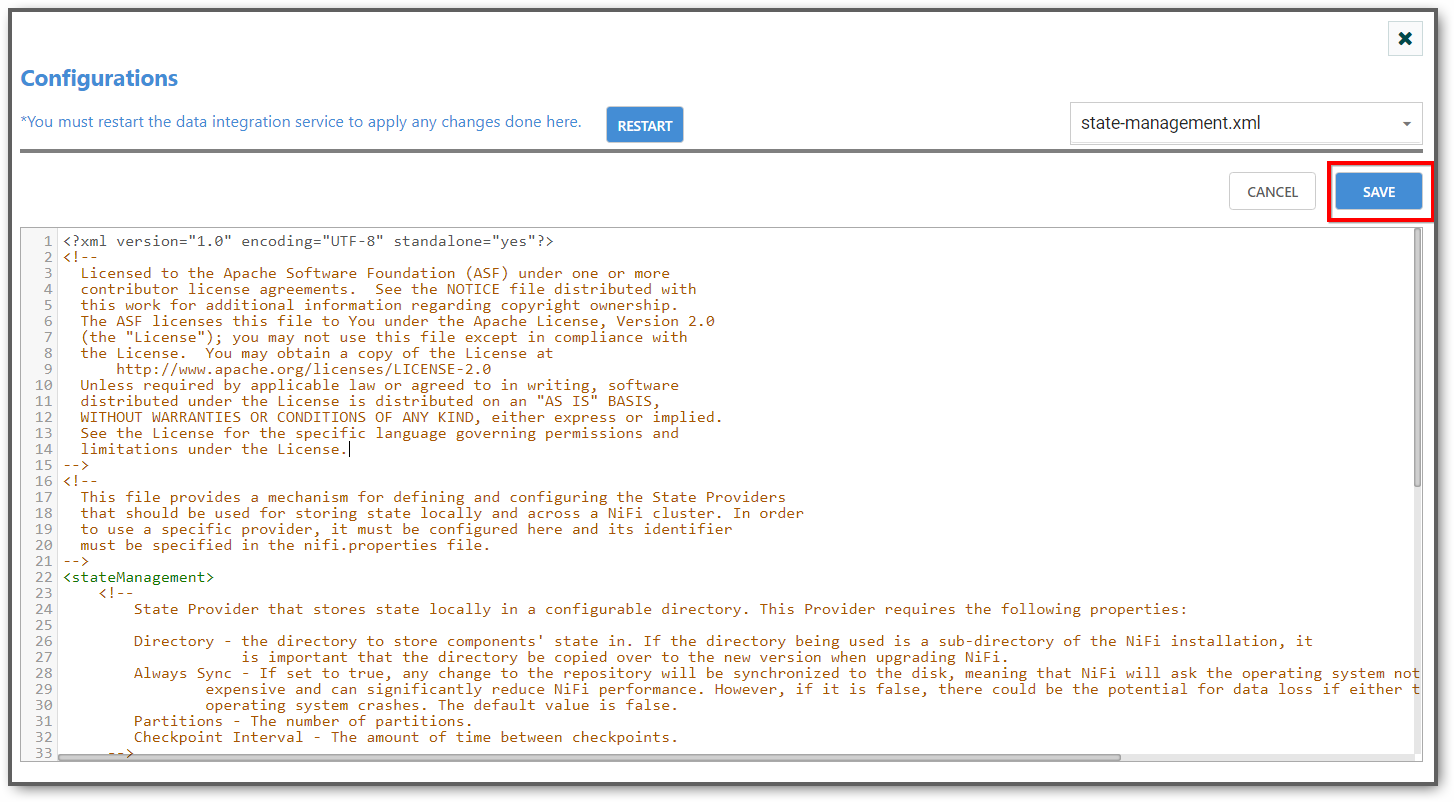

state-management.xml

-

logback.xml

-

bootstrap-notification-services.xml

You can select a file from the drop-down and configure it. XML files open in an XML editor where you can edit them. In the configuration dialog you can add, edit, and delete properties. To add a new property and its value, click the add icon. To delete a property, click the delete icon. To edit an existing property, double-click its row. After making changes to the file, restart the Data Integration Platform for the changes to take effect.

Add:

- Add a new property using the add button in the grid.

- Save the added property by clicking outside the editor.

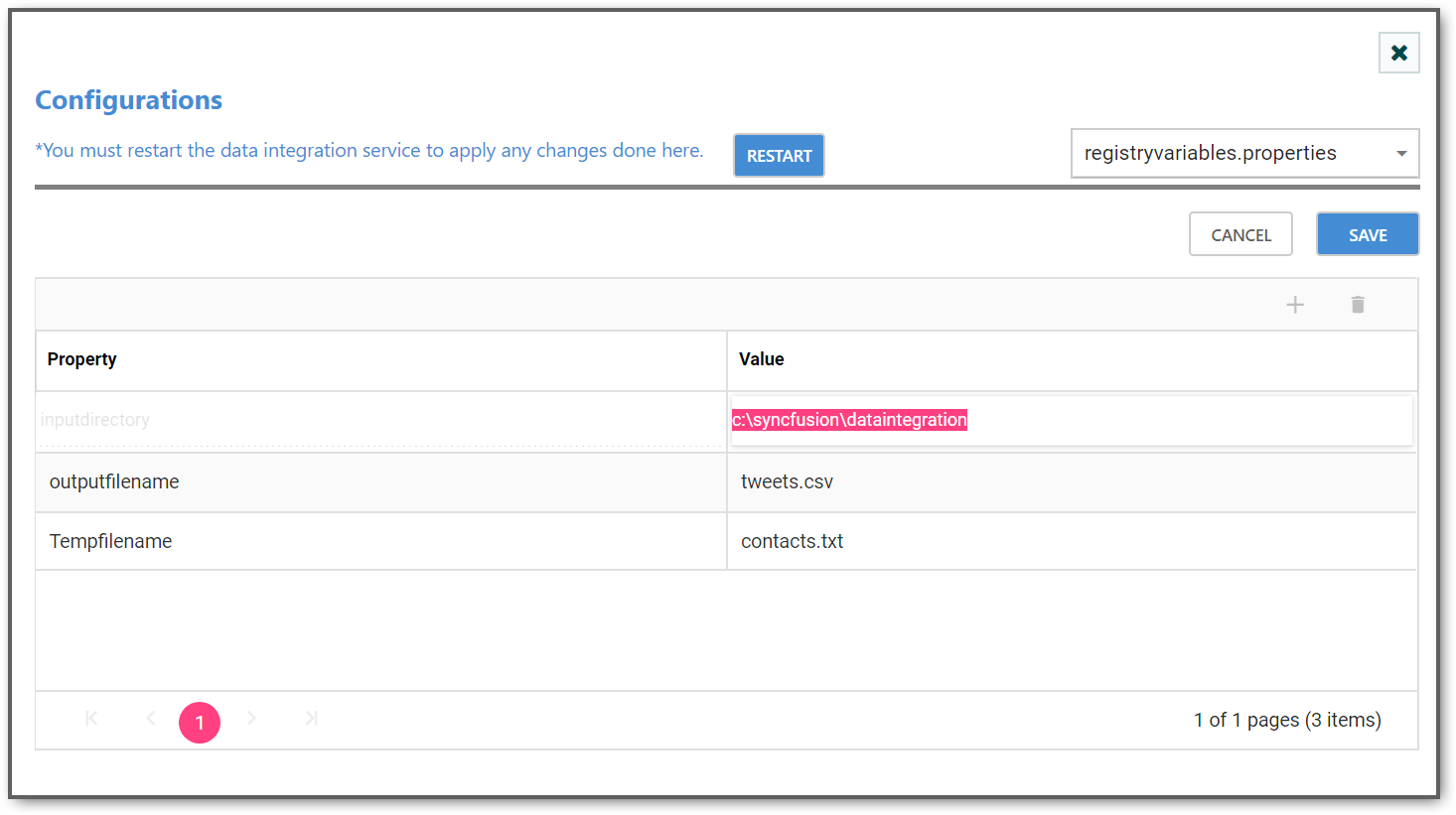

Edit:

- Edit a custom property by double-clicking the property to be edited.

- Save the property by clicking outside the editor.

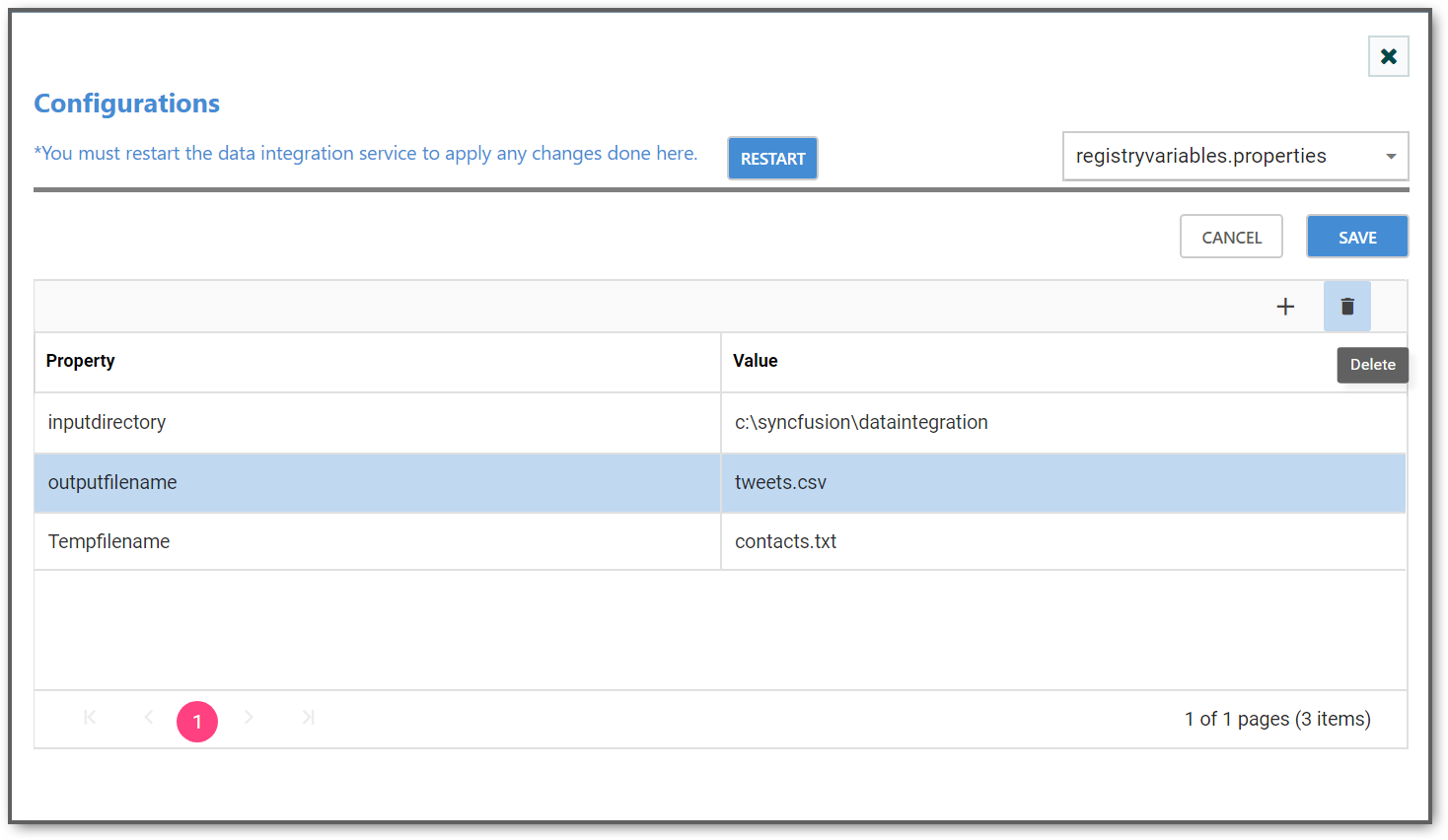

Delete:

- Select the custom property to be deleted and click the delete button.

- Save the change by clicking outside the editor.

You can save the file by clicking the save button, or discard the changes by clicking the cancel button.

After making changes to the file, restart the Data Integration service for the changes to take effect. You can click the restart button to restart the service.

IMPORTANT

You must restart the data integration service to apply any changes done here.

Registry Variables

You can use Data Integration Expression Language to reference FlowFile attributes, compare them to other values, and manipulate their values when you are creating and configuring dataflows.

In addition to using FlowFile attributes, system properties, and environment properties within Expression Language, you can also define custom properties for Expression Language use. Defining custom properties gives you more flexibility in handling and processing dataflows. You can also create custom properties for connection, server, and service properties, for easier dataflow configuration.

To create custom properties for use with Expression Language, identify one or more sets of key/value pairs, and give them to your system administrator.

Data Integration properties have resolution precedence of which you should be aware when creating custom properties:

-

Processor-specific attributes

-

FlowFile properties

-

FlowFile attributes

-

From variable registry:

-

User defined properties (custom properties)

-

System properties

-

Operating System environment variables

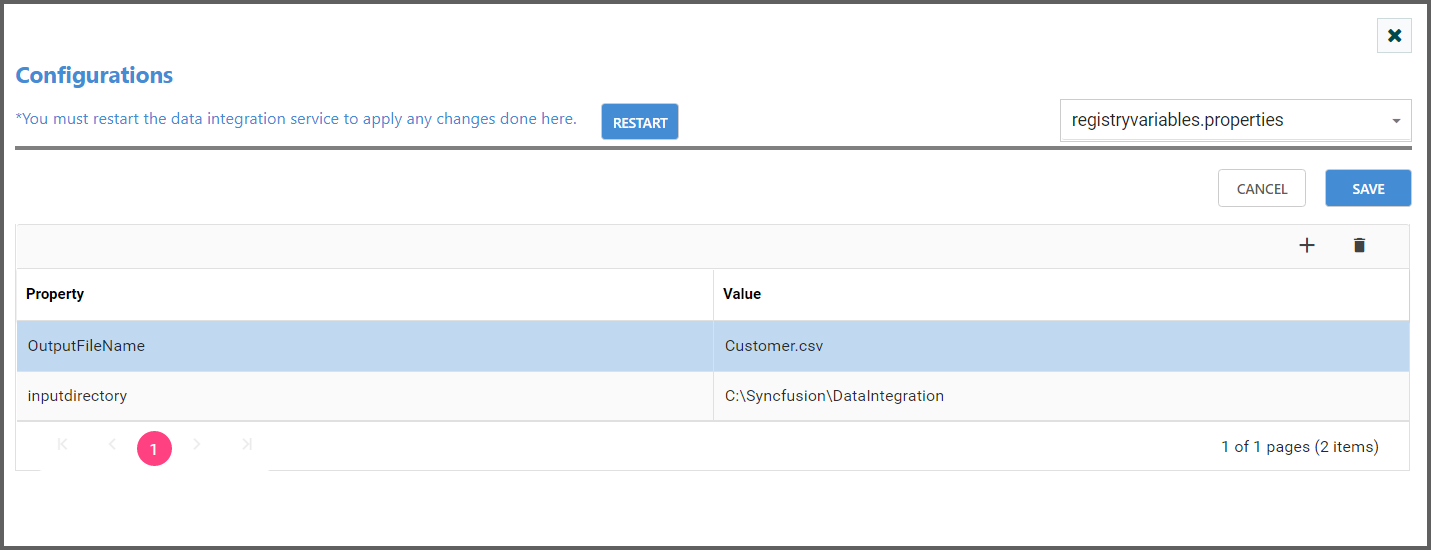

-

To configure custom properties, select the registryvariables.properties file. Here you can add custom properties and their values.

Save the file and restart the Data Integration service once the changes have been made.

For more information on Expression Language, see the Expression Language Guide. For information on how to define custom properties, see the System Administrator’s Guide.

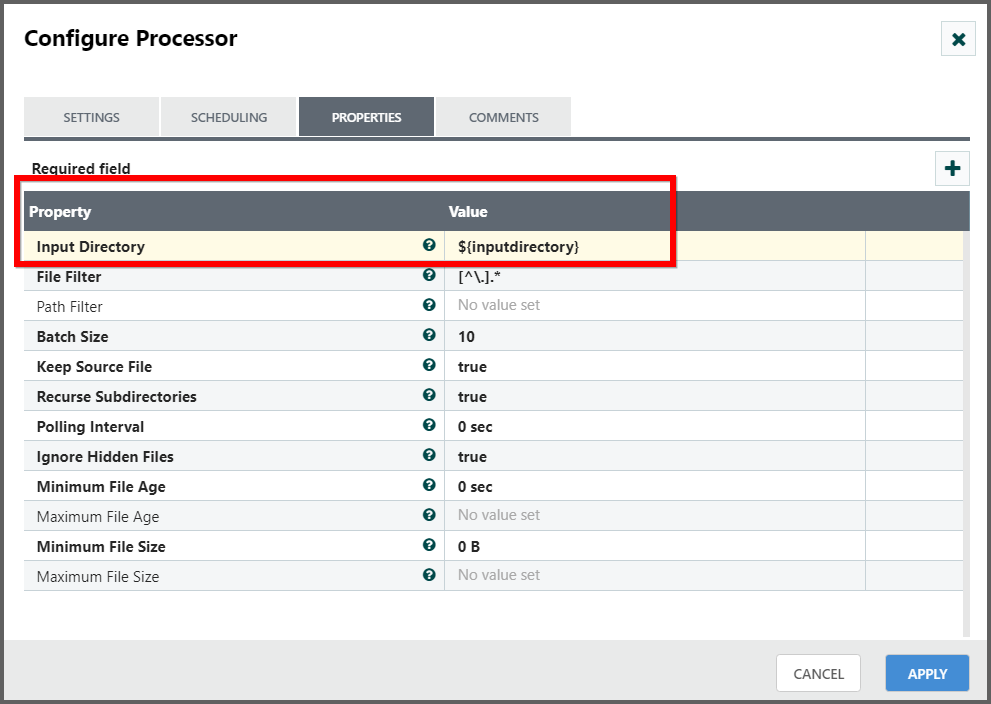

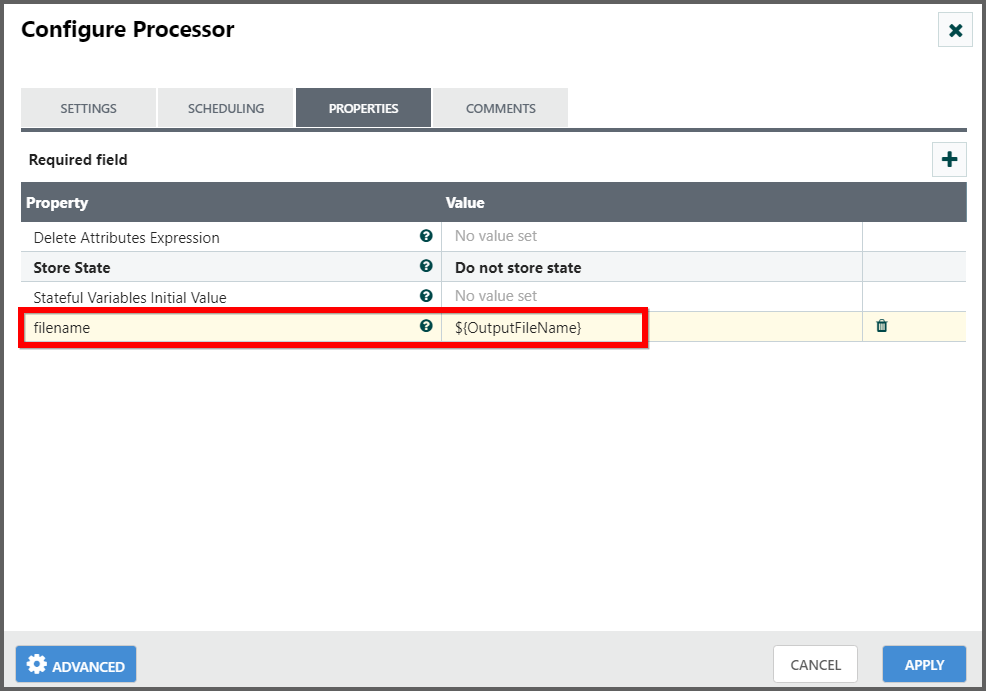

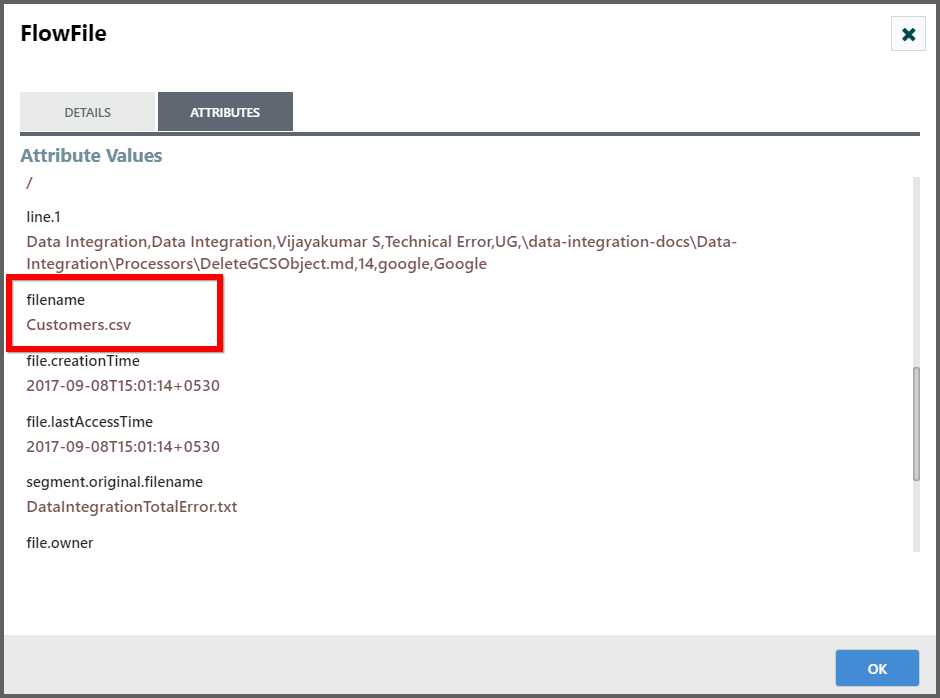

You can reference registry variables through Expression Language in the Data Integration processors. Each of the registry variables below defines a property and a value that processors use through Expression Language.

- inputdirectory=C:\Syncfusion\DataIntegration

- OutputFileName=Customers.csv

Here the inputdirectory registry variable is referenced as ${inputdirectory} in the GetFile processor, which fetches the input file from the specified directory (that is, “C:\Syncfusion\DataIntegration”).

Similarly, the OutputFileName registry variable is referenced as ${OutputFileName} in the UpdateAttribute processor, which assigns the filename to the incoming FlowFile (that is, Customers.csv).
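The lookup behind a `${name}` reference, together with the resolution precedence listed earlier in this section, can be sketched as follows. This is a simplified model rather than the Expression Language engine: it only substitutes plain `${name}` references, it leaves unknown names untouched (the real engine may behave differently), and the `resolve` function is hypothetical. Scopes passed first take precedence over scopes passed later.

```python
import re
from collections import ChainMap


def resolve(text, *scopes):
    """Replace ${name} references using the first scope that defines name."""
    lookup = ChainMap(*scopes)  # earlier scopes shadow later ones

    def substitute(match):
        name = match.group(1)
        # Returning the original text keeps unknown references visible.
        return str(lookup[name]) if name in lookup else match.group(0)

    return re.sub(r"\$\{([^}]+)\}", substitute, text)


# The registry variables from the example above.
registry = {
    "inputdirectory": r"C:\Syncfusion\DataIntegration",
    "OutputFileName": "Customers.csv",
}
```

Calling `resolve("${inputdirectory}", registry)` yields the configured directory. If a FlowFile attribute with the same name as a registry variable is passed as an earlier scope, the attribute value wins, in line with the precedence order.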

Controller Services

Controller Services are available for reporting tasks, processors, and other services to utilize for configuration or task execution. You can use the NiFi UI to add Controller Services for either reporting tasks or dataflows.

Your ability to view and add Controller Services is dependent on the roles and privileges assigned to you. If you do not have access to one or more Controller Services, you are not able to see or access them in the UI. Roles and privileges can be assigned on a global or Controller Service-specific basis.

Controller Services are not reporting task or dataflow specific. You have access to the full set of available Controller Services whether you are adding it for a reporting task or a dataflow.

Adding Controller Services for Reporting Tasks

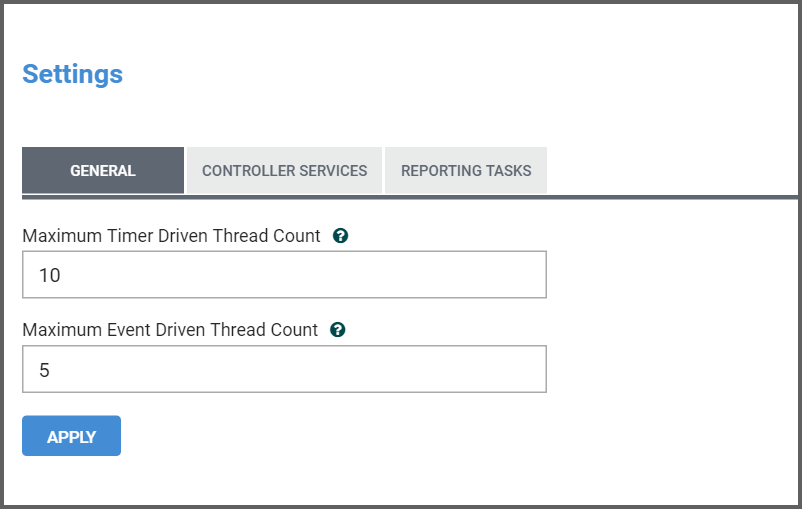

To add a Controller Service for a reporting task, select Controller Settings from the Management tab. This displays the NiFi Settings window.

The NiFi Settings window has three tabs across the top: General, Controller Services, and Reporting Tasks. The General tab is for settings that pertain to general information about the NiFi instance. For example, here, the DFM can provide a unique name for the overall dataflow, as well as comments that describe the flow. Be aware that this information is visible to any other NiFi instance that connects remotely to this instance (using Remote Process Groups, a.k.a., Site-to-Site).

The General tab also provides settings for the overall maximum thread counts of the instance.

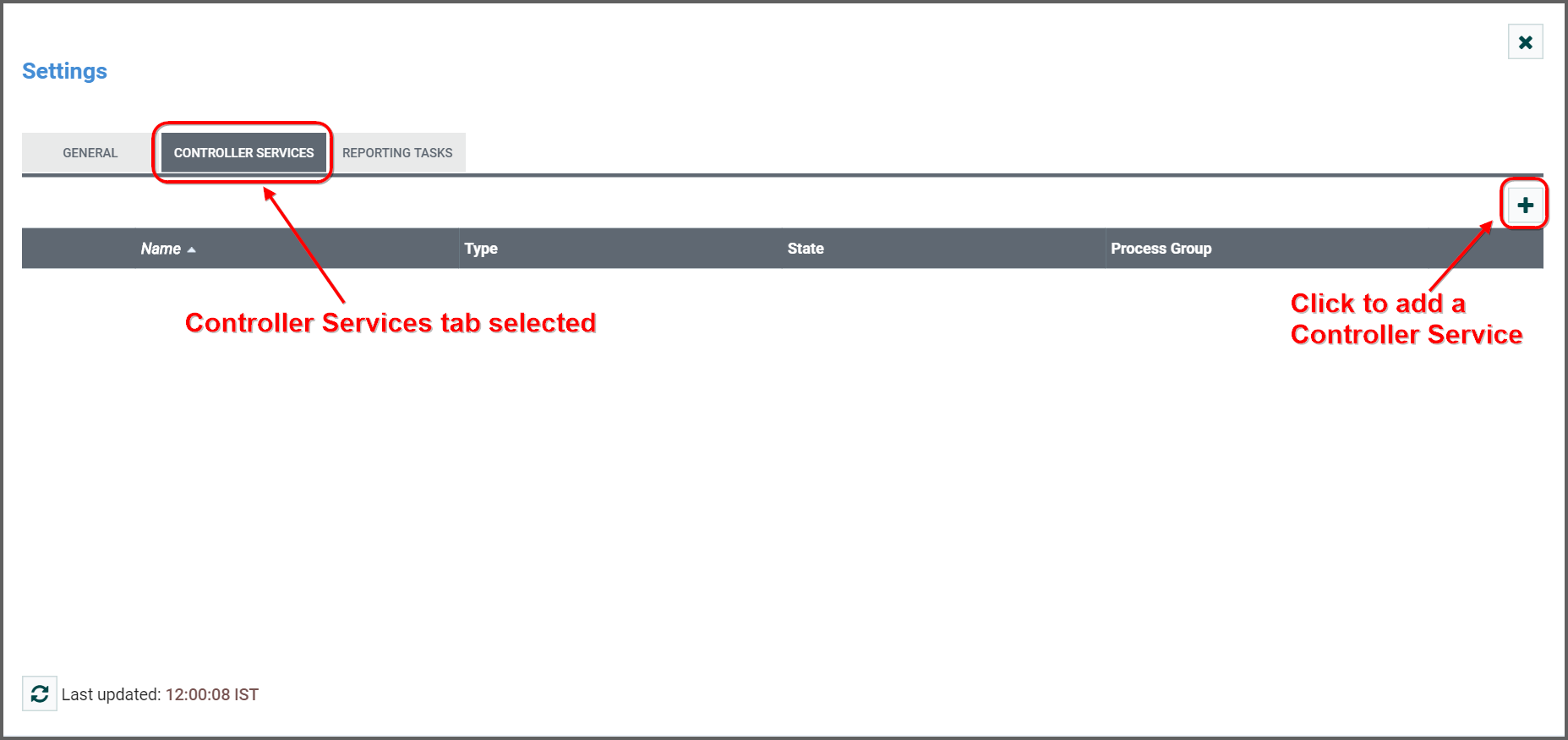

To the right of the General tab is the Controller Services tab. From this tab, the DFM may click the “+” button in the upper-right corner to create a new Controller Service.

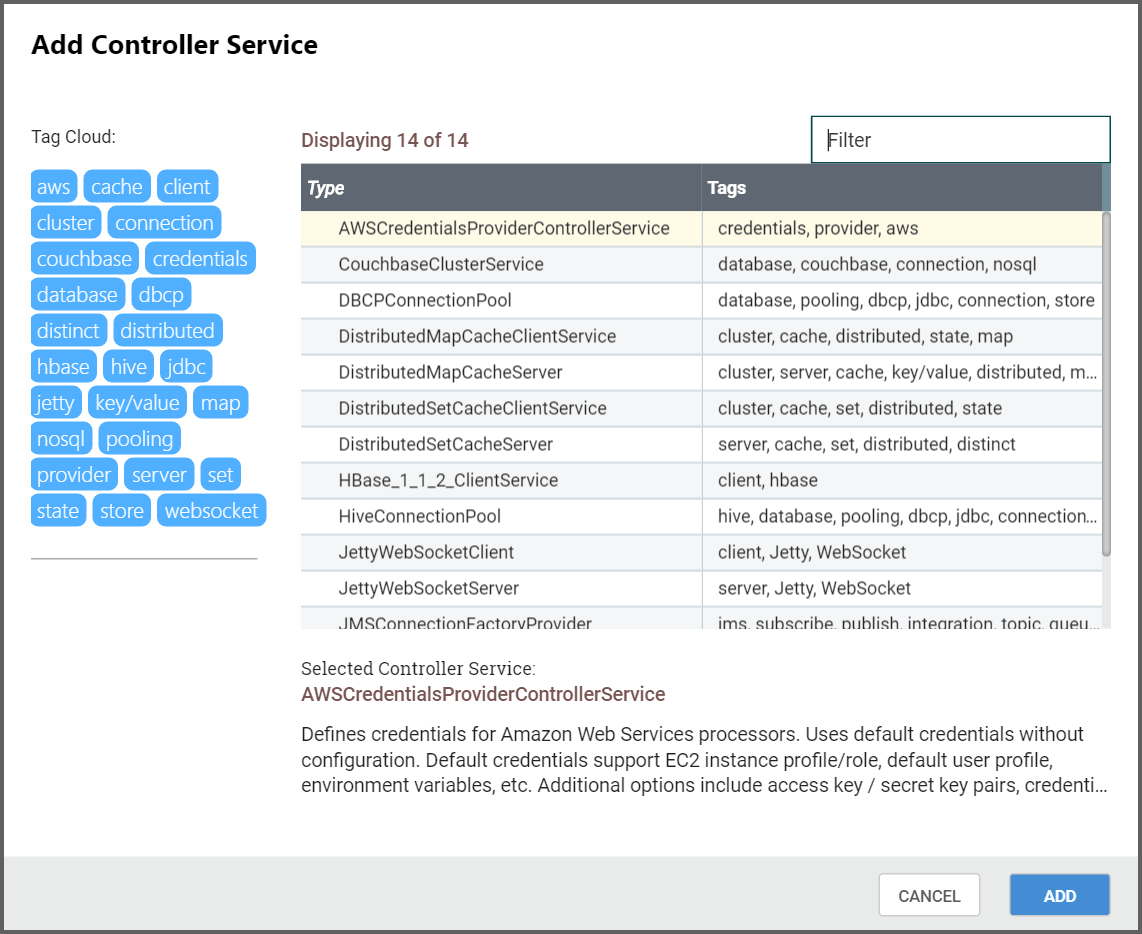

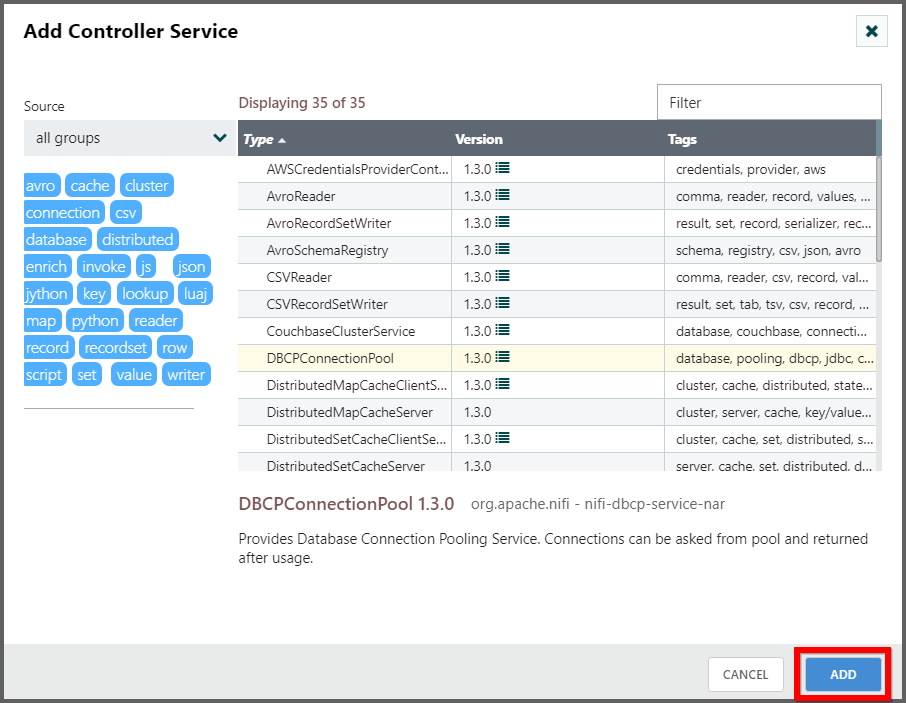

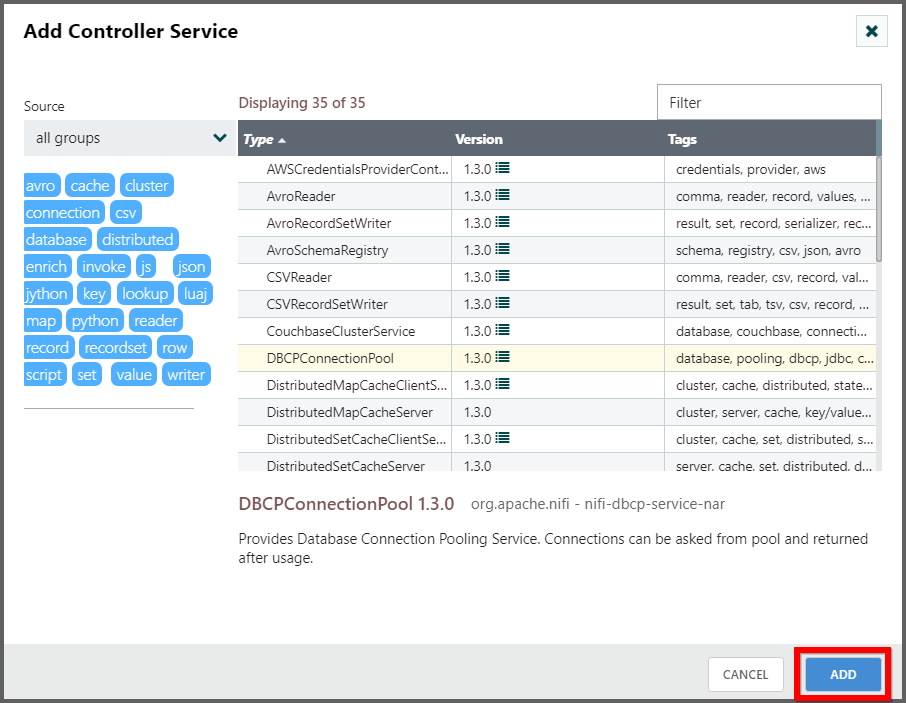

The Add Controller Service window opens. This window is similar to the Add Processor window. It provides a list of the available Controller Services on the right and a tag cloud, showing the most common category tags used for Controller Services, on the left. The DFM may click any tag in the tag cloud in order to narrow down the list of Controller Services to those that fit the categories desired. The DFM may also use the Filter field at the top of the window to search for the desired Controller Service. Upon selecting a Controller Service from the list, the DFM can see a description of the service below. Select the desired controller service and click Add, or simply double-click the name of the service to add it.

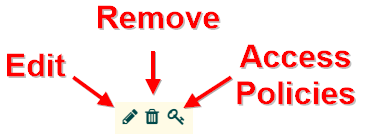

Once you have added a Controller Service, you can configure it by clicking the Edit button in the far-right column. Other buttons in this column include Remove and Access Policies.

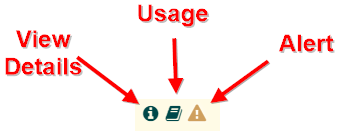

You can obtain information about Controller Services by clicking the Details, Usage, and Alerts buttons in the left-hand column.

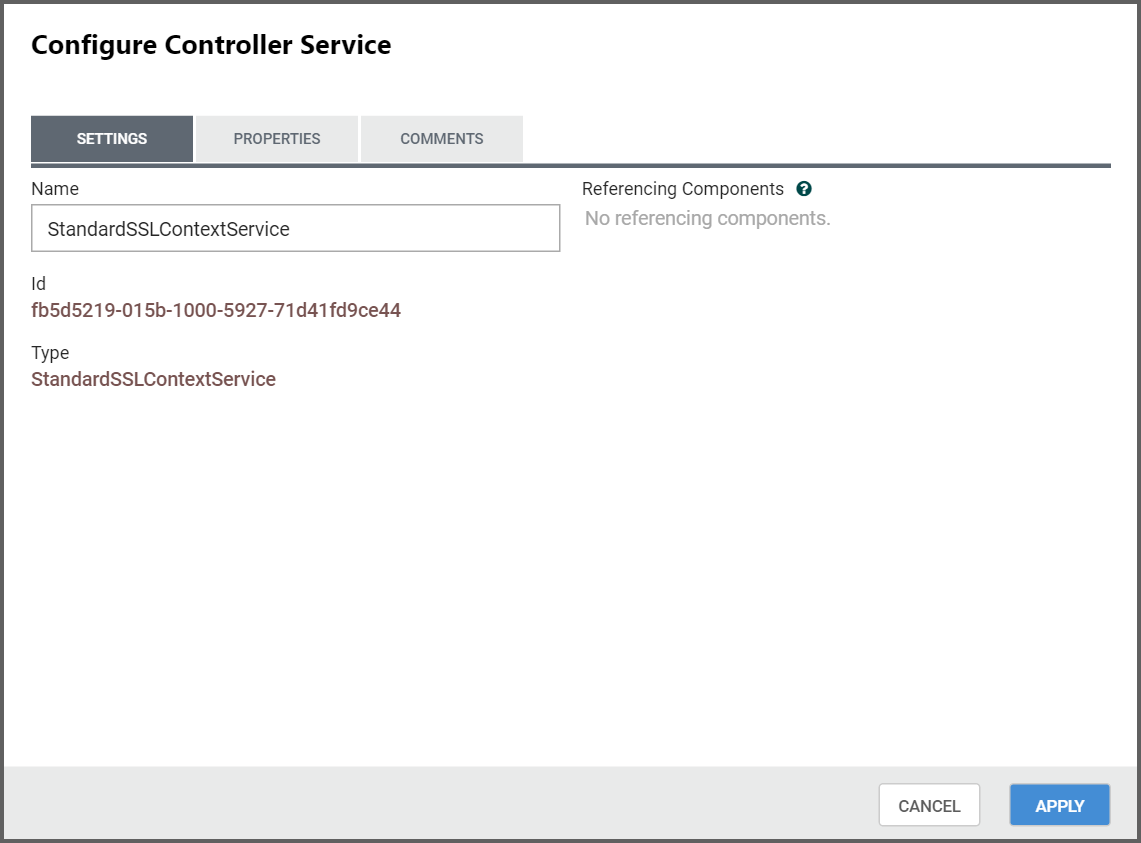

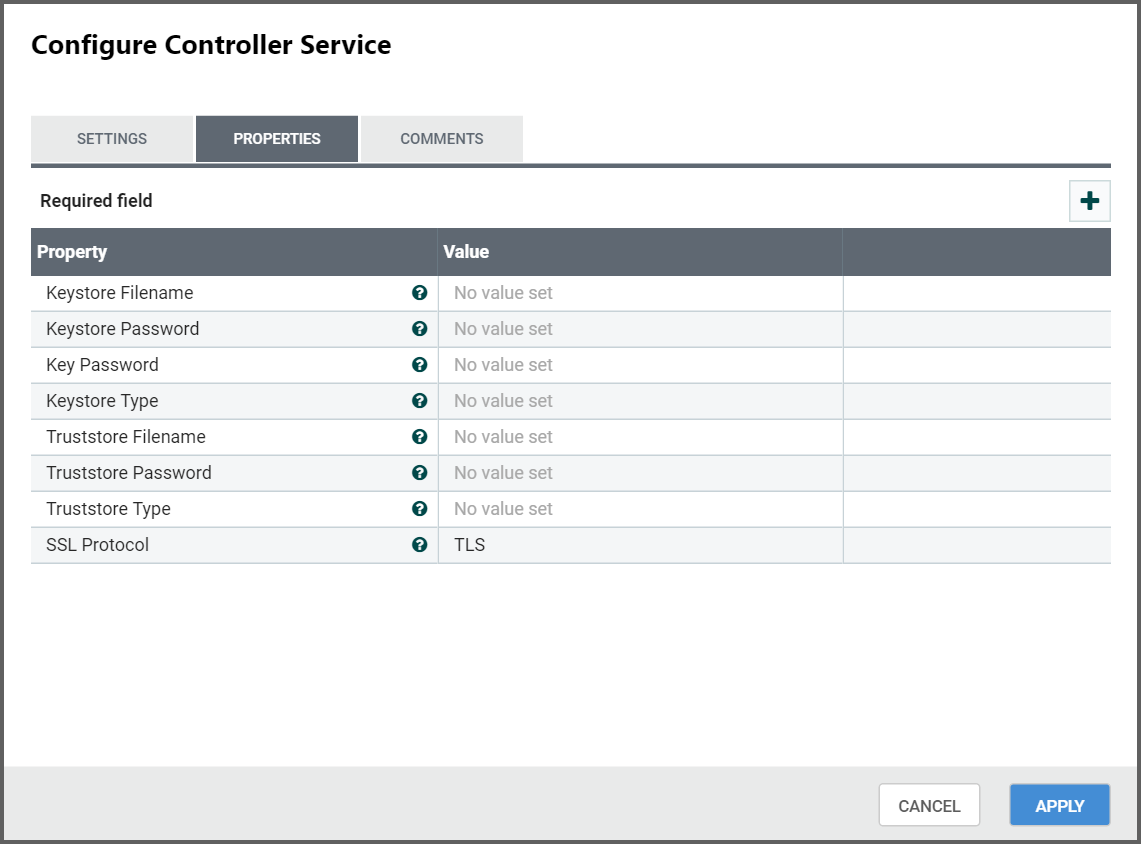

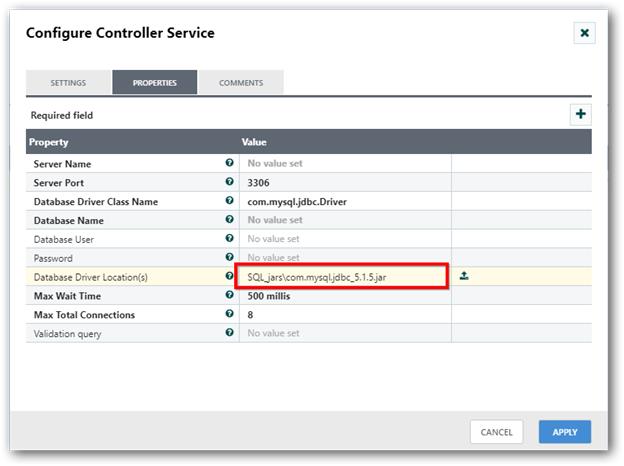

When the DFM clicks the Edit button, a Configure Controller Service window opens. It has three tabs: Settings, Properties, and Comments. This window is similar to the Configure Processor window. The Settings tab provides a place for the DFM to give the Controller Service a unique name (if desired). It also lists the UUID for the service and provides a list of other components (processors or other controller services) that reference the service.

The Properties tab lists the various properties that apply to the particular controller service. As with configuring processors, the DFM may hover the mouse over the question mark icons to see more information about each property.

The Comments tab is just an open-text field, where the DFM may include comments about the service. After configuring a Controller Service, click the Apply button to apply the configuration and close the window, or click the Cancel button to cancel the changes and close the window.

Note that after a Controller Service has been configured, it must be enabled in order to run. Do this using the Enable button in the far-right column of the Controller Services tab of the Controller Settings window. Then, in order to modify an existing/running controller service, the DFM needs to stop/disable it (as well as all referencing processors, reporting tasks, and controller services). Rather than having to hunt down each component that references that controller service, the DFM has the ability to stop/disable them when disabling the controller service in question. Likewise, when enabling a controller service, the DFM has the option to start/enable all referencing processors, reporting tasks, and controller services.

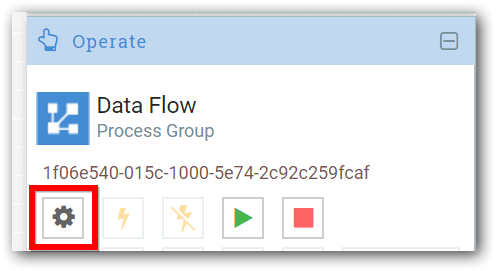

Adding Controller Services for Dataflows

To add a Controller Service for a dataflow, you can either right click a Process Group and select Configure, or click Configure from the Operate Palette. When you click Configure from the Operate Palette with nothing selected on your canvas, you add a Controller Service for your root Process Group. That Controller Service is then available to all nested Process Groups in your dataflow. When you select a Process Group on the canvas and then click Configure from either the Operate Palette or the Process Group context menu, you add a Controller Service only for use with the selected Process Group.

In either case, use the following steps to add a Controller Service:

Step 1: Click Configure, either from the Operate Palette, or from the Process Group context menu.

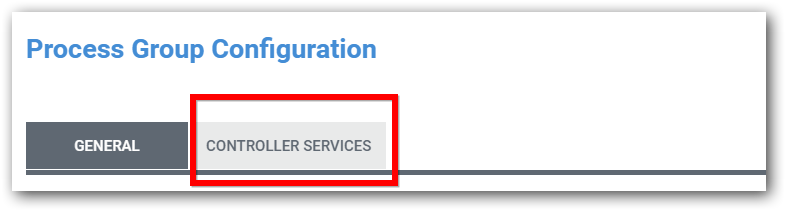

Step 2: From the Process Group Configuration page, select the Controller Services tab.

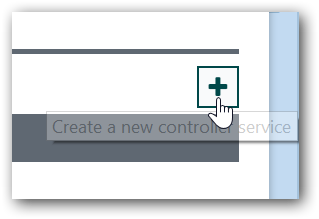

Step 3: Click the Add button to display the Add Controller Service dialog.

Step 4: Select the Controller Service you want to add, and click Add.

Step 5: Perform any necessary Controller Service configuration tasks by clicking the View Details icon in the left-hand column.

To Add DBCPConnectionPool Controller Service:

Step 1: Perform the steps above through Step 4. In the Add Controller Service window, select “DBCPConnectionPool” and click Add.

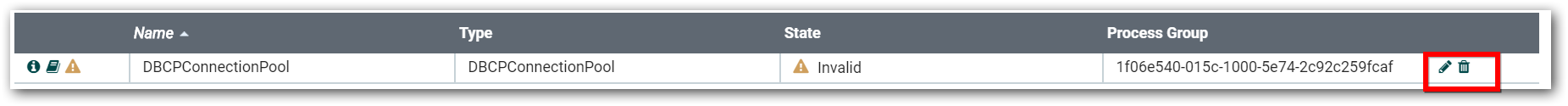

Step 2: In the Process Group Configuration window, click the Edit icon to configure the controller service.

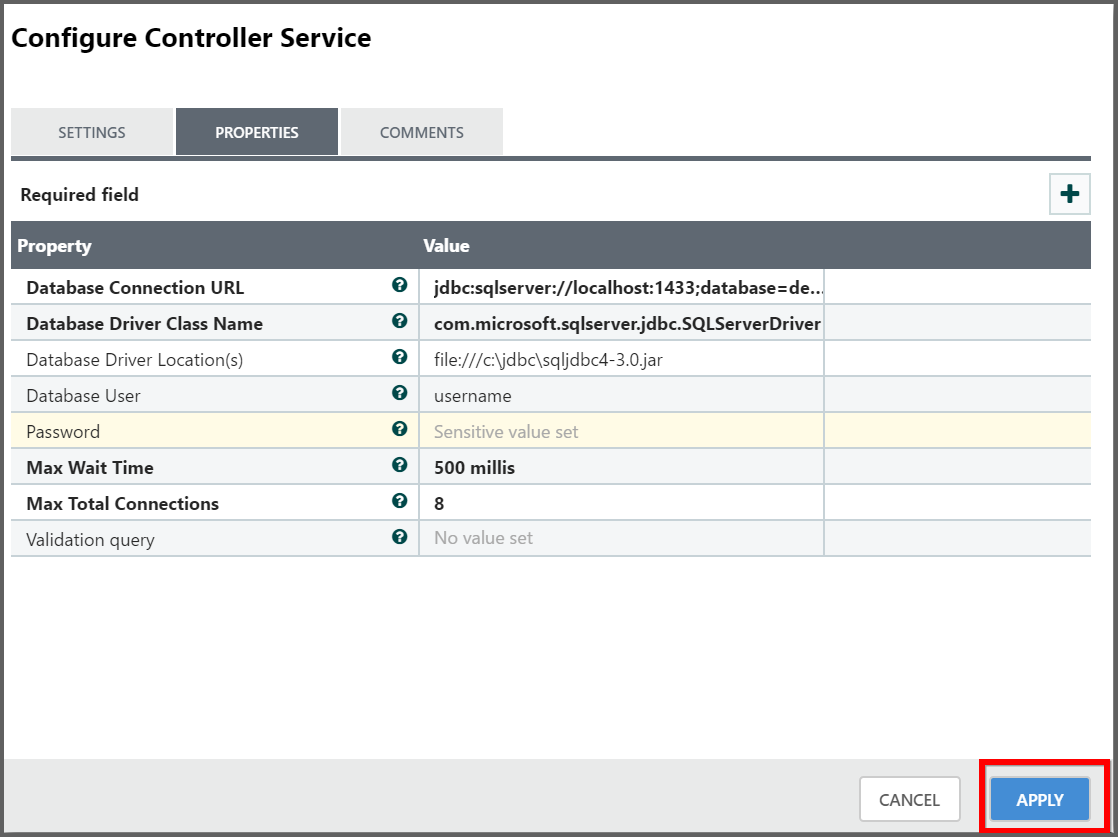

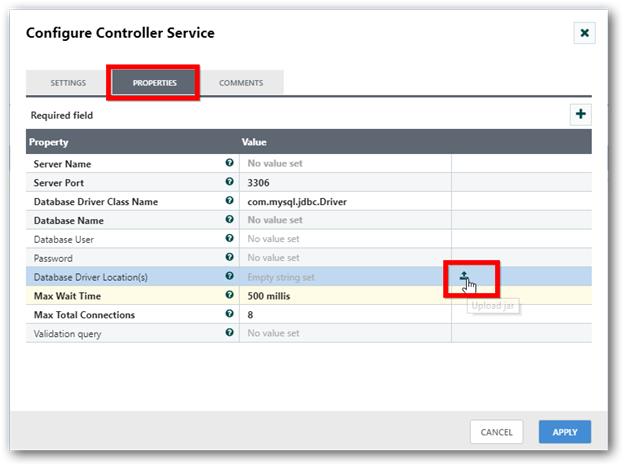

Step 3: Go to the “Properties” tab in the “Configure Controller Service” window and enter the details below to connect to the required data source.

| Field | Data Source | Format | Example |
|---|---|---|---|
| Database Connection URL | SQL Server | jdbc:sqlserver://ipaddressorhostname:portno;database=databasename | jdbc:sqlserver://localhost:1433;database=demo |
| Database Connection URL | Oracle | jdbc:oracle:thin:@{IP address}:portno:{dbname} | jdbc:oracle:thin:@localhost:1521:demo |
| Database Connection URL | MySQL | jdbc:mysql://{IP address}:portno/{dbname} | jdbc:mysql://localhost:3306/demo |
| Database Driver Class Name | SQL Server | com.microsoft.sqlserver.jdbc.SQLServerDriver | com.microsoft.sqlserver.jdbc.SQLServerDriver |
| Database Driver Class Name | Oracle | oracle.jdbc.driver.OracleDriver | oracle.jdbc.driver.OracleDriver |
| Database Driver Class Name | MySQL | com.mysql.jdbc.Driver | com.mysql.jdbc.Driver |
| Database Driver Location(s) | SQL Server | Path URL of the database driver JAR file. Note: Download the JDBC JAR and provide the path to it. Link to download the SQL Server JAR: https://www.microsoft.com/en-in/download/details.aspx?id=11774 | file:///c:\jdbc\sqljdbc4-3.0.jar |
| Database Driver Location(s) | Oracle | Path URL of the database driver JAR file. Note: Download the JDBC JAR and provide the path to it. Link to download the Oracle JAR: http://www.oracle.com/technetwork/apps-tech/jdbc-112010-090769.html | file:///c:\jdbc\ojdbc6.jar |
| Database Driver Location(s) | MySQL | Path URL of the database driver JAR file. Note: Download the JDBC JAR and provide the path to it. Link to download the MySQL JAR: https://mvnrepository.com/artifact/mysql/mysql-connector-java/5.1.18 | file:///c:\jdbc\mysql-connector-java-5.1.18.jar |
| Database User | All | Database user name | Username |
| Password | All | Database password | ****** |
| Max Wait Time | All | The maximum amount of time that the pool will wait (when there are no available connections) for a connection to be returned before failing, or -1 to wait indefinitely. | 500 millis |
| Max Total Connections | All | The maximum number of active connections that can be allocated from this pool at the same time, or negative for no limit. | 8 |

After entering the required details, click Apply.
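As an aid to filling in these fields, the following sketch assembles the connection URL and driver class name for each data source from a host, port, and database name. The helper name and the dictionary keys (`sqlserver`, `oracle`, `mysql`) are hypothetical; the URL shapes follow the formats listed in the table, written with the conventional lowercase `jdbc:` prefix.

```python
# URL formats per data source, following the table above.
URL_TEMPLATES = {
    "sqlserver": "jdbc:sqlserver://{host}:{port};database={database}",
    "oracle": "jdbc:oracle:thin:@{host}:{port}:{database}",
    "mysql": "jdbc:mysql://{host}:{port}/{database}",
}

# Driver class names per data source (Java class names are case-sensitive).
DRIVER_CLASSES = {
    "sqlserver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
    "oracle": "oracle.jdbc.driver.OracleDriver",
    "mysql": "com.mysql.jdbc.Driver",
}


def connection_properties(data_source, host, port, database):
    """Return the two property values to enter for the given data source."""
    url = URL_TEMPLATES[data_source].format(host=host, port=port, database=database)
    return {
        "Database Connection URL": url,
        "Database Driver Class Name": DRIVER_CLASSES[data_source],
    }
```

For example, `connection_properties("mysql", "localhost", 3306, "demo")` produces the MySQL example URL shown in the table.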

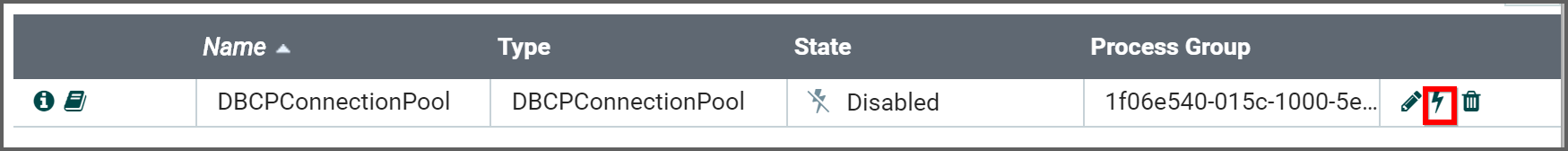

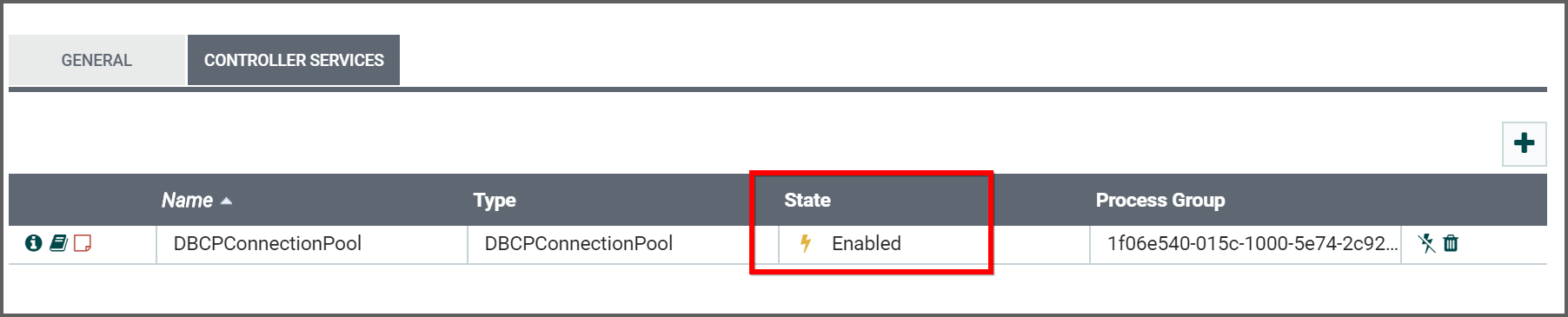

Step 4: Click on the Enable button as shown below.

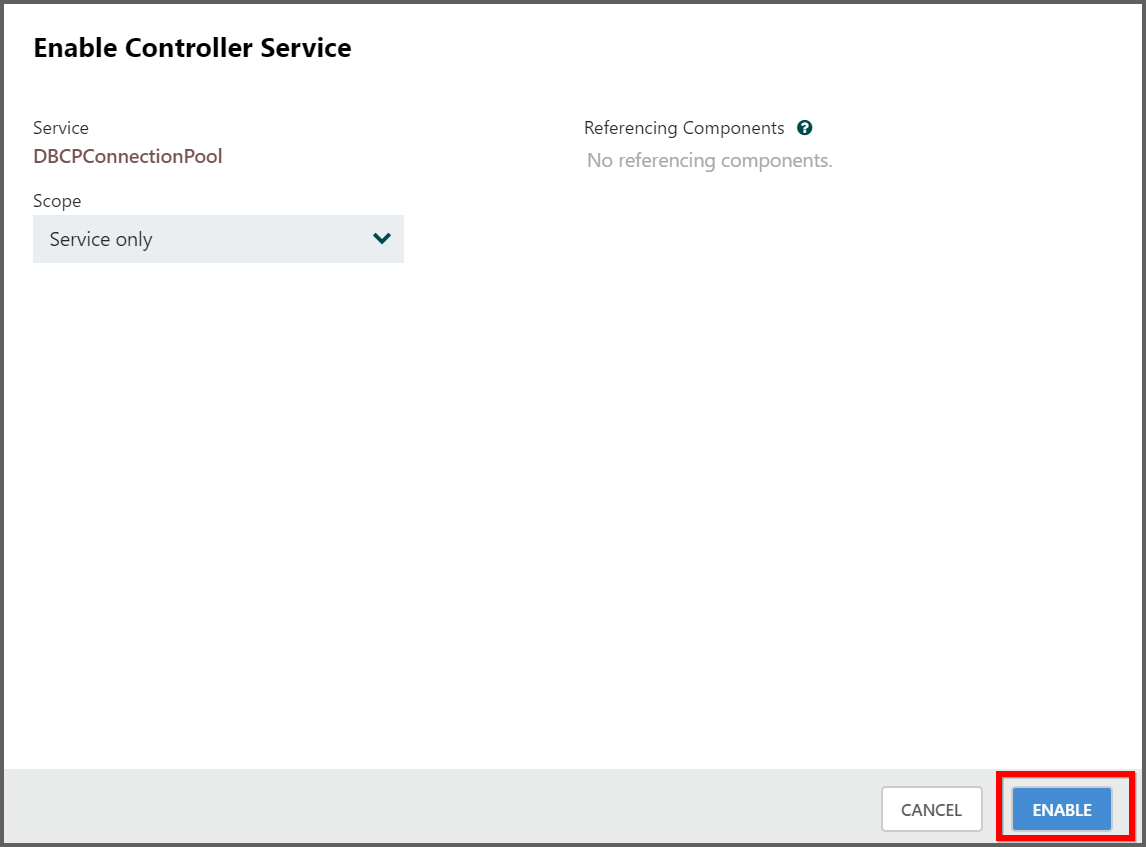

Step 5: After enabling the controller service, close the Process Group Configuration dialog.

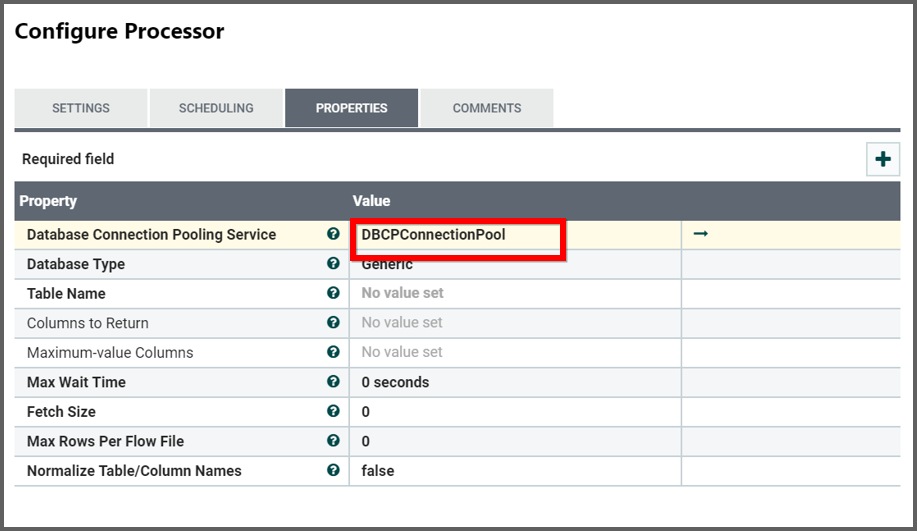

You have now created a “DBCPConnectionPool” for the data source. You can select it in your processors, using the processor’s Configure option, to connect to the required data source.

Browse or upload jar file into database controller services

You must set the database driver location after choosing the database controller service. Data Integration Platform lets you download and upload the driver JAR using the following steps:

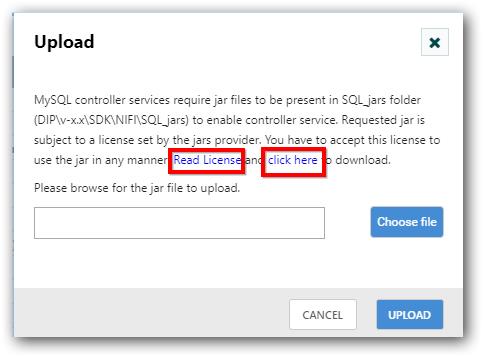

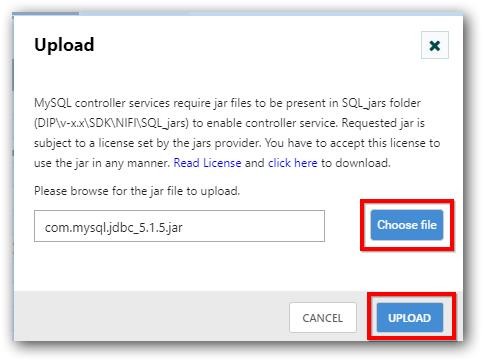

Step 1: Choose the required database controller service and select the upload jar option from the Properties tab.

Step 2: The upload jar option displays a dialog box with the JAR description, license agreement, and download link. Read the license agreement before downloading the JAR. The upload dialog for each controller service shows the corresponding JAR description, license, and download link.

Step 3: Select the choose file option to browse to the downloaded JAR, then click upload to upload it to the server.

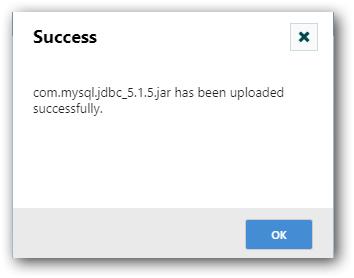

Step 4: Once the JAR is uploaded successfully, the dialog shows a success message with the corresponding JAR name.

Step 5: After the success message, the database driver location is filled in automatically. Fill in the other required fields of the controller service and click Apply.

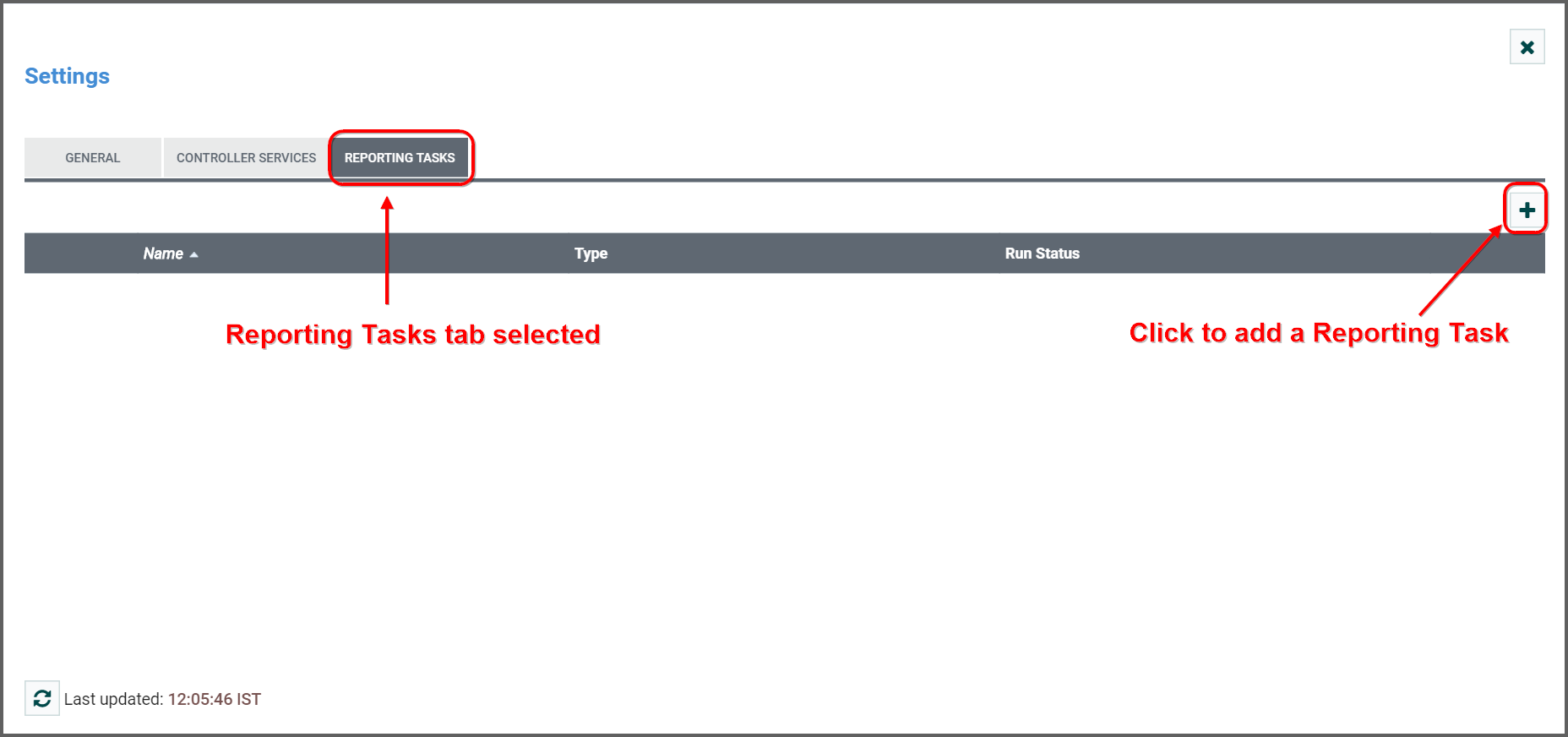

Reporting Tasks

The Reporting Tasks tab behaves similarly to the Controller Services tab. The DFM has the option to add Reporting Tasks and configure them in the same way as Controller Services.

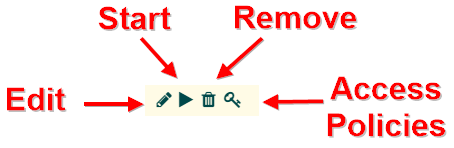

Once a Reporting Task has been added, the DFM may configure it by clicking the Edit (pencil icon) in the far-right column. Other buttons in this column include the Start button, Remove button, and Usage button, which links to the documentation for the particular Reporting Task.

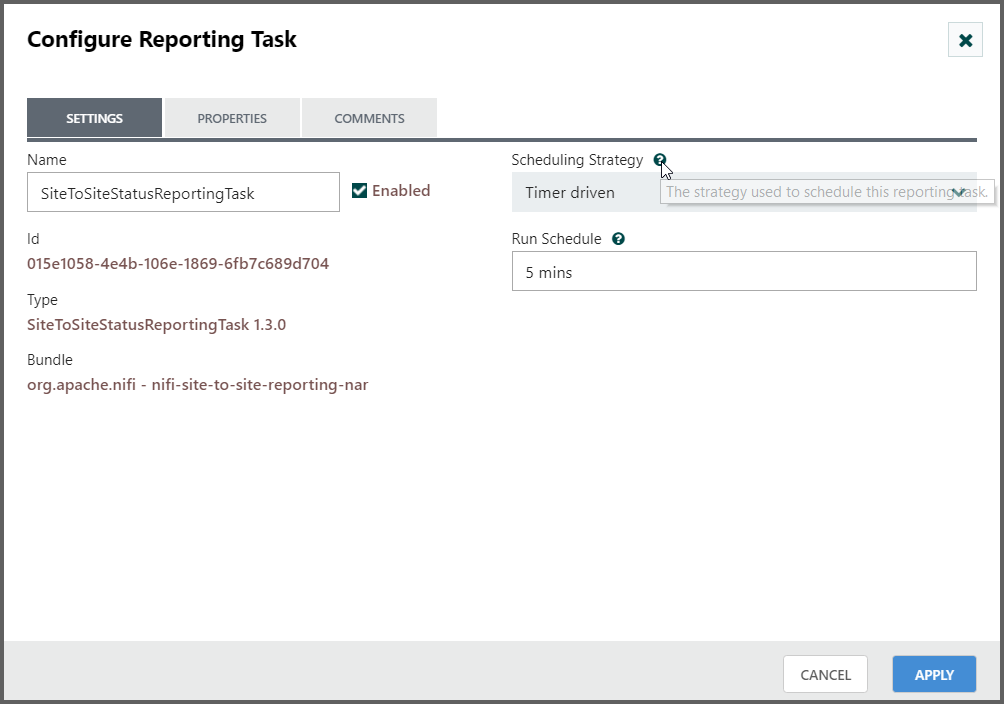

When the DFM clicks the Edit button, a Configure Reporting Task window opens. It has three tabs: Settings, Properties, and Comments. This window is also similar to the Configure Processor window. The Settings tab provides a place for the DFM to give the Reporting Task a unique name (if desired). It also lists a UUID for the Reporting Task and provides settings for the task’s Scheduling Strategy and Run Schedule (similar to the same settings in a processor). The DFM may hover the mouse over the question mark icons to see more information about each setting.

The Properties tab for a Reporting Task lists the properties that may be configured for the task. The DFM may hover the mouse over the question mark icons to see more information about each property.

The Comments tab is just an open-text field, where the DFM may include comments about the task. After configuring the Reporting Task, click the Apply button to apply the configuration and close the window, or click Cancel to cancel the changes and close the window.

When you want to run the Reporting Task, click the Start button in the far-right column of the Reporting Tasks tab.

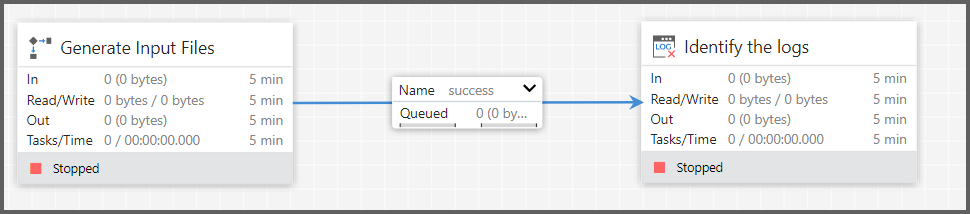

Connecting Components

Once processors and other components have been added to the canvas and configured, the next step is to connect them to one another so that NiFi knows what to do with each FlowFile after it has been processed. This is accomplished by creating a Connection between each component. When the user hovers the mouse over the center of a component, a new Connection icon appears:

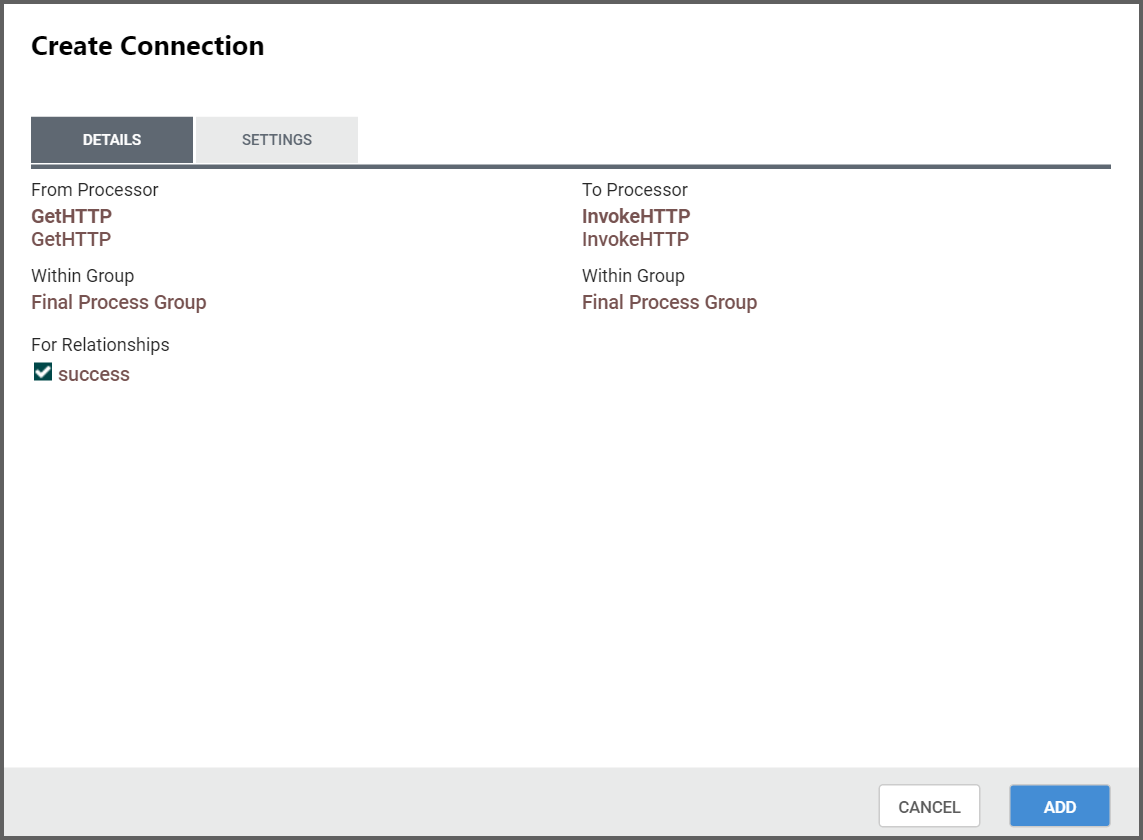

The user drags the Connection bubble from one component to another until the second component is highlighted. When the user releases the mouse, a ‘Create Connection’ dialog appears. This dialog consists of two tabs: ‘Details’ and ‘Settings’. They are discussed in detail below. Note that it is possible to draw a connection so that it loops back on the same processor. This can be useful if the DFM wants the processor to try to re-process FlowFiles if they go down a failure Relationship. To create this type of looping connection, simply drag the connection bubble away and then back to the same processor until it is highlighted. Then release the mouse and the same Create Connection dialog appears. You can configure the connection in the Settings tab.

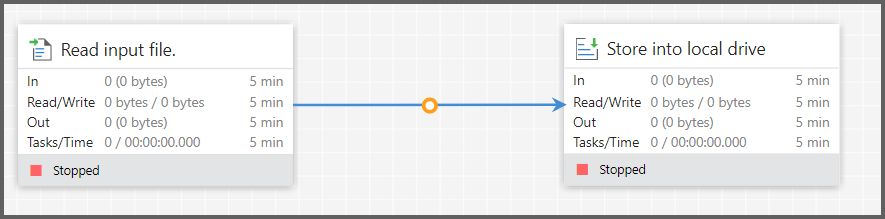

The connection is established between the two processors and is shown in a collapsed state.

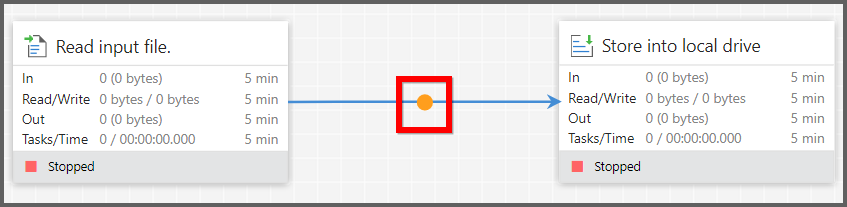

The connection icon turns orange to indicate that the connection contains FlowFiles.

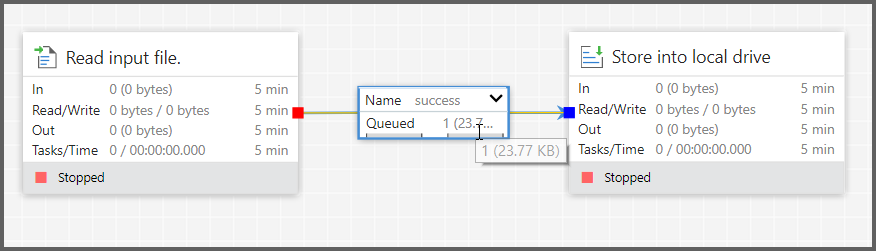

Clicking the connection icon expands the connection area, which shows information about the FlowFiles.

Details Tab

The Details Tab of the Create Connection dialog provides information about the source and destination components, including the component name, the component type, and the Process Group in which the component lives:

Additionally, this tab provides the ability to choose which Relationships should be included in this Connection. At least one Relationship must be selected. If only one Relationship is available, it is automatically selected.

IMPORTANT

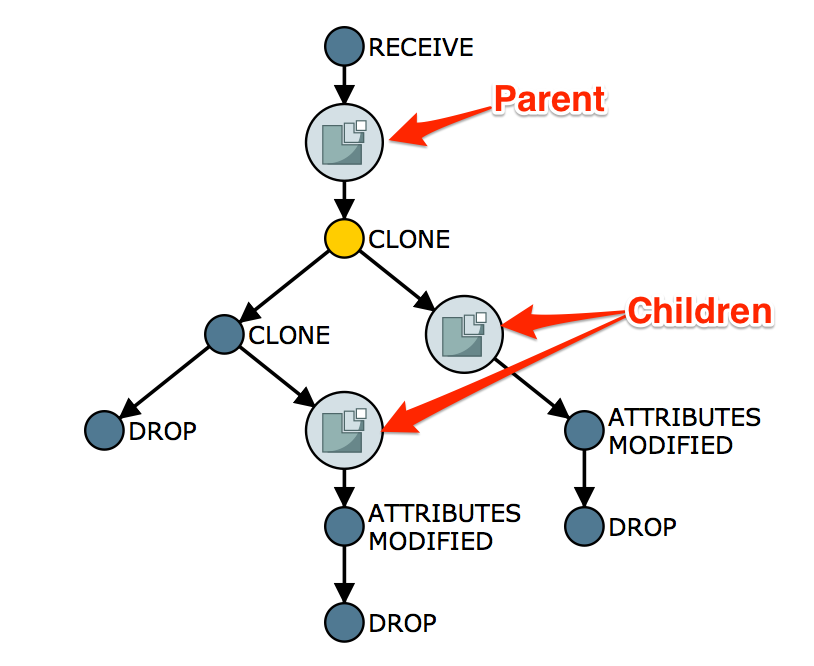

If multiple Connections are added with the same Relationship, any FlowFile that is routed to that Relationship will automatically be ‘cloned’, and a copy will be sent to each of those Connections.
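
The cloning behavior can be illustrated with a minimal Python sketch. It is not NiFi code: the `route` function, the dictionary layout used for a Connection, and the choice to give the original FlowFile to the first matching Connection and deep copies to the rest are all assumptions made for illustration.

```python
import copy


def route(flowfile, relationship, connections):
    """Queue a FlowFile on every connection that includes the relationship.

    Hypothetical model: each connection is a dict with a set of
    "relationships" and a list used as its "queue".
    Returns the number of connections that received the FlowFile.
    """
    targets = [c for c in connections if relationship in c["relationships"]]
    for index, connection in enumerate(targets):
        # Assumption: the first target gets the original, the others get clones.
        item = flowfile if index == 0 else copy.deepcopy(flowfile)
        connection["queue"].append(item)
    return len(targets)


# Two connections share the "success" relationship; one handles "failure".
to_archive = {"relationships": {"success"}, "queue": []}
to_publish = {"relationships": {"success"}, "queue": []}
to_retry = {"relationships": {"failure"}, "queue": []}

flowfile = {"attributes": {"filename": "a.txt"}, "content": b"hello"}
delivered = route(flowfile, "success", [to_archive, to_publish, to_retry])
```

In this sketch, both `to_archive` and `to_publish` end up with one FlowFile each, while `to_retry` stays empty.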

Settings Tab

The Settings Tab provides the ability to configure the Connection’s name, FlowFile expiration, Back Pressure thresholds and Prioritization.

The Connection name is optional. If not specified, the name shown for the Connection will be the names of the Relationships that are active for the Connection.

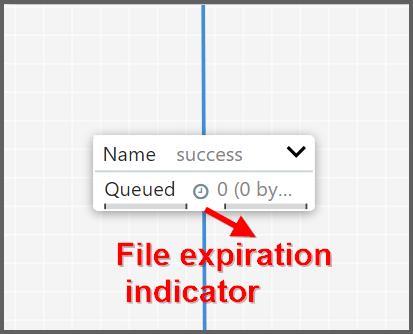

FlowFile expiration is a concept by which data that cannot be processed in a timely fashion can be automatically removed from the flow. This is useful, for example, when the volume of data is expected to exceed the volume that can be sent to a remote site. In this case, the expiration can be used in conjunction with Prioritizers to ensure that the highest priority data is processed first and then anything that cannot be processed within a certain time period (one hour, for example) can be dropped. The expiration period is based on the time that the data entered the NiFi instance. In other words, if the file expiration on a given connection is set to 1 hour, and a file that has been in the NiFi instance for one hour reaches that connection, it will expire. The default value of 0 sec indicates that the data will never expire. When a file expiration other than 0 sec is set, a small clock icon appears on the connection label, so the DFM can see it at-a-glance when looking at a flow on the canvas.
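
The expiration rule can be sketched in a few lines of Python. This is only an illustration of the rule as described above, not NiFi's implementation: the function name `is_expired`, the use of seconds as the unit, and the treatment of the exact boundary (a FlowFile that is exactly as old as the limit counts as expired) are assumptions.

```python
def is_expired(entered_nifi_at, now, expiration_seconds):
    """Decide whether a FlowFile reaching a connection should expire.

    entered_nifi_at and now are timestamps in seconds. The age is measured
    from when the data entered the NiFi instance, not from when it reached
    this particular connection.
    """
    if expiration_seconds == 0:
        # The default of 0 sec means the data never expires.
        return False
    return now - entered_nifi_at >= expiration_seconds


ONE_HOUR = 3600
fresh = is_expired(entered_nifi_at=0, now=1800, expiration_seconds=ONE_HOUR)
stale = is_expired(entered_nifi_at=0, now=3600, expiration_seconds=ONE_HOUR)
never = is_expired(entered_nifi_at=0, now=10**9, expiration_seconds=0)
```

Here `fresh` is `False`, `stale` is `True`, and `never` is `False` because expiration is disabled.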

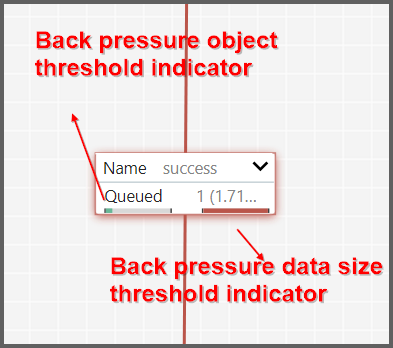

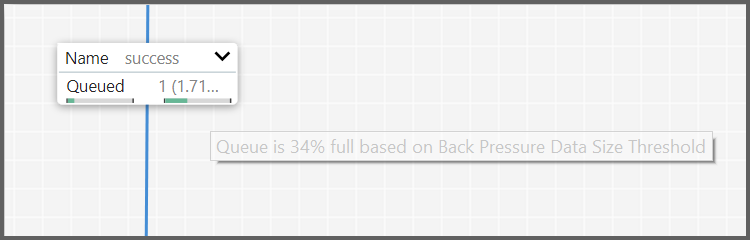

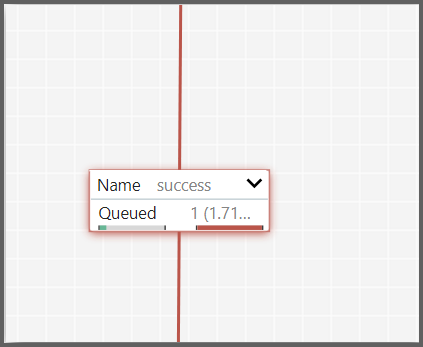

NiFi provides two configuration elements for Back Pressure. These thresholds indicate how much data should be allowed to exist in the queue before the component that is the source of the Connection is no longer scheduled to run. This allows the system to avoid being overrun with data. The first option provided is the “Back pressure object threshold.” This is the number of FlowFiles that can be in the queue before back pressure is applied. The second configuration option is the “Back pressure data size threshold.” This specifies the maximum amount of data (in size) that should be queued up before applying back pressure. This value is configured by entering a number followed by a data size (B for bytes, KB for kilobytes, MB for megabytes, GB for gigabytes, or TB for terabytes). When back pressure is enabled, small progress bars appear on the connection label, so the DFM can see it at-a-glance when looking at a flow on the canvas. The progress bars change color based on the queue percentage: Green (0-60%), Yellow (61-85%) and Red (86-100%).

Hovering your mouse over a bar displays the exact percentage.

When the queue is completely full, the Connection is highlighted in red.
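
The two thresholds and the progress bar colors can be modeled with a short Python sketch. It is illustrative only: all function names are hypothetical, the threshold values in the usage lines are example values, and the use of binary multiples (1 KB = 1024 bytes) is an assumption that this guide does not confirm.

```python
import re

# Assumption: binary multiples; the guide does not state the base.
UNIT_BYTES = {"B": 1, "KB": 1024, "MB": 1024**2, "GB": 1024**3, "TB": 1024**4}


def parse_data_size(text):
    """Convert a value such as "1 GB" into a number of bytes."""
    match = re.fullmatch(r"\s*(\d+)\s*(B|KB|MB|GB|TB)\s*", text, re.IGNORECASE)
    if match is None:
        raise ValueError(f"not a data size: {text!r}")
    return int(match.group(1)) * UNIT_BYTES[match.group(2).upper()]


def queue_percent(queued, threshold):
    """Whole-number fill percentage of one threshold, capped at 100."""
    return min(100, queued * 100 // threshold)


def bar_color(percent):
    """Map a fill percentage to the color bands listed above."""
    if percent <= 60:
        return "green"
    if percent <= 85:
        return "yellow"
    return "red"


def back_pressure_applied(count, size_bytes, object_threshold, size_threshold):
    """Back pressure applies once either threshold is reached."""
    return count >= object_threshold or size_bytes >= size_threshold


size_limit = parse_data_size("1 GB")
color = bar_color(queue_percent(7000, 10000))
blocked = back_pressure_applied(10000, 0, 10000, size_limit)
```

With 7,000 of 10,000 objects queued, the object bar in this sketch would be yellow, and the source component stops being scheduled once the count reaches 10,000.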

The right-hand side of the tab provides the ability to prioritize the data in the queue so that higher priority data is processed first. Prioritizers can be dragged from the top (‘Available prioritizers’) to the bottom (‘Selected prioritizers’). Multiple prioritizers can be selected. The prioritizer that is at the top of the ‘Selected prioritizers’ list is the highest priority. If two FlowFiles have the same value according to this prioritizer, the second prioritizer will determine which FlowFile to process first, and so on. If a prioritizer is no longer desired, it can then be dragged from the ‘Selected prioritizers’ list to the ‘Available prioritizers’ list.

The following prioritizers are available:

- FirstInFirstOutPrioritizer: Given two FlowFiles, the one that reached the connection first will be processed first.
- NewestFlowFileFirstPrioritizer: Given two FlowFiles, the one that is newest in the dataflow will be processed first.
- OldestFlowFileFirstPrioritizer: Given two FlowFiles, the one that is oldest in the dataflow will be processed first. This is the default scheme that is used if no prioritizers are selected.
- PriorityAttributePrioritizer: Given two FlowFiles that both have a “priority” attribute, the one that has the highest priority value will be processed first. Note that an UpdateAttribute processor should be used to add the “priority” attribute to the FlowFiles before they reach a connection that has this prioritizer set. Values for the “priority” attribute may be alphanumeric, where “a” is a higher priority than “z”, and “1” is a higher priority than “9”, for example.
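
The way several selected prioritizers combine can be sketched as a chain of comparators, where a later prioritizer is consulted only when the earlier ones report a tie. The Python below is a simplified model, not NiFi's implementation: the dictionary layout of a FlowFile, the `queued_at` field, and the plain string comparison of the “priority” attribute are assumptions, and NiFi's actual comparison rules may differ.

```python
from functools import cmp_to_key


def _cmp(a, b):
    """Return -1, 0 or 1, like a classic comparator."""
    return (a > b) - (a < b)


def first_in_first_out(a, b):
    # The FlowFile that reached the connection first sorts first.
    return _cmp(a["queued_at"], b["queued_at"])


def priority_attribute(a, b):
    # Assumption: plain string comparison, so "1" sorts before "9"
    # and "a" sorts before "z".
    return _cmp(a["attributes"]["priority"], b["attributes"]["priority"])


def chain(*prioritizers):
    """Combine prioritizers; the first one listed has the highest priority."""
    def compare(a, b):
        for prioritizer in prioritizers:
            result = prioritizer(a, b)
            if result != 0:
                return result
        return 0
    return compare


queue = [
    {"id": "x", "queued_at": 1, "attributes": {"priority": "2"}},
    {"id": "y", "queued_at": 5, "attributes": {"priority": "1"}},
    {"id": "z", "queued_at": 3, "attributes": {"priority": "1"}},
]
selected = chain(priority_attribute, first_in_first_out)
ordered = [f["id"] for f in sorted(queue, key=cmp_to_key(selected))]
```

In this example `y` and `z` tie on priority, so the second prioritizer decides between them and `z` is processed before `y`; `x` comes last.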

After a connection has been drawn between two components, the connection’s configuration may be changed, and the connection may be moved to a new destination; however, the processors on either side of the connection must be stopped before a configuration or destination change may be made.

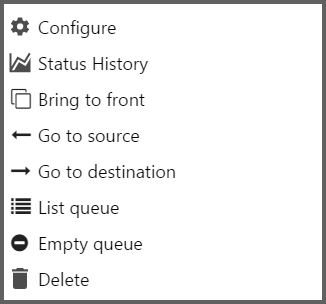

To change a connection’s configuration or interact with the connection in other ways, right-click on the connection to open the connection context menu.

The following options are available:

- Configure: This option allows the user to change the configuration of the connection.
- Status History: This option opens a graphical representation of the connection’s statistical information over time.
- Bring to front: This option brings the connection to the front of the canvas if something else (such as another connection) is overlapping it.
- Go to source: This option can be useful if there is a long distance between the connection’s source and destination components on the canvas. By clicking this option, the view of the canvas will jump to the source of the connection.
- Go to destination: Similar to the “Go to source” option, this option changes the view to the destination component on the canvas and can be useful if there is a long distance between two connected components.
- List queue: This option lists the queue of FlowFiles that may be waiting to be processed.
- Empty queue: This option allows the DFM to clear the queue of FlowFiles that may be waiting to be processed. This option can be especially useful during testing, when the DFM is not concerned about deleting data from the queue. When this option is selected, users must confirm that they want to delete the data in the queue.
- Delete: This option allows the DFM to delete a connection between two components. Note that the components on both sides of the connection must be stopped and the connection must be empty before it can be deleted.

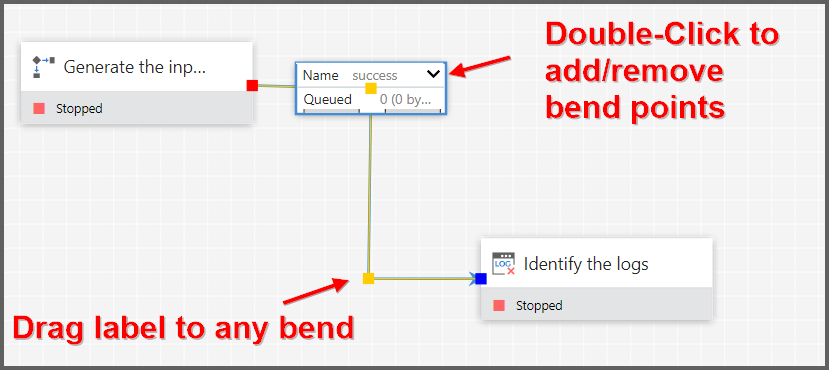

Bending Connections

To add a bend point (or elbow) to an existing connection, simply double-click on the connection in the spot where you want the bend point to be. Then, you can use the mouse to grab the bend point and drag it so that the connection is bent in the desired way. You can add as many bend points as you want. You can also use the mouse to drag and move the label on the connection to any existing bend point. To remove a bend point, simply double-click it again.

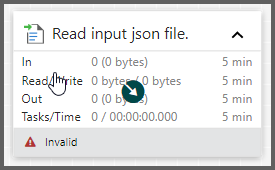

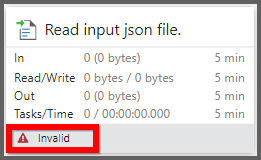

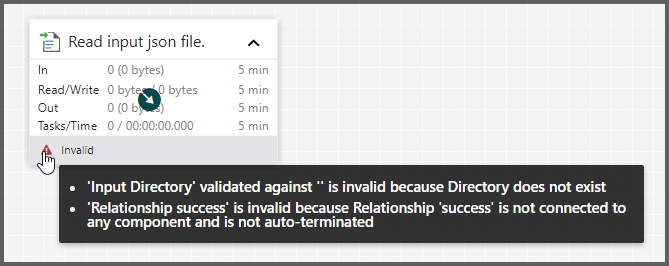

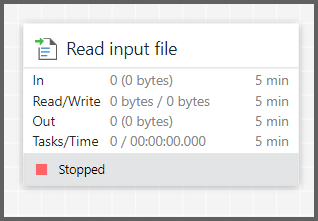

Processor Validation

Before trying to start a Processor, it’s important to make sure that the Processor’s configuration is valid. A status indicator is shown in the bottom-left of the Processor. If the Processor is invalid, the indicator will show a yellow Warning indicator with an exclamation mark indicating that there is a problem:

In this case, hovering over the indicator icon with the mouse will provide a tooltip showing all of the validation errors for the Processor.

Once all of the validation errors have been addressed, the status indicator will change to a Stop icon, indicating that the Processor is valid and ready to be started but currently is not running:

Site-to-Site

When sending data from one instance of NiFi to another, there are many different protocols that can be used. The preferred protocol, though, is the NiFi Site-to-Site Protocol. Site-to-Site makes it easy to securely and efficiently transfer data to/from nodes in one NiFi instance or data producing application to nodes in another NiFi instance or other consuming application.

Using Site-to-Site provides the following benefits:

- Easy to configure
  - After entering the URL of the remote NiFi instance, the available ports (endpoints) are automatically discovered and provided in a drop-down list.
- Secure
  - Site-to-Site optionally makes use of Certificates in order to encrypt data and provide authentication and authorization. Each port can be configured to allow only specific users, and only those users will be able to see that the port even exists. For information on configuring the Certificates, see the [Security Configuration] section of the [Admin Guide].
- Scalable
  - As nodes in the remote cluster change, those changes are automatically detected and data is scaled out across all nodes in the cluster.
- Efficient
  - Site-to-Site allows batches of FlowFiles to be sent at once in order to avoid the overhead of establishing connections and making multiple round-trip requests between peers.
- Reliable
  - Checksums are automatically produced by both the sender and receiver and compared after the data has been transmitted, in order to ensure that no corruption has occurred. If the checksums don’t match, the transaction will simply be canceled and tried again.
- Automatically load balanced
  - As nodes come online or drop out of the remote cluster, or a node’s load becomes heavier or lighter, the amount of data that is directed to that node will automatically be adjusted.
- FlowFiles maintain attributes
  - When a FlowFile is transferred over this protocol, all of the FlowFile’s attributes are automatically transferred with it. This can be very advantageous in many situations, as all of the context and enrichment that has been determined by one instance of NiFi travels with the data, making for easy routing of the data and allowing users to easily inspect the data.
- Adaptable
  - As new technologies and ideas emerge, the protocol for handling Site-to-Site communications is able to change with them. When a connection is made to a remote NiFi instance, a handshake is performed in order to negotiate which protocol and which version of the protocol will be used. This allows new capabilities to be added while still maintaining backward compatibility with all older instances. Additionally, if a vulnerability or deficiency is ever discovered in a protocol, it allows a newer version of NiFi to forbid communication over the compromised versions of the protocol.
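
The checksum comparison described under “Reliable” can be illustrated with a small Python sketch. It is not the Site-to-Site implementation: the function names are hypothetical, and CRC32 (via Python's `zlib.crc32`) is used here only as an example checksum, since this guide does not name the algorithm.

```python
import zlib


def checksum(contents):
    """Running CRC32 over a batch of FlowFile contents (a list of bytes)."""
    crc = 0
    for content in contents:
        crc = zlib.crc32(content, crc)
    return crc


def transaction_confirmed(sent, received):
    """Sender and receiver each compute a checksum; the batch is accepted
    only if both values match. Otherwise the transaction would be canceled
    and tried again."""
    return checksum(sent) == checksum(received)


batch = [b"first flowfile", b"second flowfile"]
intact = transaction_confirmed(batch, [b"first flowfile", b"second flowfile"])
corrupted = transaction_confirmed(batch, [b"first flowfile", b"second flowfilE"])
```

In this sketch `intact` is `True`, while a single changed byte makes `corrupted` evaluate to `False`.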

Site-to-Site is a protocol for transferring data between two NiFi instances. Either end can be a standalone NiFi or a NiFi cluster. In this section, the NiFi instance that initiates the communication is called the Site-to-Site client NiFi instance, and the other end is called the Site-to-Site server NiFi instance, to clarify what configuration is needed on each NiFi instance.

A NiFi instance can be both a client and a server for the Site-to-Site protocol; however, it can only be a client or a server within a specific Site-to-Site communication. For example, suppose there are three NiFi instances A, B and C, where A pushes data to B, and B pulls data from C: A --push--> B <--pull-- C. Then B is a server in the communication between A and B, and also a client in the communication between B and C.

It is important to understand which NiFi instance will be the client or server in order to design your data flow, and configure each instance accordingly. Here is a summary of what components run on which side based on data flow direction:

- Push: a client sends data to a Remote Process Group, the server receives it with an Input Port
- Pull: a client receives data from a Remote Process Group, the server sends data through an Output Port
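
The client and server roles in the A, B, C example can be worked through with a short Python sketch. The names `COMPONENTS`, `links` and `roles` are hypothetical and exist only to restate the summary above as data.

```python
# Which component each side uses, by data flow direction (from the summary above).
COMPONENTS = {
    "push": {"client": "Remote Process Group", "server": "Input Port"},
    "pull": {"client": "Remote Process Group", "server": "Output Port"},
}

# Each link is (client, server, direction): the instance that initiates the
# communication is the client, regardless of which way the data moves.
links = [("A", "B", "push"), ("B", "C", "pull")]

roles = {}
for client, server, direction in links:
    roles.setdefault(client, set()).add("client")
    roles.setdefault(server, set()).add("server")

# Component that instance C must provide so that B can pull from it.
c_needs = COMPONENTS["pull"]["server"]
```

Here `roles["B"]` contains both `"client"` and `"server"`, matching the example: B needs an Input Port for A to push to, and a Remote Process Group to pull from C, while C needs an Output Port.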

Configure Site-to-Site client NiFi instance

Remote Process Group: In order to communicate with a remote NiFi instance via Site-to-Site, simply drag a Remote Process Group onto the canvas and enter the URL of the remote NiFi instance (for more information on the components of a Remote Process Group, see the Remote Process Group Transmission section of this guide). The URL is the same URL you would use to go to that instance’s User Interface. At that point, you can drag a connection to or from the Remote Process Group in the same way you would drag a connection to or from a Processor or a local Process Group. When you drag the connection, you will have a chance to choose which Port to connect to. Note that it may take up to one minute for the Remote Process Group to determine which ports are available.

If the connection is dragged starting from the Remote Process Group, the ports shown will be the Output Ports of the remote group, as this indicates that you will be pulling data from the remote instance. If the connection instead ends on the Remote Process Group, the ports shown will be the Input Ports of the remote group, as this implies that you will be pushing data to the remote instance.

IMPORTANT

If the remote instance is configured to use secure data transmission, you will see only ports that you are authorized to communicate with. For information on configuring NiFi to run securely, see the System Administrator’s Guide.

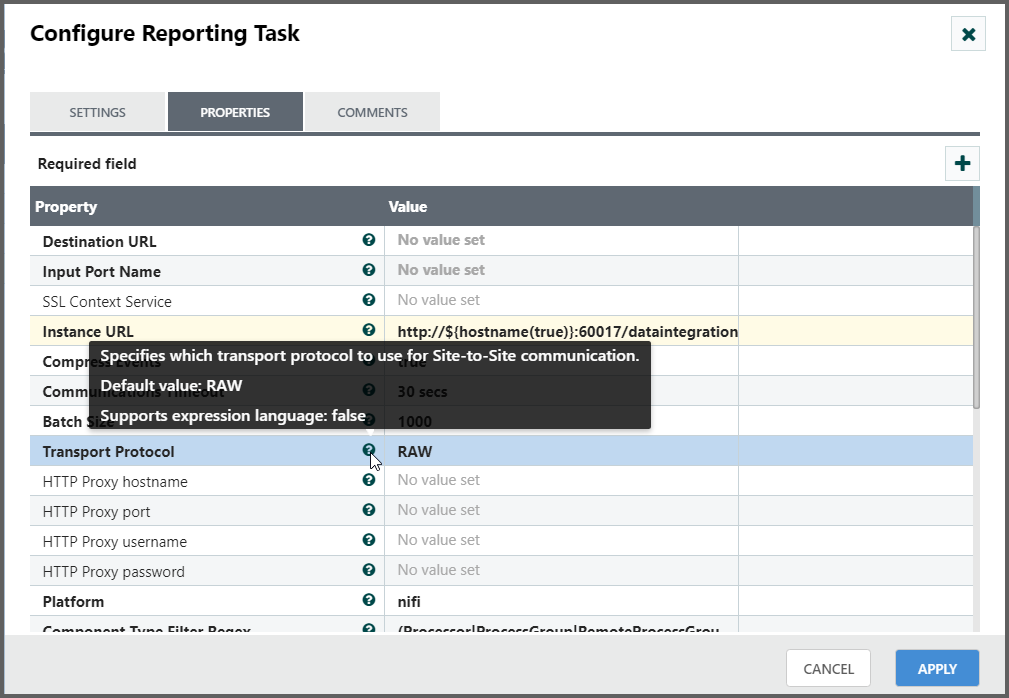

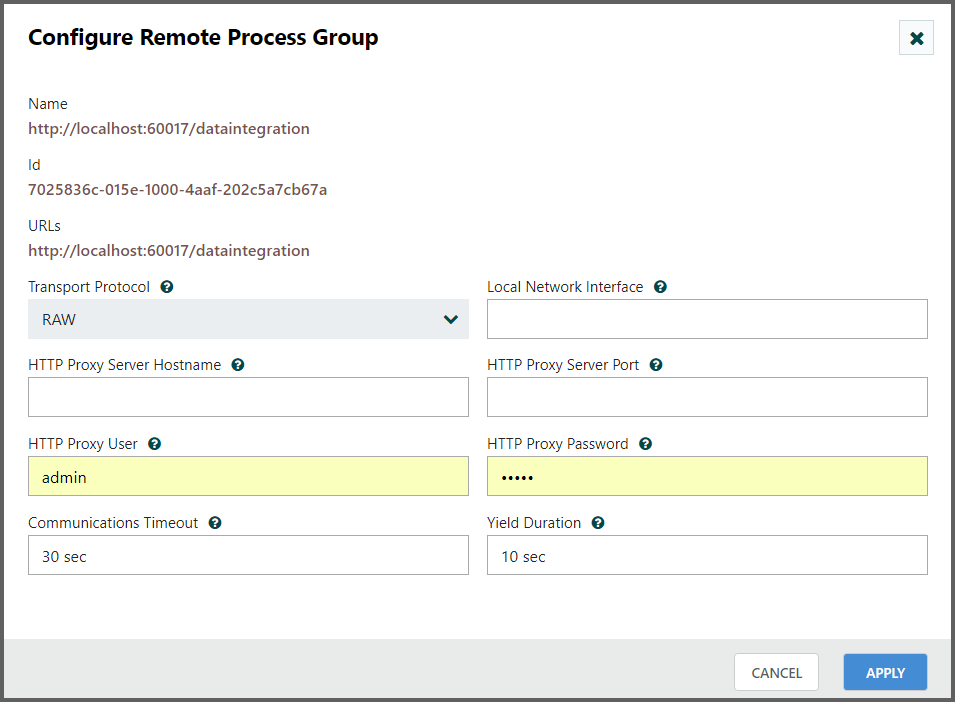

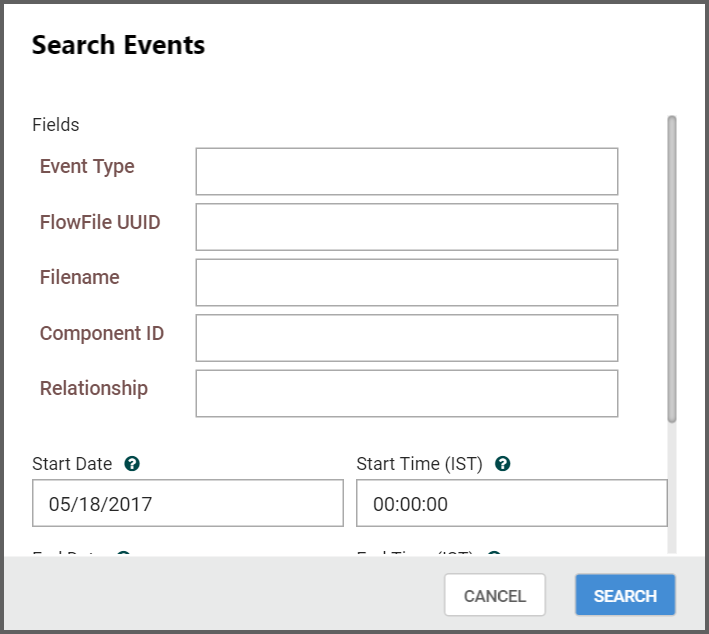

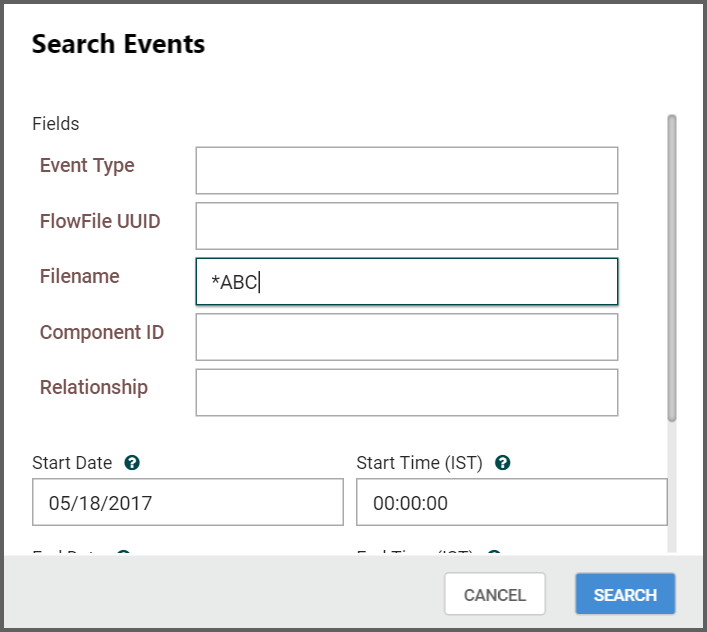

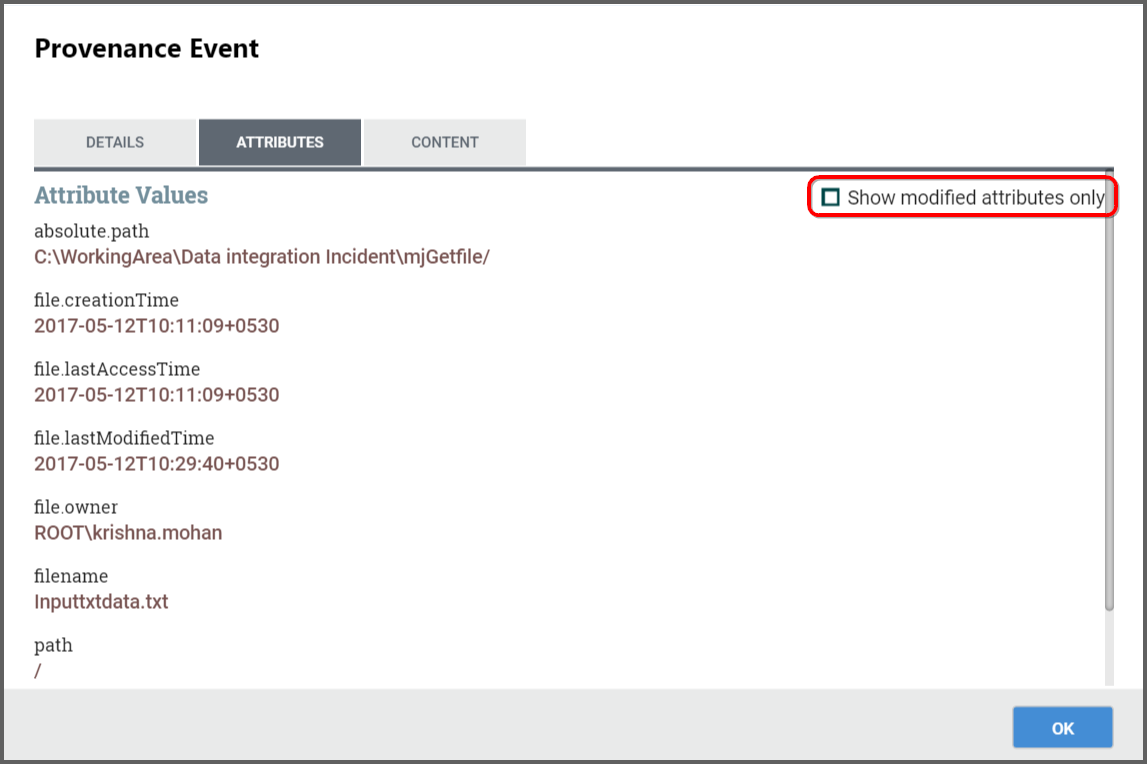

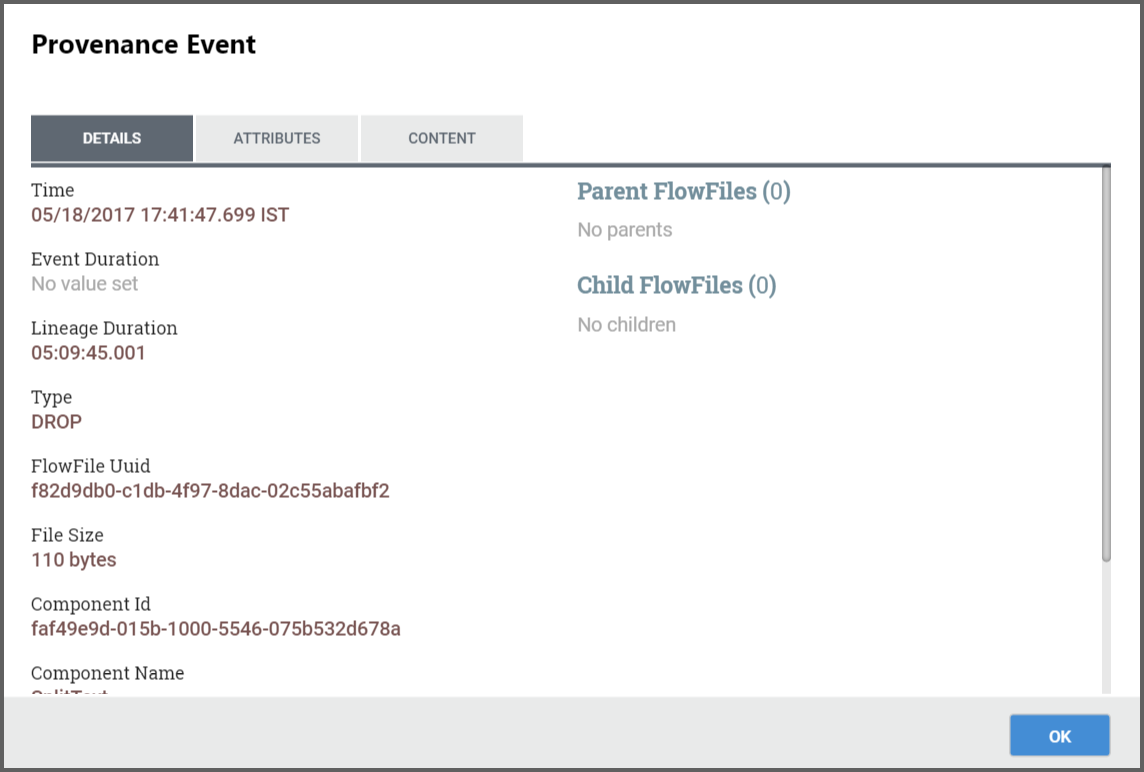

Transport Protocol: In the Remote Process Group creation or configuration dialog, you can choose the Transport Protocol to use for Site-to-Site communication, as shown in the following image: