Description and usage of ListS3:

Retrieves a listing of objects from an S3 bucket. For each object that is listed, creates a FlowFile that represents the object so that it can be fetched in conjunction with FetchS3Object. This Processor is designed to run on Primary Node only in a cluster. If the primary node changes, the new Primary Node will pick up where the previous node left off without duplicating all of the data.

Tags:

Amazon, S3, AWS, list

Properties:

In the table below, the names of required properties appear in bold. Any other properties (not in bold) are considered optional. The table also indicates any default values, whether a property supports the NiFi Expression Language, and whether a property is considered "sensitive", meaning that its value will be encrypted. Before entering a value in a sensitive property, ensure that the nifi.properties file has an entry for the property nifi.sensitive.props.key.

| Name | Default Value | Allowable Values | Description |
|---|---|---|---|
| Bucket | | | The name of the S3 bucket.<br>Supports Expression Language: true |
| Region | us-west-2 | | No Description Provided. |
| Access Key | | | No Description Provided.<br>Sensitive Property: true<br>Supports Expression Language: true |
| Secret Key | | | No Description Provided.<br>Sensitive Property: true<br>Supports Expression Language: true |
| Credentials File | | | Path to a file containing AWS access key and secret key in properties file format. |
| AWS Credentials Provider service | | Controller Service API: AWSCredentialsProviderService<br>Implementation: AWSCredentialsProviderControllerService | The Controller Service that is used to obtain AWS credentials provider. |
| Communications Timeout | 30 secs | | No Description Provided. |
| SSL Context Service | | Controller Service API: SSLContextService<br>Implementation: StandardSSLContextService | Specifies an optional SSL Context Service that, if provided, will be used to create connections. |
| Endpoint Override URL | | | Endpoint URL to use instead of the AWS default including scheme, host, port, and path. The AWS libraries select an endpoint URL based on the AWS region, but this property overrides the selected endpoint URL, allowing use with other S3-compatible endpoints. |
| Signer Override | Default Signature | | The AWS libraries use the default signer, but this property allows you to specify a custom signer to support older S3-compatible services. |
| Proxy Host | | | Proxy host name or IP.<br>Supports Expression Language: true |
| Proxy Host Port | | | Proxy host port.<br>Supports Expression Language: true |
| Delimiter | | | The string used to delimit directories within the bucket. Please consult the AWS documentation for the correct use of this field.<br>Supports Expression Language: true |
| Prefix | | | The prefix used to filter the object list. In most cases, it should end with a forward slash ('/').<br>Supports Expression Language: true |
| Use Versions | false | true, false | Specifies whether to use S3 versions, if applicable. If false, only the latest version of each object will be returned. |

Reads Attributes:

None specified.

Writes Attributes:

| Name | Description |
|---|---|
| s3.bucket | The name of the S3 bucket. |
| s3.etag | The ETag that can be used to see if the file has changed |
| s3.isLatest | A boolean indicating if this is the latest version of the object |
| s3.lastModified | The last modified time in milliseconds since epoch in UTC time |
| s3.length | The size of the object in bytes |
| s3.storeClass | The storage class of the object |
| s3.version | The version of the object, if applicable. |
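Because s3.lastModified holds milliseconds since the epoch, downstream logic outside NiFi often needs to turn it into a calendar time. The following is a minimal Python sketch of that conversion; the function name last_modified_to_utc is hypothetical and not part of NiFi.

```python
from datetime import datetime, timezone


def last_modified_to_utc(attr_value: str) -> datetime:
    """Convert an s3.lastModified attribute value (ms since epoch, UTC) to a datetime.

    Hypothetical helper for illustration; NiFi does not provide it.
    """
    millis = int(attr_value)
    # Split into whole seconds and leftover milliseconds to avoid float rounding.
    seconds, ms = divmod(millis, 1000)
    return datetime.fromtimestamp(seconds, tz=timezone.utc).replace(microsecond=ms * 1000)


print(last_modified_to_utc("1700000000123").isoformat())
# → 2023-11-14T22:13:20.123000+00:00
```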

Relationships:

| Name | Description |
|---|---|
| success | FlowFiles are routed to the success relationship. |

State management:

| Scope | Description |
|---|---|
| CLUSTER | After performing a listing of keys, the timestamp of the newest key is stored, along with the keys that share that same timestamp. This allows the Processor to list only keys that have been added or modified after this date the next time that the Processor is run. State is stored across the cluster so that this Processor can be run on Primary Node only and if a new Primary Node is selected, the new node can pick up where the previous node left off, without duplicating the data. |
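The state rule described above (store the newest timestamp plus the keys that share it) can be modeled in a few lines. This is a simplified, in-memory Python sketch for illustration only: it is not NiFi's implementation, the names ListingState and list_new_objects are hypothetical, and real ListS3 keeps this state in NiFi's cluster state rather than in a Python object.

```python
from dataclasses import dataclass, field


@dataclass
class ListingState:
    """Hypothetical model of the stored state: newest timestamp plus keys sharing it."""
    current_timestamp: int = -1
    current_keys: set = field(default_factory=set)


def list_new_objects(objects, state):
    """Return the (key, last_modified_ms) pairs not listed before and update state in place.

    `objects` is an iterable of (key, last_modified_ms) tuples from one listing pass.
    """
    new = []
    for key, modified in objects:
        # Anything older than the stored timestamp was already listed.
        if modified < state.current_timestamp:
            continue
        # Keys at exactly the stored timestamp were listed only if remembered.
        if modified == state.current_timestamp and key in state.current_keys:
            continue
        new.append((key, modified))

    if new:
        newest = max(m for _, m in new)
        keys_at_newest = {k for k, m in new if m == newest}
        if newest == state.current_timestamp:
            # Same newest timestamp as before: keep remembering the earlier keys too.
            state.current_keys |= keys_at_newest
        else:
            state.current_timestamp = newest
            state.current_keys = keys_at_newest
    return new


state = ListingState()
print(list_new_objects([("a.txt", 100), ("b.txt", 200), ("c.txt", 200)], state))
# A later pass sees one new key at the old timestamp and one newer key.
print(list_new_objects([("a.txt", 100), ("b.txt", 200), ("c.txt", 200),
                        ("d.txt", 200), ("e.txt", 300)], state))
```

Storing the keys that share the newest timestamp is what prevents an object written in the same millisecond as the last listed one from being skipped, without re-emitting the objects already listed at that timestamp.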

See Also:

FetchS3Object

How to configure?

Step 1: Drag and drop the ListS3 processor onto the canvas.

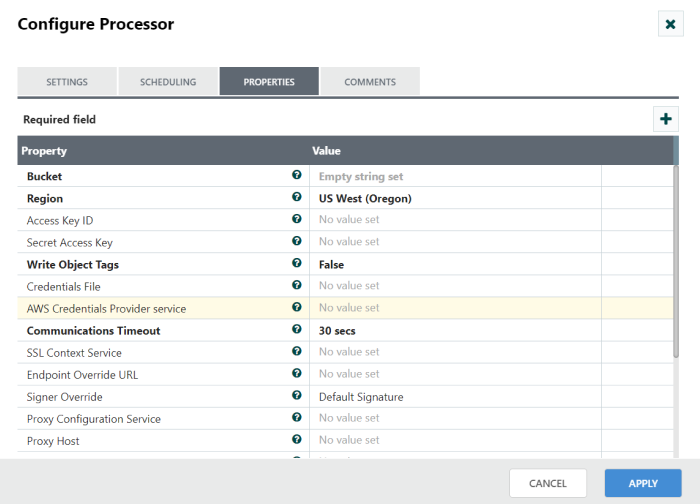

Step 2: Double-click the processor to open its configuration dialog.

Step 3: Review the usage of each property and set its value.

Properties and usage:

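Bucket: the name of the S3 bucket to list. In virtual-hosted-style URLs, the bucket name is part of the domain name (for example, https://my-bucket.s3.us-west-2.amazonaws.com).

Region: the AWS Region where the bucket is located (for example, us-west-2, the default). A Region is a physical location around the world where AWS clusters its data centers.

Access Key: the access key ID used to sign programmatic requests that you make to AWS.

Secret Key: the secret access key used, together with the access key ID, to sign programmatic requests that you make to AWS.

Credentials File: Path to a file containing AWS access key and secret key in properties file format.

AWS Credentials Provider service: the controller service that is used to obtain AWS credentials.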

Configure without AWS credentials provider controller service:

To create access keys for an IAM user:

- Sign into the AWS Management Console and open the IAM console.

- In the navigation pane, choose Users.

- Choose the name of the user whose access keys you want to create and choose the Security credentials tab.

- In the Access keys section, choose Create access key.

- To view the new access key pair, choose Show. Your credentials will look something like this:

  - Access key ID: BKKAIGSFODNM7EXAMPLE

  - Secret access key: mmkkrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

- To download the key pair, choose Download .csv file. Store the keys in a secure location. You will not have access to the secret access key again after this dialog box closes.

- Keep the keys confidential to protect your AWS account and never email them. Do not share them outside your organization, even if an inquiry appears to come from AWS or Amazon.com. No one who legitimately represents Amazon will ever ask you for your secret key.

- After you download the .csv file, choose Close. When you create an access key, the key pair is active by default, and you can use the pair right away.

Step 1: Drag and drop the ListS3 processor onto the canvas.

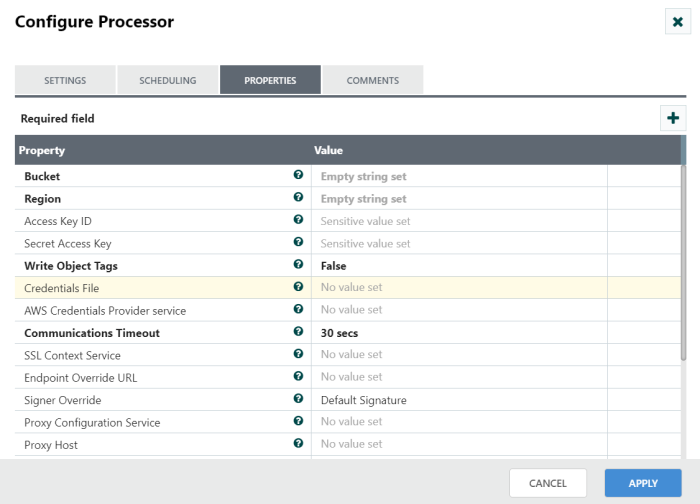

Step 2: Double-click the processor to open its configuration, review each property, and enter the Access Key and Secret Key directly, leaving the AWS Credentials Provider service property unset.

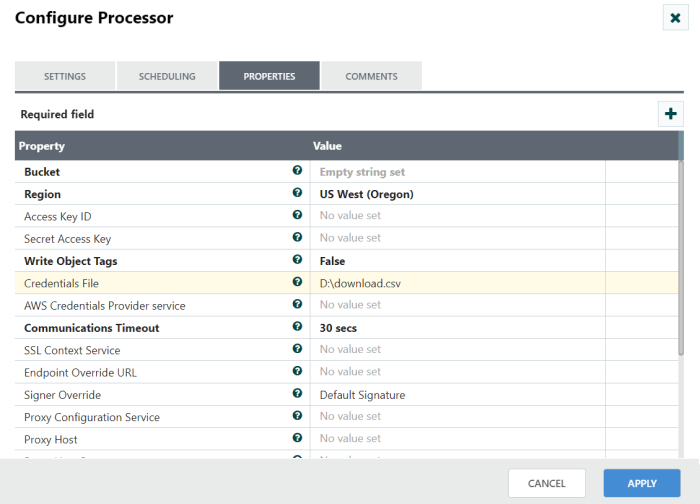

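Step 3: Alternatively, instead of entering the keys directly, set Credentials File to the path of a file that holds the access key and secret key. As described in the property table, this file must be in properties file format, so the downloaded .csv file cannot be used as-is.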
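The Credentials File property expects a properties file, while the IAM console download is a CSV. The Python sketch below converts one into the other. It assumes the CSV has a header row with the columns "Access key ID" and "Secret access key" (check your downloaded file), and it writes the accessKey and secretKey entries used by the AWS SDK's properties-file credentials format. The function name csv_to_properties is hypothetical.

```python
import csv
import io


def csv_to_properties(csv_text: str) -> str:
    """Convert an IAM access-key CSV into accessKey/secretKey properties text.

    Assumes a header row containing 'Access key ID' and 'Secret access key'.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    row = next(reader)  # the first data row holds the key pair
    return (
        f"accessKey={row['Access key ID']}\n"
        f"secretKey={row['Secret access key']}\n"
    )


sample_csv = (
    "Access key ID,Secret access key\n"
    "BKKAIGSFODNM7EXAMPLE,mmkkrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY\n"
)
print(csv_to_properties(sample_csv), end="")
```

When reading the real download from disk, open it with encoding="utf-8-sig" in case the file starts with a byte-order mark, write the result to a file readable by the NiFi user, and point Credentials File at that file.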

Configure with AWS credentials provider controller service:

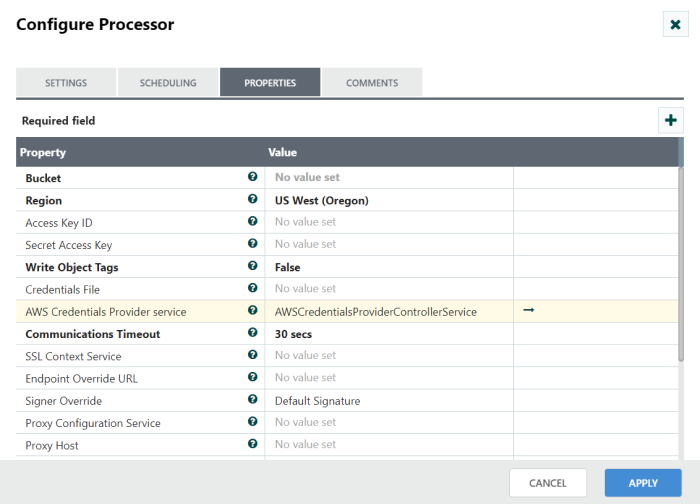

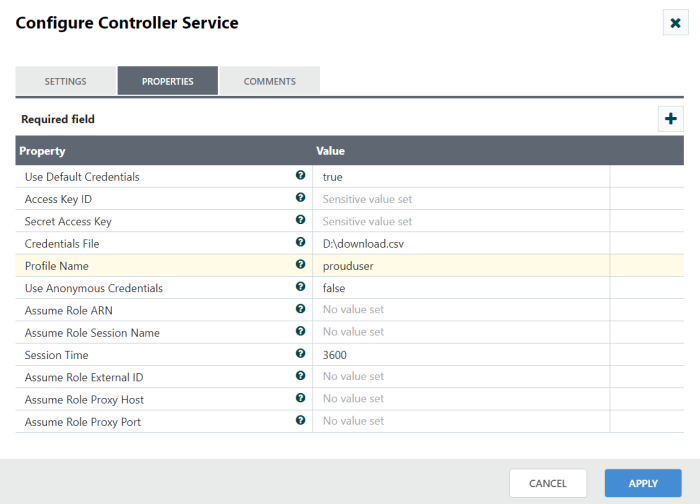

When you use the AWSCredentialsProviderControllerService, the credentials (an Access Key and Secret Key, a Credentials File, or a Profile Name) are configured in the controller service rather than in the processor. Permissions are not granted in NiFi: the IAM user that owns the keys must be given permission to list the bucket in IAM.
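As a rough guide to the IAM side, the sketch below builds a minimal identity policy for listing. It grants s3:ListBucket on the bucket ARN, which is the permission S3 checks for listing objects, and adds s3:ListBucketVersions when Use Versions is true. The bucket name my-bucket and the helper name list_bucket_policy are hypothetical; FetchS3Object would additionally need s3:GetObject on the objects (arn:aws:s3:::my-bucket/*).

```python
import json


def list_bucket_policy(bucket: str, use_versions: bool = False) -> str:
    """Build a minimal IAM policy document that allows listing `bucket`."""
    actions = ["s3:ListBucket"]
    if use_versions:
        # Listing object versions is authorized by a separate action.
        actions.append("s3:ListBucketVersions")
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": actions,
                # Listing is authorized on the bucket ARN, not on object ARNs.
                "Resource": f"arn:aws:s3:::{bucket}",
            }
        ],
    }
    return json.dumps(policy, indent=2)


print(list_bucket_policy("my-bucket"))
```

The printed JSON can be attached to the IAM user as an inline policy; scope it more tightly (for example with an s3:prefix condition) if the processor should only see part of the bucket.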

Step 1: In the processor configuration, click the AWS Credentials Provider service property, choose AWSCredentialsProviderControllerService, and open the controller service configuration page.

Step 2: Review the usage of each property of the controller service and set its value, for example Access Key and Secret Key.

Alternatively, set the controller service's Credentials File property to the path of a file containing the access key and secret key in properties file format.

Profile Name: the name of a profile in the AWS credentials file; the credentials stored under that name are used.

Example (AWS CLI, using a profile named produser): $ aws s3 ls --profile produser
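To check which keys a profile name refers to, it helps to know that the shared AWS credentials file (usually ~/.aws/credentials) is an INI file with one section per profile. The Python sketch below reads such content with configparser and returns the key pair for one profile. It is only a diagnostic aid under that assumption, not how NiFi reads the file; the function name credentials_for_profile and the sample values are hypothetical.

```python
import configparser


def credentials_for_profile(credentials_text: str, profile: str) -> tuple[str, str]:
    """Return (access key ID, secret access key) for `profile` from credentials-file text."""
    parser = configparser.ConfigParser()
    parser.read_string(credentials_text)
    section = parser[profile]  # raises KeyError if the profile does not exist
    return section["aws_access_key_id"], section["aws_secret_access_key"]


sample = """
[default]
aws_access_key_id = DEFAULTKEYEXAMPLE
aws_secret_access_key = defaultSecretExample

[produser]
aws_access_key_id = PRODKEYEXAMPLE
aws_secret_access_key = prodSecretExample
"""

print(credentials_for_profile(sample, "produser"))
```

A KeyError here usually means the Profile Name in the controller service does not match any section header in the file.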

Configure the controller service as shown in the screenshot below.

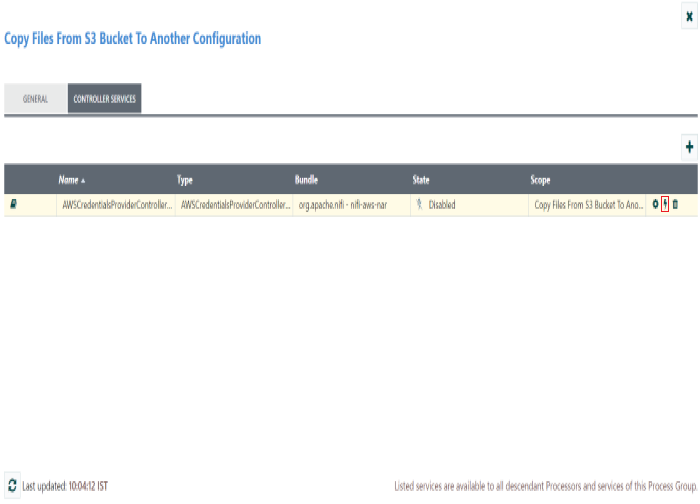

Step 3: After configuring the controller service, enable it and then start the processor.

Using Prefix and Delimiter in Apache NiFi’s ListS3 Processor

To filter and organize the objects in the S3 bucket, you can use the prefix and delimiter properties.

Prefix:

The prefix is an optional property that allows you to specify a common prefix for the objects you want to list in the S3 bucket. By providing a prefix, you can filter the objects based on a specific key prefix. Only the objects with keys that start with the specified prefix will be included in the listing result.

Example:

Consider a bucket with objects having keys like “documents/doc1.txt”, “documents/doc2.txt”, “images/image1.jpg”, “images/image2.jpg”. If you set the prefix to “documents/”, the ListS3 processor will only return the objects “documents/doc1.txt” and “documents/doc2.txt”.
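Prefix filtering is a plain "starts with" test on the full key, which is also why the property description recommends ending the prefix with a forward slash. The Python sketch below models that rule on the example keys; the extra key documents-archive/old.txt and the function name filter_by_prefix are hypothetical, added only to show what happens without the trailing slash.

```python
def filter_by_prefix(keys, prefix):
    """Keep only the keys that start with `prefix` (a model of S3's Prefix filter)."""
    return [k for k in keys if k.startswith(prefix)]


keys = [
    "documents/doc1.txt",
    "documents/doc2.txt",
    "images/image1.jpg",
    "images/image2.jpg",
    "documents-archive/old.txt",  # hypothetical key, not in the example above
]

print(filter_by_prefix(keys, "documents/"))
# Without the trailing slash, the hypothetical archive key matches too.
print(filter_by_prefix(keys, "documents"))
```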

Delimiter:

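The delimiter is another optional property that helps organize the objects in the S3 bucket. It specifies a character or string that acts as a logical hierarchy separator in the object keys. When a delimiter is set, S3 rolls up every key that contains the delimiter after the prefix into a single "common prefix" (comparable to a subfolder) instead of returning those keys individually. ListS3 creates FlowFiles only for individual objects, so objects grouped under a common prefix are not listed. In practice, a delimiter restricts the listing to a single level of the hierarchy.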

Example:

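Consider objects with keys like “documents/doc1.txt”, “documents/doc2.txt”, “documents/folder1/doc3.txt”, “documents/folder1/doc4.txt”, “documents/folder2/doc5.txt”. If you set the prefix to “documents/” and the delimiter to “/”, the ListS3 processor returns only “documents/doc1.txt” and “documents/doc2.txt”. The remaining keys are rolled up into the common prefixes “documents/folder1/” and “documents/folder2/” and are not listed. Without a delimiter, all five objects are listed.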
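The interaction of prefix and delimiter can be simulated locally. The Python sketch below is a simplified model of S3's listing semantics, not the S3 API itself: it returns the individual objects (what ListS3 turns into FlowFiles) separately from the common prefixes (which ListS3 does not emit). The function name list_with_delimiter is hypothetical.

```python
def list_with_delimiter(keys, prefix="", delimiter=""):
    """Model an S3 listing: return (objects, common_prefixes) for the given keys."""
    objects, common_prefixes = [], []
    for key in sorted(keys):  # S3 returns keys in lexicographic order
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        if delimiter and delimiter in rest:
            # Roll the key up to the first delimiter after the prefix.
            cp = prefix + rest[: rest.index(delimiter) + len(delimiter)]
            if cp not in common_prefixes:
                common_prefixes.append(cp)
        else:
            objects.append(key)
    return objects, common_prefixes


keys = [
    "documents/doc1.txt",
    "documents/doc2.txt",
    "documents/folder1/doc3.txt",
    "documents/folder1/doc4.txt",
    "documents/folder2/doc5.txt",
]

print(list_with_delimiter(keys, prefix="documents/", delimiter="/"))
print(list_with_delimiter(keys, delimiter="/"))  # no prefix: everything rolls up
```

To list the objects inside a subfolder with a delimiter set, point the prefix at that level, for example “documents/folder1/”.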

By combining the prefix and delimiter properties, you can effectively filter and organize the objects in the S3 bucket as per your requirements. These features provide flexibility and control over the object listing process in the ListS3 processor, enabling you to process specific subsets of objects or work with hierarchical structures within your S3 bucket.