NiFi System Administrator’s Guide

How to install and start Data Integration

-

Windows

-

Double click on “Syncfusion Data Integration Platform.exe” executable.

-

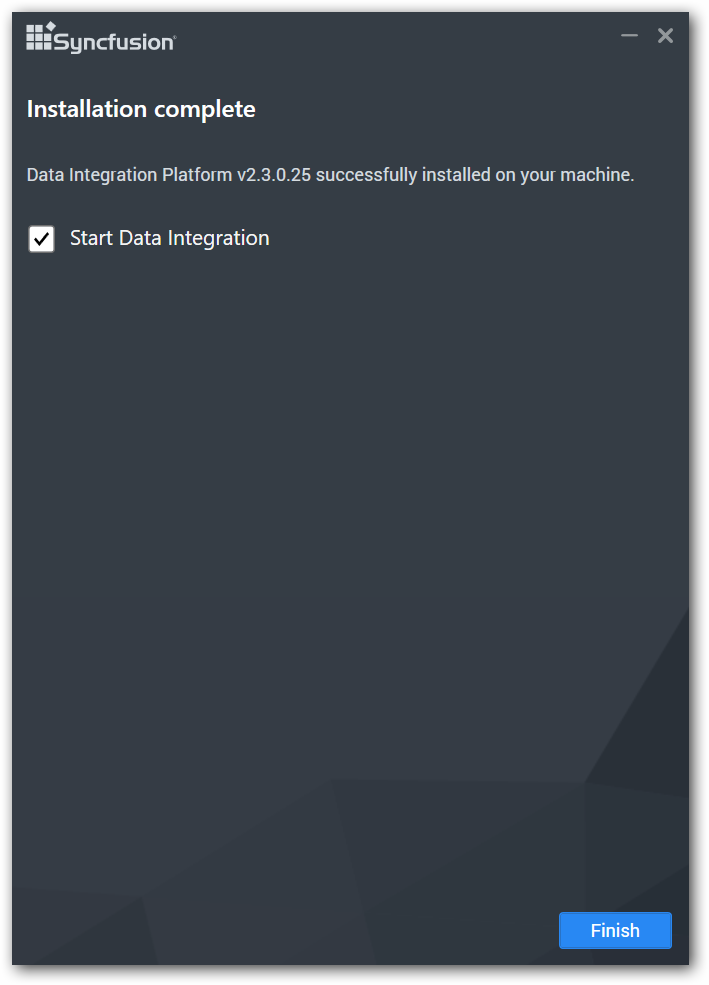

After installation complete, click “Start Data Integration” check box to start service and launch URL in default browser. For only starting service, uncheck the check box and click “Finish” button.

-

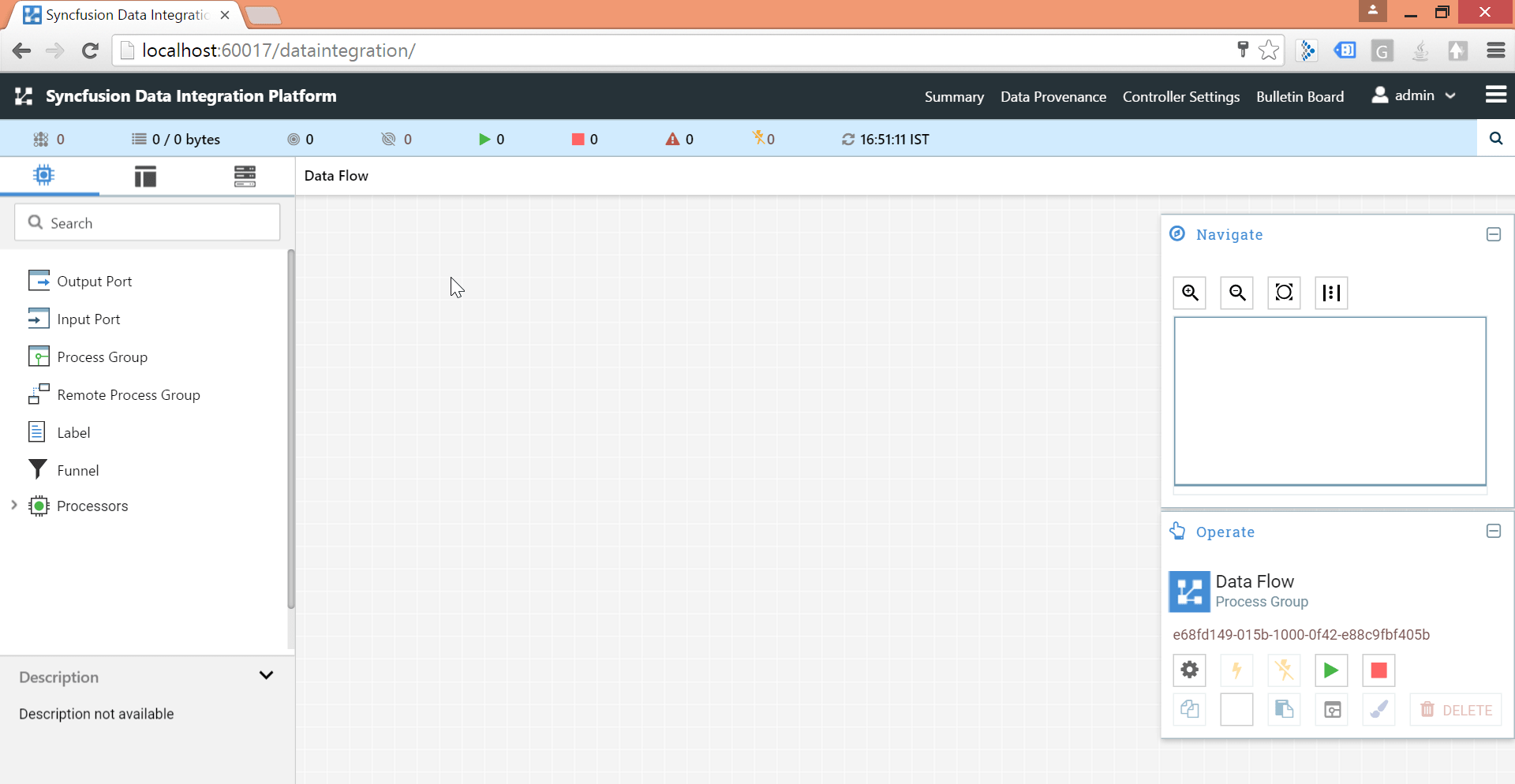

- If “Start Data Integration” check box is checked(refer above image), Syncfusion Data Integration Platform will be launched as below:

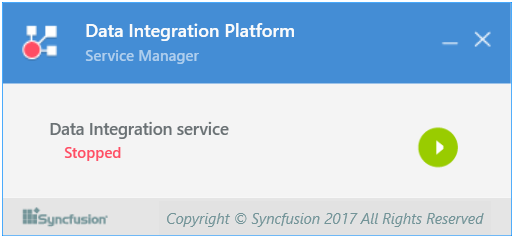

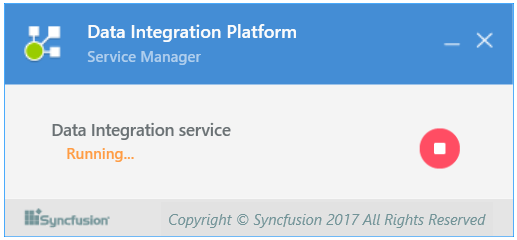

- Alternatively, to start or stop Data Integration in the background, click “Syncfusion Data Integration Manager” tray icon.

- To start Data Integration service, click stop option in “Syncfusion Data Integration Manager” window.

- To stop Data Integration service, click stop option in “Syncfusion Data Integration Manager” window.

When NiFi starts up, the following files and directories are created:

- content_repository

- database_repository

- flowfile_repository

- provenance_repository

- work directory

- logs directory

- Within the conf directory, the flow.xml.gz file and the templates directory are created

See the System Properties section of this guide for more information about configuring NiFi repositories and configuration files.

Security Configuration

NiFi provides several different configuration options for security purposes. The most important properties are those under the “security properties” heading in the nifi.properties file. In order to run securely, the following properties must be set:

| Property Name | Description |

|---|---|

| nifi.security.keystore | Filename of the Keystore that contains the server's private key. |

| nifi.security.keystoreType | The type of Keystore. Must be either PKCS12 or JKS. |

| nifi.security.keystorePasswd | The password for the Keystore. |

| nifi.security.keyPasswd | The password for the certificate in the Keystore. If not set, the value of nifi.security.keystorePasswd will be used. |

| nifi.security.truststore | Filename of the Truststore that will be used to authorize those connecting to NiFi. If not set, all who attempt to connect will be provided access as the *Anonymous* user. |

| nifi.security.truststoreType | The type of the Truststore. Must be either PKCS12 or JKS. |

| nifi.security.truststorePasswd | The password for the Truststore. |

| nifi.security.needClientAuth | Specifies whether or not connecting clients must authenticate themselves. Specifically this property is used by the NiFi cluster protocol. If the Truststore properties are not set, this must be false. Otherwise, a value of true indicates that nodes in the cluster will be authenticated and must have certificates that are trusted by the Truststores. |

Once the above properties have been configured, we can enable the User Interface to be accessed over HTTPS instead of HTTP. This is accomplished by setting the nifi.web.https.host and nifi.web.https.port properties. The nifi.web.https.host property indicates which hostname the server should run on. This allows admins to configure the application to run only on specific network interfaces. If it is desired that the HTTPS interface be accessible from all network interfaces, a value of 0.0.0.0 should be used.

IMPORTANT

It is important when enabling HTTPS that the

nifi.web.http.portproperty be unset.

Similar to nifi.security.needClientAuth, the web server can be configured to require certificate based client authentication for users accessing the User Interface. In order to do this it must be configured to not support username/password authentication (see below) and not grant access to anonymous users (see nifi.security.anonymous.authorities above). Either of these options will configure the web server to WANT certificate based client authentication. This will allow it to support users with certificates and those without that may be logging in with their credentials or those accessing anonymously. If username/password authentication and anonymous access are not configured, the web server will REQUIRE certificate based client authentication.

Now that the User Interface has been secured, we can easily secure Site-to-Site connections and inner-cluster communications, as well. This is accomplished by setting the nifi.remote.input.secure and nifi.cluster.protocol.is.secure properties, respectively, to true.

TLS Generation Toolkit

In order to facilitate the secure setup of NiFi, you can use the tls-toolkit command line utility to automatically generate the required keystores, truststore, and relevant configuration files. This is especially useful for securing multiple NiFi nodes, which can be a tedious and error-prone process.

NOTE

JKS keystores and truststores are recommended for NiFi. This tool allows the specification of other keystore types on the command line but will ignore a type of PKCS12 for use as the truststore because that format has some compatibility issues between BouncyCastle and Oracle implementations.

The tls-toolkit command line tool has two primary modes of operation:

-

Standalone — generates the certificate authority, keystores, truststores, and nifi.properties files in one command.

-

Client/Server mode — uses a Certificate Authority Server that accepts Certificate Signing Requests from clients, signs them, and sends the resulting certificates back. Both client and server validate the other’s identity through a shared secret.

Standalone

Standalone mode is invoked by running ./bin/tls-toolkit.sh standalone -h which prints the usage information along with descriptions of options that can be specified.

The most common options to specify are:

-

-n,--hostnamesThe comma-separated list of hostnames that you’d like to generate certificates for. It can be specified multiple times. Range and instance patterns are supported. See below for details. -

-C,--clientCertDnThe DN that you’d like to generate a client certificate for. It can be specified multiple times. -

-f,--nifiPropertiesFileThe base nifi.properties file that the tool will update for each host. -

-o,--outputDirectoryThe directory to use for the resulting Certificate Authority files and NiFi configurations. A subdirectory will be made for each host.

Hostname Patterns:

-

Square brackets can be used in order to easily specify a range of hostnames. Example: [01-20]

-

Parentheses can be used in order to specify that more than one NiFi instance will run on the given host(s). Example: (5)

Examples:

Create 4 sets of keystore, truststore, nifi.properties for localhost along with a client certificate with the given DN:

bin/tls-toolkit.sh standalone -n 'localhost(4)' -C 'CN=username,OU=NIFI'

Create keystore, truststore, nifi.properties for 10 NiFi hostnames in each of 4 subdomains:

bin/tls-toolkit.sh standalone -n 'nifi[01-10].subdomain[1-4].domain'

Create 2 sets of keystore, truststore, nifi.properties for 10 NiFi hostnames in each of 4 subdomains along with a client certificate with the given DN:

bin/tls-toolkit.sh standalone -n 'nifi[01-10].subdomain[1-4].domain(2)' -C 'CN=username,OU=NIFI'

Client/Server

Client/Server mode relies on a long-running Certificate Authority (CA) to issue certificates. The CA can be stopped when you’re not bringing nodes online.

Server

The CA server is invoked by running ./bin/tls-toolkit server -h prints the usage information along with descriptions of options that can be specified.

The most common options to specify are:

-

-f,--configJsonThe location of the JSON config (written after first run) -

-F,--useConfigJsonLoads all relevant configuration from the config JSON (configJson is the only other argument necessary) -

-t,--tokenThe token used to prevent man in the middle attacks (this should be a long, random value and needs to be known when invoking the client) -

-D,--dnThe DN for the CA

Client

The client can be used to request new Certificates from the CA. The client utility generates a key pair and Certificate Signing Request (CSR) and sends the CSR to the Certificate Authority. The client is invoked by running ./bin/tls-toolkit.sh client -h which prints the usage information along with descriptions of options that can be specified.

The most common options to specify are:

-

-f,--configJsonThe JSON config file -

-c,--certificateAuthorityHostnameThe hostname of the CA -

-D,--DNThe DN for the CSR (and Certificate) -

-t,--tokenThe token used to prevent man in the middle attacks (this should be a long, random value and needs to be the same one used to start the CA server) -

-T,--keyStoreTypeThe type of keystore to create (leave default for NiFi nodes, specify PKCS12 to create client cert)

After running the client you will have the CA’s certificate, a keystore, a truststore, and a config.json with information about them as well as their passwords.

For a client certificate that can be easily imported into the browser, specify: -T PKCS12

User Authentication

NiFi supports user authentication via client certificates or via username/password. Username/password authentication is performed by a Login Identity Provider. The Login Identity Provider is a pluggable mechanism for authenticating users via their username/password. Which Login Identity Provider to use is configured in two properties in the nifi.properties file.

The nifi.login.identity.provider.configuration.file property specifies the configuration file for Login Identity Providers. The nifi.security.user.login.identity.provider property indicates which of the configured Login Identity Provider should be used. If this property is not configured, NiFi will not support username/password authentication and will require client certificates for authenticating users over HTTPS. By default, this property is not configured meaning that username/password must be explicity enabled.

NiFi does not perform user authentication over HTTP. Using HTTP all users will be granted all roles.

Lightweight Directory Access Protocol (LDAP)

Below is an example and description of configuring a Login Identity Provider that integrates with a Directory Server to authenticate users.

<provider>

<identifier>ldap-provider</identifier>

<class>org.apache.nifi.ldap.LdapProvider</class>

<property name="Authentication Strategy">START_TLS</property>

<property name="Manager DN"></property>

<property name="Manager Password"></property>

<property name="TLS - Keystore"></property>

<property name="TLS - Keystore Password"></property>

<property name="TLS - Keystore Type"></property>

<property name="TLS - Truststore"></property>

<property name="TLS - Truststore Password"></property>

<property name="TLS - Truststore Type"></property>

<property name="TLS - Client Auth"></property>

<property name="TLS - Protocol"></property>

<property name="TLS - Shutdown Gracefully"></property>

<property name="Referral Strategy">FOLLOW</property>

<property name="Connect Timeout">10 secs</property>

<property name="Read Timeout">10 secs</property>

<property name="Url"></property>

<property name="User Search Base"></property>

<property name="User Search Filter"></property>

<property name="Authentication Expiration">12 hours</property>

</provider>

With this configuration, username/password authentication can be enabled by referencing this provider in nifi.properties.

nifi.security.user.login.identity.provider=ldap-provider

| Property Name | Description |

|---|---|

| Authentication Strategy | How the connection to the LDAP server is authenticated. Possible values are ANONYMOUS, SIMPLE, or START_TLS. |

| Manager DN | The DN of the manager that is used to bind to the LDAP server to search for users. |

| Manager Password | The password of the manager that is used to bind to the LDAP server to search for users. |

| TLS - Keystore | Path to the Keystore that is used when connecting to LDAP using START_TLS. |

| TLS - Keystore Password | Password for the Keystore that is used when connecting to LDAP using START_TLS. |

| TLS - Keystore Type | Type of the Keystore that is used when connecting to LDAP using START_TLS (i.e. JKS or PKCS12). |

| TLS - Truststore | Path to the Truststore that is used when connecting to LDAP using START_TLS. |

| TLS - Truststore Password | Password for the Truststore that is used when connecting to LDAP using START_TLS. |

| TLS - Truststore Type | Type of the Truststore that is used when connecting to LDAP using START_TLS (i.e. JKS or PKCS12). |

| TLS - Client Auth | Client authentication policy when connecting to LDAP using START_TLS. Possible values are REQUIRED, WANT, NONE. |

| TLS - Protocol | Protocol to use when connecting to LDAP using START_TLS. (i.e. TLS, TLSv1.1, TLSv1.2, etc). |

| TLS - Shutdown Gracefully | Specifies whether the TLS should be shut down gracefully before the target context is closed. Defaults to false. |

| Referral Strategy | Strategy for handling referrals. Possible values are FOLLOW, IGNORE, THROW. |

| Connect Timeout | Duration of connect timeout. (i.e. 10 secs). |

| Read Timeout | Duration of read timeout. (i.e. 10 secs). |

| Url | Url of the LDAP servier (i.e. ldap://<hostname>:<port>). |

| User Search Base | Base DN for searching for users (i.e. CN=Users,DC=example,DC=com). |

| User Search Filter | Filter for searching for users against the User Search Base. (i.e. sAMAccountName={0}). The user specified name is inserted into {0}. |

| Authentication Expiration | The duration of how long the user authentication is valid for. If the user never logs out, they will be required to log back in following this duration. |

Kerberos

Below is an example and description of configuring a Login Identity Provider that integrates with a Kerberos Key Distribution Center (KDC) to authenticate users.

<provider>

<identifier>kerberos-provider</identifier>

<class>org.apache.nifi.kerberos.KerberosProvider</class>

<property name="Default Realm">NIFI.APACHE.ORG</property>

<property name="Kerberos Config File">/etc/krb5.conf</property>

<property name="Authentication Expiration">12 hours</property>

</provider>

With this configuration, username/password authentication can be enabled by referencing this provider in nifi.properties.

nifi.security.user.login.identity.provider=kerberos-provider

| Property Name | Description |

|---|---|

| Authentication Expiration | The duration of how long the user authentication is valid for. If the user never logs out, they will be required to log back in following this duration. |

| Default Realm | Default realm to provide when user enters incomplete user principal (i.e. NIFI.APACHE.ORG). |

| Kerberos Config File | Absolute path to Kerberos client configuration file. |

See also Kerberos Service to allow Single Sign-On access via client Kerberos tickets.

OpenId Connect

To enable authentication via OpenId Connect the following properties must be configured in nifi.properties.

| Property Name | Description |

|---|---|

| nifi.security.user.oidc.preferred.jwsalgorithm | The preferred algorithm for for validating identity tokens. If this value is blank, it will default to RS256 which is required to be supported by the OpenId Connect Provider according to the specification. If this value is HS256, HS384, or HS512, NiFi will attempt to validate HMAC protected tokens using the specified client secret. If this value is none, NiFi will attempt to validate unsecured/plain tokens. Other values for this algorithm will attempt to parse as an RSA or EC algorithm to be used in conjunction with the JSON Web Key (JWK) provided through the jwks_uri in the metadata found at the discovery URL. |

| nifi.security.user.oidc.discovery.url | The discovery URL for the desired OpenId Connect Provider (http://openid.net/specs/openid-connect-discovery-1_0.html). |

| nifi.security.user.oidc.connect.timeout | Connect timeout when communicating with the OpenId Connect Provider. |

| nifi.security.user.oidc.read.timeout | Read timeout when communicating with the OpenId Connect Provider. |

| nifi.security.user.oidc.client.id | The client id for NiFi after registration with the OpenId Connect Provider. |

| nifi.security.user.oidc.client.secret | The client secret for NiFi after registration with the OpenId Connect Provider. |

Apache Knox

To enable authentication via Apache Knox the following properties must be configured in nifi.properties.

| Property Name | Description |

|---|---|

| nifi.security.user.knox.audiences | Optional. A comma separate listed of allowed audiences. If set, the audience in the token must be present in this listing. The audience that is populated in the token can be configured in Knox. |

Multi-Tenant Authorization

After you have configured NiFi to run securely and with an authentication mechanism, you must configure who has access to the system, and the level of their access. You can do this using multi-tenant authorization. Multi-tenant authorization enables multiple groups of users (tenants) to command, control, and observe different parts of the dataflow, with varying levels of authorization. When an authenticated user attempts to view or modify a NiFi resource, the system checks whether the user has privileges to perform that action. These privileges are defined by policies that you can apply system-wide or to individual components.

Authorizer Configuration

An authorizer grants users the privileges to manage users and policies by creating preliminary authorizations at startup.

Authorizers are configured using two properties in the nifi.properties file:

-

The

nifi.authorizer.configuration.fileproperty specifies the configuration file where authorizers are defined. By default, the authorizers.xml file located in the root installation conf directory is selected. -

The

nifi.security.user.authorizerproperty indicates which of the configured authorizers in the authorizers.xml file to use.

Authorizers.xml Setup

The authorizers.xml file is used to define and configure available authorizers. The default authorizer is the StandardManagedAuthorizer. The managed authorizer is comprised of a UserGroupProvider and a AccessPolicyProvider. The users, group, and access policies will be loaded and optionally configured through these providers. The managed authorizer will make all access decisions based on these provided users, groups, and access policies.

During startup there is a check to ensure that there are no two users/groups with the same identity/name. This check is executed regardless of the configured implementation. This is necessary because this is how users/groups are identified and authorized during access decisions.

The default UserGroupProvider is the FileUserGroupProvider, however, you can develop additional UserGroupProviders as extensions. The FileUserGroupProvider has the following properties:

-

Users File - The file where the FileAuthorizer stores users and groups. By default, the users.xml in the conf directory is chosen.

-

Initial Admin Identity - The identity of an initial admin user that is granted access to the UI and given the ability to create additional users, groups, and policies. This property is only used when there are no other users, groups, and policies defined.

-

Legacy Authorized Users File - The full path to an existing authorized-users.xml that is automatically converted to the multi-tenant authorization model. This property is only used when there are no other users, groups, and policies defined.

-

Node Identity - The identity of a NiFi cluster node. When clustered, a property for each node should be defined, so that every node knows about every other node. If not clustered, these properties can be ignored.

-

Initial User Identity - The identity of a users and systems to seed the Users File. The name of each property must be unique, for example: “Initial User Identity A”, “Initial User Identity B”, “Initial User Identity C” or “Initial User Identity 1”, “Initial User Identity 2”, “Initial User Identity 3”

Another option for the UserGroupProvider is the LdapUserGroupProvider. By default, this option is commented out but can be configured in lieu of the FileUserGroupProvider. This will sync users and groups from a directory server and will present them in NiFi UI in read only form. The LdapUserGroupProvider has the following properties:

-

Authentication Strategy - How the connection to the LDAP server is authenticated. Possible values are ANONYMOUS, SIMPLE, LDAPS, or START_TLS

-

Manager DN - The DN of the manager that is used to bind to the LDAP server to search for users.

-

Manager Password - The password of the manager that is used to bind to the LDAP server to search for users.

-

TLS - Keystore - Path to the Keystore that is used when connecting to LDAP using LDAPS or START_TLS.

-

TLS - Keystore Password - Password for the Keystore that is used when connecting to LDAP using LDAPS or START_TLS.

-

TLS - Keystore Type - Type of the Keystore that is used when connecting to LDAP using LDAPS or START_TLS (i.e. JKS or PKCS12).

-

TLS - Truststore - Path to the Truststore that is used when connecting to LDAP using LDAPS or START_TLS.

-

TLS - Truststore Password - Password for the Truststore that is used when connecting to LDAP using LDAPS or START_TLS.

-

TLS - Truststore Type - Type of the Truststore that is used when connecting to LDAP using LDAPS or START_TLS (i.e. JKS or PKCS12).

-

TLS - Client Auth - Client authentication policy when connecting to LDAP using LDAPS or START_TLS. Possible values are REQUIRED, WANT, NONE.

-

TLS - Protocol - Protocol to use when connecting to LDAP using LDAPS or START_TLS. (i.e. TLS, TLSv1.1, TLSv1.2, etc).

-

TLS - Shutdown Gracefully - Specifies whether the TLS should be shut down gracefully before the target context is closed. Defaults to false.

-

Referral Strategy - Strategy for handling referrals. Possible values are FOLLOW, IGNORE, THROW.

-

Connect Timeout - Duration of connect timeout. (i.e. 10 secs).

-

Read Timeout - Duration of read timeout. (i.e. 10 secs).

-

Url - Space-separated list of URLs of the LDAP servers (i.e. ldap://

: ). -

Page Size - Sets the page size when retrieving users and groups. If not specified, no paging is performed.

-

Sync Interval - Duration of time between syncing users and groups. (i.e. 30 mins).

-

User Search Base - Base DN for searching for users (i.e. ou=users,o=nifi). Required to search users.

-

User Object Class - Object class for identifying users (i.e. person). Required if searching users.

-

User Search Scope - Search scope for searching users (ONE_LEVEL, OBJECT, or SUBTREE). Required if searching users.

-

User Search Filter - Filter for searching for users against the User Search Base (i.e. (member of=cn=team1,ou=groups,o=nifi) ). Optional.

-

User Identity Attribute - Attribute to use to extract user identity (i.e. cn). Optional. If not set, the entire DN is used.

-

User Group Name Attribute - Attribute to use to define group membership (i.e. member of). Optional. If not set group membership will not be calculated through the users. Will rely on group membership being defined through Group Member Attribute if set.

-

Group Search Base - Base DN for searching for groups (i.e. ou=groups,o=nifi). Required to search groups.

-

Group Object Class - Object class for identifying groups (i.e. groupOfNames). Required if searching groups.

-

Group Search Scope - Search scope for searching groups (ONE_LEVEL, OBJECT, or SUBTREE). Required if searching groups.

-

Group Search Filter - Filter for searching for groups against the Group Search Base. Optional.

-

Group Name Attribute - Attribute to use to extract group name (i.e. cn). Optional. If not set, the entire DN is used.

-

Group Member Attribute - Attribute to use to define group membership (i.e. member). Optional. If not set group membership will not be calculated through the groups. Will rely on group member being defined through User Group Name Attribute if set.

Another option for the UserGroupProvider are composite implementations. This means that multiple sources/implementations can be configured and composed. For instance, an admin can configure users/groups to be loaded from a file and a directory server. There are two composite implementations, one that supports multiple UserGroupProviders and one that supports multiple UserGroupProviders and a single configurable UserGroupProvider.

The CompositeUserGroupProvider will provide support for retrieving users and groups from multiple sources. The CompositeUserGroupProvider has the following properties:

- User Group Provider - The identifier of user group providers to load from. The name of each property must be unique, for example: “User Group Provider A”, “User Group Provider B”, “User Group Provider C” or “User Group Provider 1”, “User Group Provider 2”, “User Group Provider 3”

The CompositeConfigurableUserGroupProvider will provide support for retrieving users and groups from multiple sources. Additionally, a single configurable user group provider is required. Users from the configurable user group provider are configurable, however users loaded from one of the User Group Provider [unique key] will not be. The CompositeConfigurableUserGroupProvider has the following properties:

-

Configurable User Group Provider - A configurable user group provider.

-

User Group Provider - The identifier of user group providers to load from. The name of each property must be unique, for example: “User Group Provider A”, “User Group Provider B”, “User Group Provider C” or “User Group Provider 1”, “User Group Provider 2”, “User Group Provider 3”

The default AccessPolicyProvider is the FileAccessPolicyProvider, however, you can develop additional AccessPolicyProvider as extensions. The FileAccessPolicyProvider has the following properties:

-

User Group Provider - The identifier for an User Group Provider defined above that will be used to access users and groups for use in the managed access policies.

-

Authorizations File - The file where the FileAccessPolicyProvider will store policies.

-

Initial Admin Identity - The identity of an initial admin user that will be granted access to the UI and given the ability to create additional users, groups, and policies. The value of this property could be a DN when using certificates or LDAP, or a Kerberos principal. This property will only be used when there are no other policies defined. If this property is specified then a Legacy Authorized Users File can not be specified.

-

Legacy Authorized Users File - The full path to an existing authorized-users.xml that will be automatically converted to the new authorizations model. If this property is specified then an Initial Admin Identity can not be specified, and this property will only be used when there are no other users, groups, and policies defined.

-

Node Identity - The identity of a NiFi cluster node. When clustered, a property for each node should be defined, so that every node knows about every other node. If not clustered these properties can be ignored. The name of each property must be unique, for example for a three node cluster: “Node Identity A”, “Node Identity B”, “Node Identity C” or “Node Identity 1”, “Node Identity 2”, “Node Identity 3”

The identities configured in the Initial Admin Identity, the Node Identity properties, or discovered in a Legacy Authorized Users File must be available in the configured User Group Provider.

The default authorizer is the StandardManagedAuthorizer, however, you can develop additional authorizers as extensions. The StandardManagedAuthorizer has the following properties:

- Access Policy Provider - The identifier for an Access Policy Provider defined above.

The FileAuthorizer has been replaced with the more granular StandardManagedAuthorizer approach described above. However, it is still available for backwards compatibility reasons. The FileAuthorizer has the following properties.

-

Authorizations File - The file where the FileAuthorizer stores policies. By default, the authorizations.xml in the conf directory is chosen.

-

Users File - The file where the FileAuthorizer stores users and groups. By default, the users.xml in the conf directory is chosen.

-

Initial Admin Identity - The identity of an initial admin user that is granted access to the UI and given the ability to create additional users, groups, and policies. This property is only used when there are no other users, groups, and policies defined.

-

Legacy Authorized Users File - The full path to an existing authorized-users.xml that is automatically converted to the multi-tenant authorization model. This property is only used when there are no other users, groups, and policies defined.

-

Node Identity - The identity of a NiFi cluster node. When clustered, a property for each node should be defined, so that every node knows about every other node. If not clustered, these properties can be ignored.

Initial Admin Identity (New NiFi Instance)

If you are setting up a secured NiFi instance for the first time, you must manually designate an “Initial Admin Identity” in the authorizers.xml file. This initial admin user is granted access to the UI and given the ability to create additional users, groups, and policies. The value of this property could be a DN (when using certificates or LDAP) or a Kerberos principal. If you are the NiFi administrator, add yourself as the “Initial Admin Identity”.

Here is an example LDAP entry using the name John Smith:

<authorizer>

<identifier>file-provider</identifier>

<class>org.apache.nifi.authorization.FileAuthorizer</class>

<property name="Authorizations File">./conf/authorizations.xml</property>

<property name="Users File">./conf/users.xml</property>

<property name="Initial Admin Identity">cn=John Smith,ou=people,dc=example,dc=com</property>

<property name="Legacy Authorized Users File"></property>

<!--

<property name="Node Identity 1"></property>

<property name="Node Identity 2"></property>

-->

</authorizer>

</authorizers>

Here is an example Kerberos entry using the name John Smith and realm NIFI.APACHE.ORG:

<authorizer>

<identifier>file-provider</identifier>

<class>org.apache.nifi.authorization.FileAuthorizer</class>

<property name="Authorizations File">./conf/authorizations.xml</property>

<property name="Users File">./conf/users.xml</property>

<property name="Initial Admin Identity">example_user@NIFI.APACHE.ORG</property>

<property name="Legacy Authorized Users File"></property>

<!--

<property name="Node Identity 1"></property>

<property name="Node Identity 2"></property>

-->

</authorizer>

</authorizers>

After you have edited and saved the authorizers.xml file, restart NiFi. The “Initial Admin Identity” user and administrative policies are added to the users.xml and authorizations.xml files during restart. Once NiFi starts, the “Initial Admin Identity” user is able to access the UI and begin managing users, groups, and policies.

IMPORTANT

For a brand new secure flow, providing the “Initial Admin Identity” gives that user access to get into the UI and to manage users, groups and policies. But if that user wants to start modifying the flow, they need to grant themselves policies for the root process group. The system is unable to do this automatically because in a new flow the UUID of the root process group is not permanent until the flow.xml.gz is generated. If the NiFi instance is an upgrade from an existing flow.xml.gz or a 1.x instance going from unsecured to secure, then the “Initial Admin Identity” user is automatically given the privileges to modify the flow.

Legacy Authorized Users (NiFi Instance Upgrade)

If you are upgrading from a 0.x NiFi instance, you can convert your previously configured users and roles to the multi-tenant authorization model. In the authorizers.xml file, specify the location of your existing authorized-users.xml file in the “Legacy Authorized Users File” property.

Here is an example entry:

<authorizers>

<authorizer>

<identifier>file-provider</identifier>

<class>org.apache.nifi.authorization.FileAuthorizer</class>

<property name="Authorizations File">./conf/authorizations.xml</property>

<property name="Users File">./conf/users.xml</property>

<property name="Initial Admin Identity"></property>

<property name="Legacy Authorized Users File">/Users/example_user/config_files/authorized-users.xml</property>

</authorizer>

</authorizers>

After you have edited and saved the authorizers.xml file, restart NiFi. Users and roles from the authorized-users.xml file are converted and added as identities and policies in the users.xml and authorizations.xml files. Once the application starts, users who previously had a legacy Administrator role can access the UI and begin managing users, groups, and policies.

Here is a summary of policies assigned to each legacy role if the NiFi instance has an existing flow.xml.gz:

Global Access Policies

| Admin | DFM | Monitor | Provenance | NiFi | Proxy | |

|---|---|---|---|---|---|---|

| view the UI | * | * | * | |||

| access the controller - view | * | * | * | * | ||

| access the controller - modify | * | |||||

| query provenance | * | |||||

| access restricted components | * | |||||

| access all policies - view | * | |||||

| access all policies - modify | * | |||||

| access users/user groups - view | * | |||||

| access users/user groups - modify | * | |||||

| retrieve site-to-site details | * | |||||

| view system diagnostics | * | * | ||||

| proxy user requests | * | |||||

| access counters |

Component Access Policies on the Root Process Group

| Admin | DFM | Monitor | Provenance | NiFi | Proxy | |

|---|---|---|---|---|---|---|

| view the component | * | * | * | |||

| modify the component | * | |||||

| send proxy user requests | * |

For details on the policies in the table, see Access Policies.

IMPORTANT

NiFi fails to restart if values exist for both the “Initial Admin Identity” and “Legacy Authorized Users File” properties. You can specify only one of these values to initialize authorizations.

IMPORTANT

Do not manually edit the authorizations.xml file. Create authorizations only during initial setup and afterwards using the NiFi UI.

Cluster Node Identities

If you are running NiFi in a clustered environment, you must specify the identities for each node. The authorization policies required for the nodes to communicate are created during startup

For example, if you are setting up a 2 node cluster with the following DNs for each node:

cn=nifi-1,ou=people,dc=example,dc=com

cn=nifi-2,ou=people,dc=example,dc=com

<authorizer>

<identifier>file-provider</identifier>

<class>org.apache.nifi.authorization.FileAuthorizer</class>

<property name="Authorizations File">./conf/authorizations.xml</property>

<property name="Users File">./conf/users.xml</property>

<property name="Initial Admin Identity">example_user@NIFI.APACHE.ORG</property>

<property name="Legacy Authorized Users File"></property>

<property name="Node Identity 1">cn=nifi-1,ou=people,dc=example,dc=com</property>

<property name="Node Identity 2">cn=nifi-2,ou=people,dc=example,dc=com</property>

</authorizer>

</authorizers>

IMPORTANT

In a cluster, all nodes must have the same authorizations.xml. If a node has a different authorizations.xml, it cannot join the cluster. The only exception is if a node has an empty authorizations.xml. In this scenario, the node inherits the authorizations.xml from the cluster.

Now that initial authorizations have been created, additional users, groups and authorizations can be created and managed in the NiFi UI.

Configuring Users & Access Policies

This section describes:

-

How to create users and groups

-

How access policies are used to define authorizations

-

How to view policies that are set on a user

-

How to configure access policies by walking through specific examples

IMPORTANT

Instructions requiring interaction with the UI assume the application is being accessed by User1, a user with administrator privileges, such as the “Initial Admin Identity” user or a converted legacy admin user (see Authorizers.xml Setup).

Access Policies

You can manage the ability for users and groups to view or modify NiFi resources using access policies. There are two types of access policies that can be applied to a resource:

-

View - If a view policy is created for a resource, only the users or groups that are added to that policy are able to see the details of that resource.

-

Modify - If a resource has a modify policy, only the users or groups that are added to that policy can change the configuration of that resource.

You can create and apply access policies on both global and component levels.

Global Access Policies

Global access policies govern the following system level authorizations:

| Policy | Privilege | Global Menu Selection |

|---|---|---|

| view the UI | Allow users to view the UI | N/A |

| access the controller | Allows users to view/modify the controller including Reporting Tasks, Controller Services, and Nodes in the Cluster | Controller Settings |

| query provenance | Allows users to submit a Provenance Search and request Event Lineage | Data Provenance |

| access restricted components | Allows users to create/modify restricted components assuming otherwise sufficient permissions | N/A |

| access all policies | Allows users to view/modify the policies for all components | Policies |

| access users/user groups | Allows users to view/modify the users and user groups | Users |

| retrieve site-to-site details | Allows other NiFi instances to retrieve Site-To-Site details | N/A |

| view system diagnostics | Allows users to view System Diagnostics | Summary |

| proxy user requests | Allows proxy machines to send requests on the behalf of others | N/A |

| access counters | Allows users to view/modify Counters | Counters |

Component Level Access Policies

Component level access policies govern the following component level authorizations:

| Policy | Privilege |

|---|---|

| view the component | Allows users to view component configuration details |

| modify the component | Allows users to modify component configuration details |

| view the data | Allows user to view metadata and content for this component through provenance data and flowfile queues in outbound connections |

| modify the data | Allows user to empty flowfile queues in outbound connections and submit replays |

| view the policies | Allows users to view the list of users who can view/modify a component |

| modify the policies | Allows users to modify the list of users who can view/modify a component |

| retrieve data via site-to-site | Allows a port to receive data from NiFi instances |

| send data via site-to-site | Allows a port to send data from NiFi instances |

IMPORTANT

You can apply access policies to all component types except connections. Connection authorizations are inferred by the individual access policies on the source and destination components of the connection, as well as the access policy of the process group containing the components. This is discussed in more detail in the Creating a Connection and Editing a Connection examples below.

Access Policy Inheritance

An administrator does not need to manually create policies for every component in the dataflow. To reduce the amount of time admins spend on authorization management, policies are inherited from parent resource to child resource. For example, if a user is given access to view and modify a process group, that user can also view and modify the components in the process group. Policy inheritance enables an administrator to assign policies at one time and have the policies apply throughout the entire dataflow.

You can override an inherited policy (as described in the Moving a Processor example below). Overriding a policy removes the inherited policy, breaking the chain of inheritance from parent to child, and creates a replacement policy to add users as desired. Inherited policies and their users can be restored by deleting the replacement policy.

IMPORTANT

“View the policies” and “modify the policies” component-level access policies are an exception to this inherited behavior. When a user is added to either policy, they are added to the current list of administrators. They do not override higher level administrators. For this reason, only component specific administrators are displayed for the “view the policies” and “modify the policies” access policies.

IMPORTANT

You cannot modify the users/groups on an inherited policy. Users and groups can only be added or removed from a parent policy or an override policy.

Access Policy Configuration Examples

The most effective way to understand how to create and apply access policies is to walk through some common examples. The following scenarios assume User1 is an administrator and User2 is a newly added user that has only been given access to the UI.

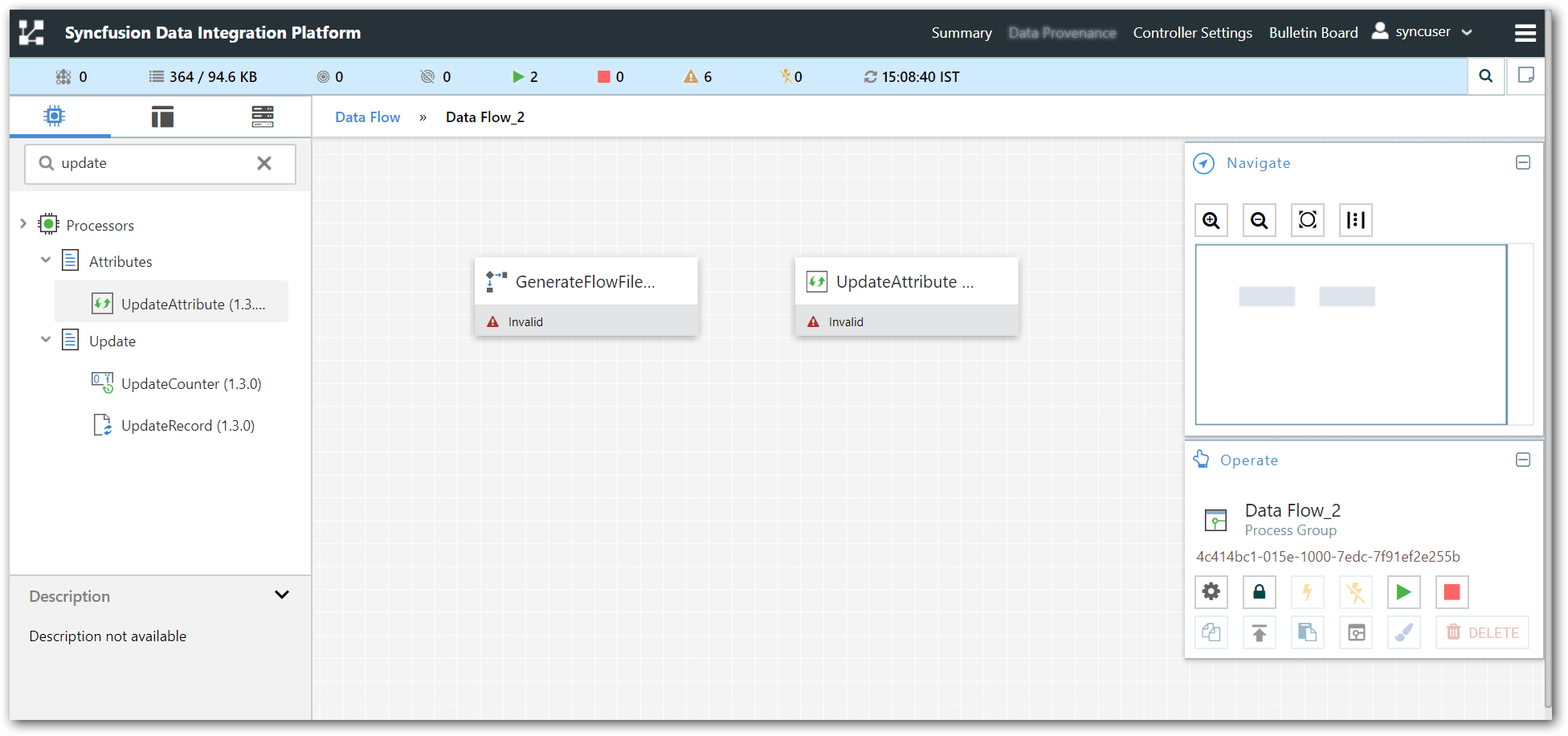

Let’s begin with two processors on the canvas as our starting point: GenerateFlowFile and UpdateAttribute.

User1 can add components to the dataflow and is able to move, edit and connect all processors. The details and properties of the root process group and processors are visible to User1.

User1 wants to maintain their current privileges to the dataflow and its components.

User2 is unable to add components to the dataflow or move, edit, or connect components. The details and properties of the root process group and processors are hidden from User2.

Moving a Processor

To allow User2 to move the GenerateFlowFile processor in the dataflow and only that processor, User1 performs the following steps:

-

Select the GenerateFlowFile processor so that it is highlighted.

-

Select the Access Policies icon (

) from the Operate palette and the Access Policies dialog opens.

) from the Operate palette and the Access Policies dialog opens. -

Select “modify the component” from the policy drop-down. The “modify the component” policy that currently exists on the processor (child) is the “modify the component” policy inherited from the root process group (parent) on which User1 has privileges.

-

Select the Override link in the policy inheritance message. When creating the replacement policy, you are given a choice to override with a copy of the inherited policy or an empty policy.

Select the Override button to create a copy.

- On the replacement policy that is created, select the Add User icon (

). Find or enter User2 in the User Identity field and select OK.

). Find or enter User2 in the User Identity field and select OK.

With these changes, User1 maintains the ability to move both processors on the canvas. User2 can now move the GenerateFlowFile processor but cannot move the UpdateAttribute processor.

Editing a Processor

In the “Moving a Processor” example above, User2 was added to the “modify the component” policy for GenerateFlowFile. Without the ability to view the processor properties, User2 is unable to modify the processor’s configuration. In order to edit a component, a user must be on both the “view the component” and “modify the component” policies. To implement this, User1 performs the following steps:

-

Select the GenerateFlowFile processor.

-

Select the Access Policies icon (

) from the Operate palette and the Access Policies dialog opens.

) from the Operate palette and the Access Policies dialog opens. -

Select “view the component” from the policy drop-down. The view the component” policy that currently exists on the processor (child) is the “view the component” policy inherited from the root process group (parent) on which User1 has privileges.

-

Select the Override link in the policy inheritance message, keep the default of Copy policy and select the Override button.

-

On the override policy that is created, select the Add User icon (

). Find or enter User2 in the User Identity field and select OK.

). Find or enter User2 in the User Identity field and select OK.

With these changes, User1 maintains the ability to view and edit the processors on the canvas. User2 can now view and edit the GenerateFlowFile processor.

Creating a Connection

With the access policies configured as discussed in the previous two examples, User1 is able to connect GenerateFlowFile to LogAttribute:

User2 cannot make the connection:

This is because:

-

User2 does not have modify access on the process group.

-

Even though User2 has view and modify access to the source component (GenerateFlowFile), User2 does not have an access policy on the destination component (UpdateAttribute).

To allow User2 to connect GenerateFlowFile to UpdateAttribute, as User1:

-

Select the root process group. The Operate palette is updated with details for the root process group.

-

Select the Access Policies icon (

) from the Operate palette and the Access Policies dialog opens.

) from the Operate palette and the Access Policies dialog opens. -

Select “modify the component” from the policy drop-down.

-

Select the Add User icon (

). Find or enter User2 and select OK.

). Find or enter User2 and select OK.

By adding User2 to the “modify the component” policy on the process group, User2 is added to the “modify the component” policy on the LogAttribute processor by policy inheritance. To confirm this, highlight the LogAttribute processor and select the Access Policies icon ( ) from the Operate palette:

) from the Operate palette:

With these changes, User2 can now connect the GenerateFlowFile processor to the UpdateAttribute processor.

Editing a Connection

Assume User1 or User2 adds a ReplaceText processor to the root process group:

User1 can select and change the existing connection (between GenerateFlowFile to LogAttribute) to now connect GenerateFlowFile to ReplaceText:

User 2 is unable to perform this action.

To allow User2 to connect GenerateFlowFile to ReplaceText, as User1:

-

Select the root process group. The Operate palette is updated with details for the root process group.

-

Select the Access Policies icon (

).

). -

Select “view the component” from the policy drop-down.

-

Select the Add User icon (

). Find or enter User2 and select OK.

). Find or enter User2 and select OK.

Being added to both the view and modify policies for the process group, User2 can now connect the GenerateFlowFile processor to the ReplaceText processor.

Encryption Configuration

This section provides an overview of the capabilities of NiFi to encrypt and decrypt data.

The EncryptContent processor allows for the encryption and decryption of data, both internal to NiFi and integrated with external systems, such as openssl and other data sources and consumers.

Key Derivation Functions

Key Derivation Functions (KDF) are mechanisms by which human-readable information, usually a password or other secret information, is translated into a cryptographic key suitable for data protection. For further information, read the Wikipedia entry on Key Derivation Functions. Currently, KDFs are ingested by CipherProvider implementations and return a fully-initialized Cipher object to be used for encryption or decryption. Due to the use of a CipherProviderFactory, the KDFs are not customizable at this time. Future enhancements will include the ability to provide custom cost parameters to the KDF at initialization time. As a work-around, CipherProvider instances can be initialized with custom cost parameters in the constructor but this is not currently supported by the CipherProviderFactory. Here are the KDFs currently supported by NiFi (primarily in the EncryptContent processor for password-based encryption (PBE)) and relevant notes:

-

NiFi Legacy KDF

-

The original KDF used by NiFi for internal key derivation for PBE, this is 1000 iterations of the MD5 digest over the concatenation of the password and 8 or 16 bytes of random salt (the salt length depends on the selected cipher block size).

-

This KDF is deprecated as of NiFi 0.5.0 and should only be used for backwards compatibility to decrypt data that was previously encrypted by a legacy version of NiFi.

-

-

OpenSSL PKCS#5 v1.5 EVP_BytesToKey

-

This KDF was added in v0.4.0.

-

This KDF is provided for compatibility with data encrypted using OpenSSL’s default PBE, known as

EVP\_BytesToKey. This is a single iteration of MD5 over the concatenation of the password and 8 bytes of random ASCII salt. OpenSSL recommends usingPBKDF2for key derivation but does not expose the library method necessary to the command-line tool, so this KDF is still the de facto default for command-line encryption.

-

-

Bcrypt

-

This KDF was added in v0.5.0.

-

Bcrypt is an adaptive function based on the Blowfish cipher. This KDF is strongly recommended as it automatically incorporates a random 16 byte salt, configurable cost parameter (or “work factor”), and is hardened against brute-force attacks using GPGPU (which share memory between cores) by requiring access to “large” blocks of memory during the key derivation. It is less resistant to FPGA brute-force attacks where the gate arrays have access to individual embedded RAM blocks.

-

Because the length of a Bcrypt-derived key is always 184 bits, the complete output is then fed to a

SHA-512digest and truncated to the desired key length. This provides the benefit of the avalanche effect on the formatted input. -

The recommended minimum work factor is 12 (212 key derivation rounds) (as of 2/1/2016 on commodity hardware) and should be increased to the threshold at which legitimate systems will encounter detrimental delays (see schedule below or use

BcryptCipherProviderGroovyTest#testDefaultConstructorShouldProvideStrongWorkFactor()to calculate safe minimums). -

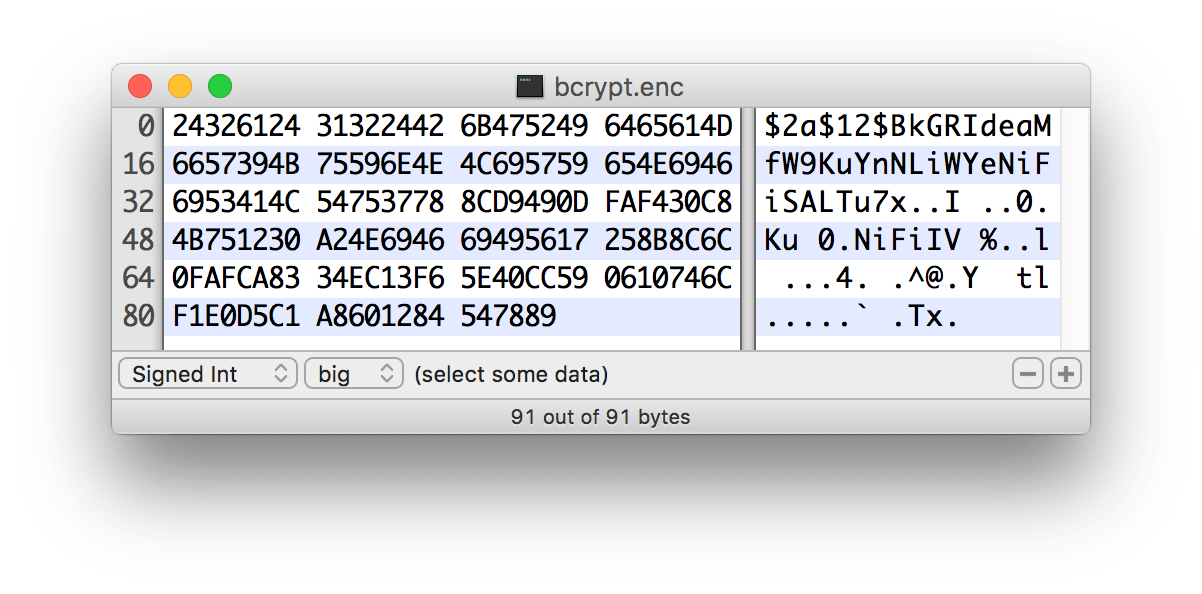

The salt format is

$2a$10$ABCDEFGHIJKLMNOPQRSTUV. The salt is delimited by $ and the three sections are as follows:-

2a- the version of the format. NiFi currently uses2afor all salts generated internally. -

10 - the work factor. This is actually the log2 value, so the total iteration count would be 210 in this case.

-

ABCDEFGHIJKLMNOPQRSTUV- the 22 character, Base64-encoded, unpadded, raw salt value. This decodes to a 16 byte salt used in the key derivation.

-

-

-

Scrypt

-

This KDF was added in v0.5.0.

-

Scrypt is an adaptive function designed in response to

bcrypt. This KDF is recommended as it requires relatively large amounts of memory for each derivation, making it resistant to hardware brute-force attacks. -

The recommended minimum cost is N=214, r=8, p=1 (as of 2/1/2016 on commodity hardware) and should be increased to the threshold at which legitimate systems will encounter detrimental delays (see schedule below or use

ScryptCipherProviderGroovyTest#testDefaultConstructorShouldProvideStrongParameters()to calculate safe minimums). -

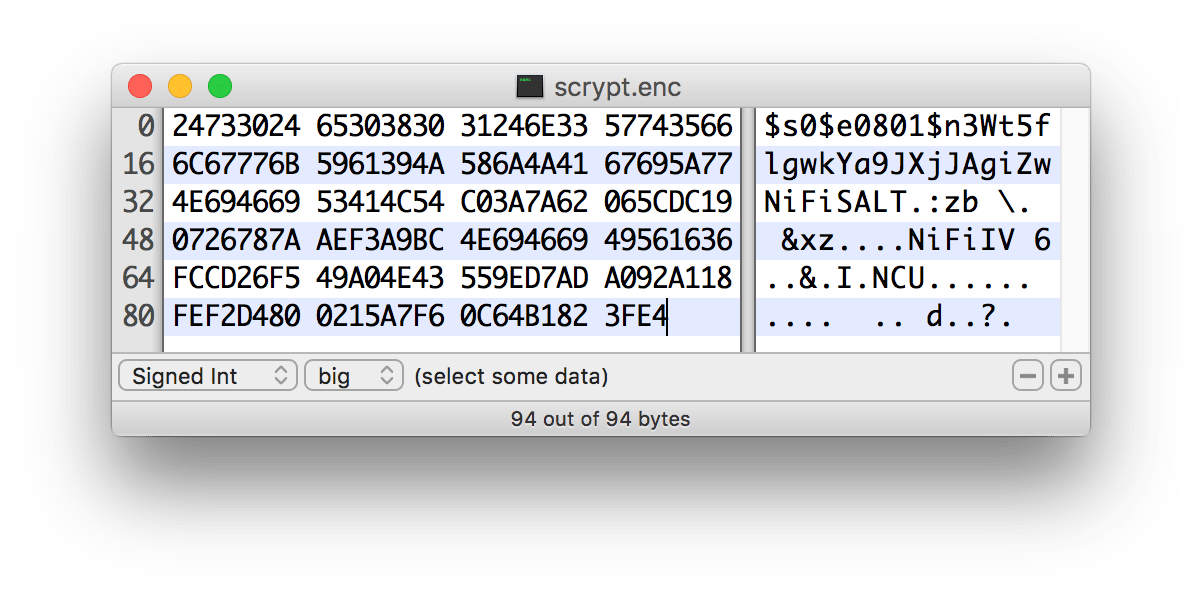

The salt format is

$s0$e0101$ABCDEFGHIJKLMNOPQRSTUV. The salt is delimited by $ and the three sections are as follows:-

s0- the version of the format. NiFi currently uses s0 for all salts generated internally. -

e0101- the cost parameters. This is actually a hexadecimal encoding of N, r, p using shifts. This can be formed/parsed usingScrypt#encodeParams()andScrypt#parseParameters().- Some external libraries encode N, r, and p separately in the form $400$1$1$. A utility method is available at ScryptCipherProvider#translateSalt() which will convert the external form to the internal form.

-

ABCDEFGHIJKLMNOPQRSTUV- the 12-44 character, Base64-encoded, unpadded, raw salt value. This decodes to a 8-32 byte salt used in the key derivation.

-

-

-

PBKDF2

-

This KDF was added in v0.5.0.

-

Password-Based Key Derivation Function 2 is an adaptive derivation function which uses an internal pseudorandom function (PRF) and iterates it many times over a password and salt (at least 16 bytes).

-

The PRF is recommended to be

HMAC/SHA-256orHMAC/SHA-512. The use of an HMAC cryptographic hash function mitigates a length extension attack. -

The recommended minimum number of iterations is 160,000 (as of 2/1/2016 on commodity hardware). This number should be doubled every two years (see schedule below or use

PBKDF2CipherProviderGroovyTest#testDefaultConstructorShouldProvideStrongIterationCount()to calculate safe minimums). -

This KDF is not memory-hard (can be parallelized massively with commodity hardware) but is still recommended as sufficient by NIST SP 800-132 and many cryptographers (when used with a proper iteration count and HMAC cryptographic hash function).

-

-

None

-

This KDF was added in v0.5.0.

-

This KDF performs no operation on the input and is a marker to indicate the raw key is provided to the cipher. The key must be provided in hexadecimal encoding and be of a valid length for the associated cipher/algorithm.

-

Additional Resources

- Explanation of optimal scrypt cost parameters and relationships

- NIST Special Publication 800-132

- OWASP Password Storage Work Factor Calculations

- PBKDF2 rounds calculations

- Scrypt as KDF vs password storage vulnerabilities

- Scrypt vs. Bcrypt (as of 2010)

- Bcrypt vs PBKDF2

- Choosing a work factor for Bcrypt

- Spring Security Bcrypt

- OpenSSL KDF flaws description

Salt and IV Encoding

Initially, the EncryptContent processor had a single method of deriving the encryption key from a user-provided password. This is now referred to as NiFiLegacy mode, effectively MD5 digest, 1000 iterations. In v0.4.0, another method of deriving the key, OpenSSL PKCS#5 v1.5 EVP_BytesToKey was added for compatibility with content encrypted outside of NiFi using the openssl command-line tool. Both of these Key Derivation Functions (KDF) had hard-coded digest functions and iteration counts, and the salt format was also hard-coded. With v0.5.0, additional KDFs are introduced with variable iteration counts, work factors, and salt formats. In addition, raw keyed encryption was also introduced. This required the capacity to encode arbitrary salts and Initialization Vectors (IV) into the cipher stream in order to be recovered by NiFi or a follow-on system to decrypt these messages.

For the existing KDFs, the salt format has not changed.

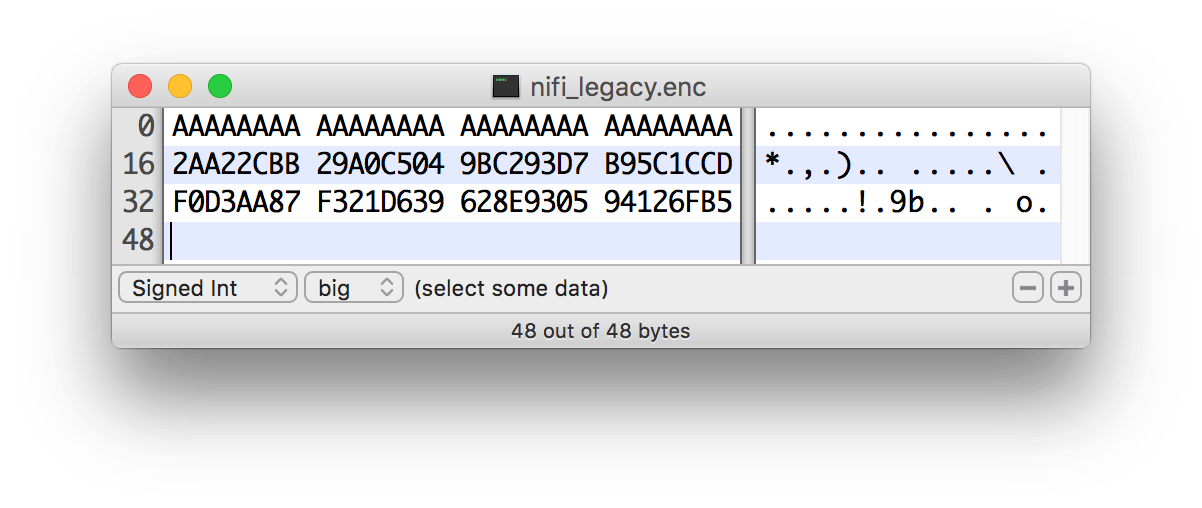

NiFi Legacy

The first 8 or 16 bytes of the input are the salt. The salt length is determined based on the selected algorithm’s cipher block length. If the cipher block size cannot be determined (such as with a stream cipher like RC4), the default value of 8 bytes is used. On decryption, the salt is read in and combined with the password to derive the encryption key and IV.

OpenSSL PKCS#5 v1.5 EVP_BytesToKey

OpenSSL allows for salted or unsalted key derivation. *Unsalted key derivation is a security risk and is not recommended. *If a salt is present, the first 8 bytes of the input are the ASCII string "Salted" (0x53 61 6C 74 65 64 5F 5F) and the next 8 bytes are the ASCII-encoded salt. On decryption, the salt is read in and combined with the password to derive the encryption key and IV. If there is no salt header, the entire input is considered to be the cipher text.

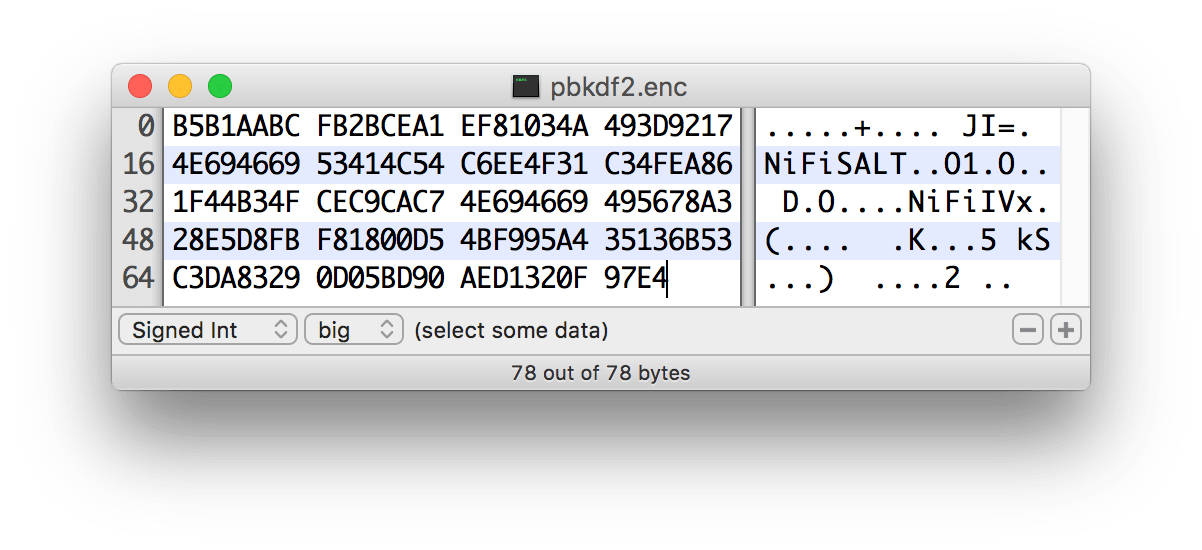

For new KDFs, each of which allow for non-deterministic IVs, the IV must be stored alongside the cipher text. This is not a vulnerability, as the IV is not required to be secret, but simply to be unique for messages encrypted using the same key to reduce the success of cryptographic attacks. For these KDFs, the output consists of the salt, followed by the salt delimiter, UTF-8 string "NiFiSALT" (0x4E 69 46 69 53 41 4C 54) and then the IV, followed by the IV delimiter, UTF-8 string "NiFiIV" (0x4E 69 46 69 49 56), followed by the cipher text.

Bcrypt, Scrypt, PBKDF2

Java Cryptography Extension (JCE) Limited Strength Jurisdiction Policies

Because of US export regulations, default JVMs have limits imposed on the strength of cryptographic operations available to them. For example, AES operations are limited to 128 bit keys by default. While AES-128 is cryptographically safe, this can have unintended consequences, specifically on Password-based Encryption (PBE).

PBE is the process of deriving a cryptographic key for encryption or decryption from user-provided secret material, usually a password. Rather than a human remembering a (random-appearing) 32 or 64 character hexadecimal string, a password or passphrase is used.

A number of PBE algorithms provided by NiFi impose strict limits on the length of the password due to the underlying key length checks. Below is a table listing the maximum password length on a JVM with limited cryptographic strength.

Table 1. Maximum Password Length on Limited Cryptographic Strength JVM

| Algorithm | Max Password Length |

| PBEWITHMD5AND128BITAES-CBC-OPENSSL | 16 |

| PBEWITHMD5AND192BITAES-CBC-OPENSSL | 16 |

| PBEWITHMD5AND256BITAES-CBC-OPENSSL | 16 |

| PBEWITHMD5ANDDES | 16 |

| PBEWITHMD5ANDRC2 | 16 |

| PBEWITHSHA1ANDRC2 | 16 |

| PBEWITHSHA1ANDDES | 16 |

| PBEWITHSHAAND128BITAES-CBC-BC | 7 |

| PBEWITHSHAAND192BITAES-CBC-BC | 7 |

| PBEWITHSHAAND256BITAES-CBC-BC | 7 |

| PBEWITHSHAAND40BITRC2-CBC | 7 |

| PBEWITHSHAAND128BITRC2-CBC | 7 |

| PBEWITHSHAAND40BITRC4 | 7 |

| PBEWITHSHAAND128BITRC4 | 7 |

| PBEWITHSHA256AND128BITAES-CBC-BC | 7 |

| PBEWITHSHA256AND192BITAES-CBC-BC | 7 |

| PBEWITHSHA256AND256BITAES-CBC-BC | 7 |

| PBEWITHSHAAND2-KEYTRIPLEDES-CBC | 7 |

| PBEWITHSHAAND3-KEYTRIPLEDES-CBC | 7 |

| PBEWITHSHAANDTWOFISH-CBC | 7 |

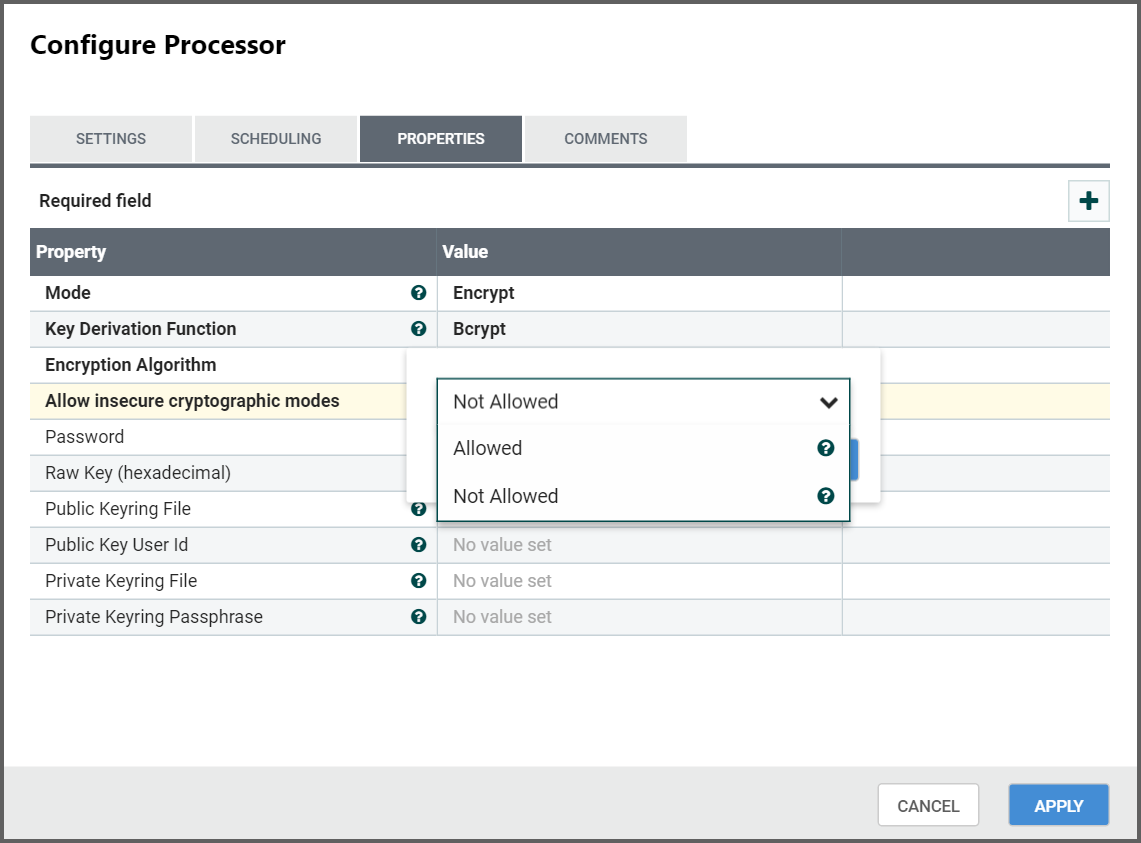

Allow Insecure Cryptographic Modes

By default, the Allow Insecure Cryptographic Modes property in EncryptContent processor settings is set to not-allowed. This means that if a password of fewer than 10 characters is provided, a validation error will occur. 10 characters is a conservative estimate and does not take into consideration full entropy calculations, patterns, etc.

On a JVM with limited strength cryptography, some PBE algorithms limit the maximum password length to 7, and in this case it will not be possible to provide a “safe” password. It is recommended to install the JCE Unlimited Strength Jurisdiction Policy files for the JVM to mitigate this issue.

If on a system where the unlimited strength policies cannot be installed, it is recommended to switch to an algorithm that supports longer passwords (see table above).

IMPORTANT

Allowing Weak Crypto

If it is not possible to install the unlimited strength jurisdiction policies, theAllow Weak Cryptosetting can be changed toallowed, but this is not recommended. Changing this setting explicitly acknowledges the inherent risk in using weak cryptographic configurations.

It is preferable to request upstream/downstream systems to switch to keyed encryption or use a “strong” Key Derivation Function (KDF) supported by NiFi .

Encrypted Passwords in Configuration Files

In order to facilitate the secure setup of NiFi, you can use the encrypt-config command line utility to encrypt raw configuration values that NiFi decrypts in memory on startup. This extensible protection scheme transparently allows NiFi to use raw values in operation, while protecting them at rest. In the future, hardware security modules (HSM) and external secure storage mechanisms will be integrated, but for now, an AES encryption provider is the default implementation.

This is a change in behavior; prior to 1.0, all configuration values were stored in plaintext on the file system. POSIX file permissions were recommended to limit unauthorized access to these files

If no administrator action is taken, the configuration values remain unencrypted.

Encrypt-Config Tool

The encrypt-config command line tool (invoked as ./bin/encrypt-config.sh or bin\encrypt-config.bat) reads from a nifi.properties file with plaintext sensitive configuration values, prompts for a master password or raw hexadecimal key, and encrypts each value. It replaces the plain values with the protected value in the same file, or writes to a new nifi.properties file if specified.

The default encryption algorithm utilized is AES/GCM 128/256-bit. 128-bit is used if the JCE Unlimited Strength Cryptographic Jurisdiction Policy files are not installed, and 256-bit is used if they are installed.

You can use the following command line options with the encrypt-config tool:

-

-A,--newFlowAlgorithm <arg>The algorithm to use to encrypt the sensitive processor properties in flow.xml.gz -

-b,--bootstrapConf <arg>The bootstrap.conf file to persist master key -

-e,--oldKey <arg>The old raw hexadecimal key to use during key migration -

-f,--flowXml <arg>The flow.xml.gz file currently protected with old password (will be overwritten) -

-g,--outputFlowXml <arg>The destination flow.xml.gz file containing protected config values (will not modify input flow.xml.gz) -

-h,--helpPrints this usage message -

-i,--outputLoginIdentityProviders <arg>The destination login-identity-providers.xml file containing protected config values (will not modify input login-identity-providers.xml) -

-k,--key <arg>The raw hexadecimal key to use to encrypt the sensitive properties -

-l,--loginIdentityProviders <arg>The login-identity-providers.xml file containing unprotected config values (will be overwritten) -

-m,--migrateIf provided, the nifi.properties and/or login-identity-providers.xml sensitive properties will be re-encrypted with a new key -

-n,--niFiProperties <arg>The nifi.properties file containing unprotected config values (will be overwritten) -

-o,--outputNiFiProperties <arg>The destination nifi.properties file containing protected config values (will not modify input nifi.properties) -

-p,--password <arg>The password from which to derive the key to use to encrypt the sensitive properties -

-P,--newFlowProvider <arg>The security provider to use to encrypt the sensitive processor properties in flow.xml.gz -

-r,--useRawKeyIf provided, the secure console will prompt for the raw key value in hexadecimal form -

-s,--propsKey <arg>The password or key to use to encrypt the sensitive processor properties in flow.xml.gz -

-v,--verboseSets verbose mode (default false) -

-w,--oldPassword <arg>The old password from which to derive the key during migration -

-x,--encryptFlowXmlOnlyIf provided, the properties in flow.xml.gz will be re-encrypted with a new key but the nifi.properties and/or login-identity-providers.xml files will not be modified

As an example of how the tool works, assume that you have installed the tool on a machine supporting 256-bit encryption and with the following existing values in the nifi.properties file:

# security properties #

nifi.sensitive.props.key=thisIsABadSensitiveKeyPassword

nifi.sensitive.props.algorithm=PBEWITHMD5AND256BITAES-CBC-OPENSSL

nifi.sensitive.props.provider=BC

nifi.sensitive.props.additional.keys=

nifi.security.keystore=/path/to/keystore.jks

nifi.security.keystoreType=JKS

nifi.security.keystorePasswd=thisIsABadKeystorePassword

nifi.security.keyPasswd=thisIsABadKeyPassword

nifi.security.truststore=

nifi.security.truststoreType=

nifi.security.truststorePasswd=

Enter the following arguments when using the tool:

encrypt-config.sh

-b bootstrap.conf

-k 0123456789ABCDEFFEDCBA98765432100123456789ABCDEFFEDCBA9876543210

-n nifi.properties

As a result, the nifi.properties file is overwritten with protected properties and sibling encryption identifiers (aes/gcm/256, the currently supported algorithm):

# security properties #

nifi.sensitive.props.key=n2z+tTTbHuZ4V4V2||uWhdasyDXD4ZG2lMAes/vqh6u4vaz4xgL4aEbF4Y/dXevqk3ulRcOwf1vc4RDQ==

nifi.sensitive.props.key.protected=aes/gcm/256

nifi.sensitive.props.algorithm=PBEWITHMD5AND256BITAES-CBC-OPENSSL

nifi.sensitive.props.provider=BC

nifi.sensitive.props.additional.keys=

nifi.security.keystore=/path/to/keystore.jks

nifi.security.keystoreType=JKS

nifi.security.keystorePasswd=oBjT92hIGRElIGOh||MZ6uYuWNBrOA6usq/Jt3DaD2e4otNirZDytac/w/KFe0HOkrJR03vcbo

nifi.security.keystorePasswd.protected=aes/gcm/256

nifi.security.keyPasswd=ac/BaE35SL/esLiJ||+ULRvRLYdIDA2VqpE0eQXDEMjaLBMG2kbKOdOwBk/hGebDKlVg==

nifi.security.keyPasswd.protected=aes/gcm/256

nifi.security.truststore=

nifi.security.truststoreType=

nifi.security.truststorePasswd=

Additionally, the bootstrap.conf file is updated with the encryption key as follows:

# Master key in hexadecimal format for encrypted sensitive configuration values

nifi.bootstrap.sensitive.key=0123456789ABCDEFFEDCBA98765432100123456789ABCDEFFEDCBA9876543210

Sensitive configuration values are encrypted by the tool by default, however you can encrypt any additional properties, if desired. To encrypt additional properties, specify them as comma-separated values in the nifi.sensitive.props.additional.keys property.

If the nifi.properties file already has valid protected values, those property values are not modified by the tool.

When applied to login-identity-providers.xml, the property elements are updated with an encryption attribute:

<!-- LDAP Provider -->

<provider>

<identifier>ldap-provider</identifier>

<class>org.apache.nifi.ldap.LdapProvider</class>

<property name="Authentication Strategy">START_TLS</property>

<property name="Manager DN">some user</property>

<property name="Manager Password" encryption="aes/gcm/128">q4r7WIgN0MaxdAKM||SGgdCTPGSFEcuH4RraMYEdeyVbOx93abdWTVSWvh1w+klA</property>

<property name="TLS - Keystore"></property>

<property name="TLS - Keystore Password" encryption="aes/gcm/128">Uah59TWX+Ru5GY5p||B44RT/LJtC08QWA5ehQf01JxIpf0qSJUzug25UwkF5a50g</property>

<property name="TLS - Keystore Type"></property>

...

</provider> In order to change the key used to encrypt the sensitive values, indicate **migration mode** using the `-m` or `--migrate` flag, provide the new key or password using the `-k` or `-p` flags as usual, and provide the existing key or password using `-e` or `-w` respectively. This will allow the toolkit to decrypt the existing values and re-encrypt them, and update `bootstrap.conf` with the new key. Only one of the key or password needs to be specified for each phase (old vs. new), and any combination is sufficient:

-

old key → new key

-

old key → new password

-

old password → new key

-

old password → new password

Sensitive Property Key Migration

In order to change the key used to encrypt the sensitive values, indicate migration mode using the -m or –migrate flag, provide the new key or password using the -k or -p flags as usual, and provide the existing key or password using -e or -w respectively. This will allow the toolkit to decrypt the existing values and re-encrypt them, and update bootstrap.conf with the new key. Only one of the key or password needs to be specified for each phase (old vs. new), and any combination is sufficient:

-

old key → new key

-

old key → new password

-

old password → new key

-

old password → new password

Existing Flow Migration

This tool can also be used to change the value of nifi.sensitive.props.key for an existing flow. The tool will read the existing flow.xml.gz and decrypt any sensitive component properties using the original key, then re-encrypt the sensitive properties with the new key, and write out a new version of the flow.xml.gz, or overwrite the existing one.

The current sensitive properties key is not provided as a command-line argument, as it is read directly from nifi.properties. As this file is a required parameter, the -x/–encryptFlowXmlOnly flags tell the tool not to attempt to encrypt the properties in nifi.properties, but rather to only update the nifi.sensitive.props.key value with the new key. The exception to this is if the nifi.properties is already encrypted, the new sensitive property key will also be encrypted before being written to nifi.properties.

The following command would migrate the sensitive properties key in place, meaning it would overwrite the existing flow.xml.gz and nifi.properties:

./encrypt-config.sh -f /path/to/flow.xml.gz -n ./path/to/nifi.properties -s newpassword -x

The following command would migrate the sensitive properties key and write out a separate flow.xml.gz and nifi.properties:

./encrypt-config.sh -f ./path/to/src/flow.xml.gz -g /path/to/dest/flow.xml.gz -n /path/to/src/nifi.properties -o /path/to/dest/nifi.properties -s newpassword -x

Password Key Derivation

Instead of providing a 32 or 64 character raw hexadecimal key, you can provide a password from which the key will be derived. As of 1.0.0, the password must be at least 12 characters, and the key will be derived using SCrypt with the parameters:

-

pw- the password bytes inUTF-8 -

salt- the fixed salt value (NIFI_SCRYPT_SALT) bytes inUTF-8 -

N- 216 -

r- 8 -

p- 1 -

dkLen- determined by the JCE policies available

As of August 2016, these values are determined to be strong for this threat model but may change in future versions.

IMPORTANT

While fixed salts are counter to best practices, a static salt is necessary for deterministic key derivation without additional storage of the salt value.

Secure Prompt

If you prefer not to provide the password or raw key in the command-line invocation of the tool, leaving these arguments absent will prompt a secure console read of the password (by default) or raw key (if the -r flag is provided at invocation).

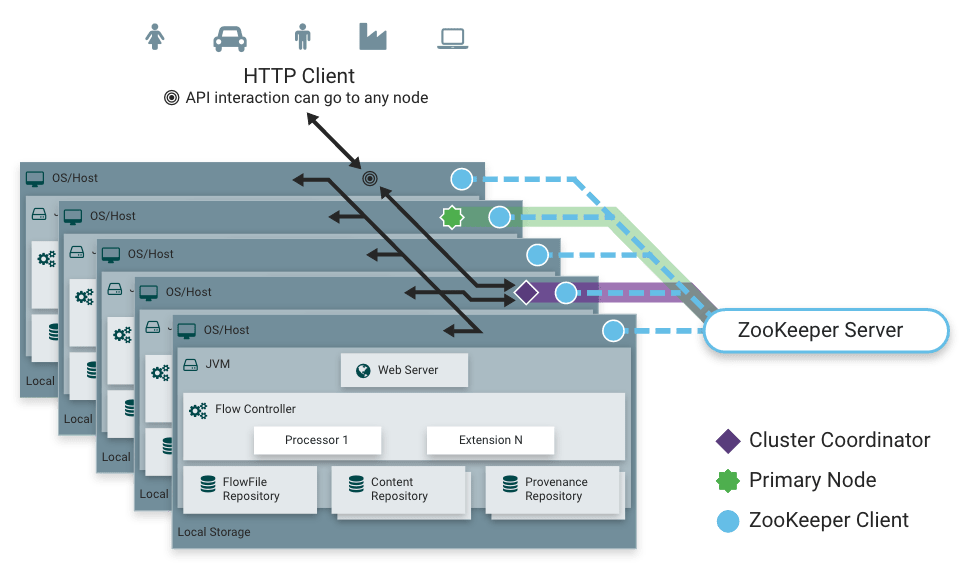

Clustering Configuration

This section provides a quick overview of NiFi Clustering and instructions on how to set up a basic cluster. In the future, we hope to provide supplemental documentation that covers the NiFi Cluster Architecture in depth.

( )

)

NiFi employs a Zero-Master Clustering paradigm. Each node in the cluster performs the same tasks on the data, but each operates on a different set of data. One of the nodes is automatically elected (via ZooKeeper) as the Cluster Coordinator. All nodes in the cluster will then send heartbeat/status information to this node, and this node is responsible for disconnecting nodes that do not report any heartbeat status for some amount of time. Additionally, when a new node elects to join the cluster, the new node must first connect to the currently-elected Cluster Coordinator in order to obtain the most up-to-date flow. If the Cluster Coordinator determines that the node is allowed to join (based on its configured Firewall file), the current flow is provided to that node, and that node is able to join the cluster, assuming that the node’s copy of the flow matches the copy provided by the Cluster Coordinator. If the node’s version of the flow configuration differs from that of the Cluster Coordinator’s, the node will not join the cluster.

Why Cluster?

NiFi Administrators or Dataflow Managers (DFMs) may find that using one instance of NiFi on a single server is not enough to process the amount of data they have. So, one solution is to run the same dataflow on multiple NiFi servers. However, this creates a management problem, because each time DFMs want to change or update the dataflow, they must make those changes on each server and then monitor each server individually. By clustering the NiFi servers, it’s possible to have that increased processing capability along with a single interface through which to make dataflow changes and monitor the dataflow. Clustering allows the DFM to make each change only once, and that change is then replicated to all the nodes of the cluster. Through the single interface, the DFM may also monitor the health and status of all the nodes.

NiFi Clustering is unique and has its own terminology. It’s important to understand the following terms before setting up a cluster.

Terminology

NiFi Cluster Manager: A NiFi Cluster Cluster Coordinator is the node in a NiFi cluster that is responsible for carrying out tasks to manage which nodes are allowed in the cluster and providing the most up-to-date flow to newly joining nodes. When a DataFlow Manager manages a dataflow in a cluster, they are able to do so through the User Interface of any node in the cluster. Any change made is then replicated to all nodes in the cluster.

Nodes: Each cluster is made up of the NCM and one or more nodes. The nodes do the actual data processing.

Primary Node: Every cluster has one Primary Node. On this node, it is possible to run “Isolated Processors” (see below). ZooKeeper is used to automatically elect a Primary Node. If that node disconnects from the cluster for any reason, a new Primary Node will automatically be elected. Users can determine which node is currently elected as the Primary Node by looking at the Cluster Management page of the User Interface.

Isolated Processors: In a NiFi cluster, the same dataflow runs on all the nodes. As a result, every component in the flow runs on every node. However, there may be cases when the DFM would not want every processor to run on every node. The most common case is when using a processor that communicates with an external service using a protocol that does not scale well. For example, the GetSFTP processor pulls from a remote directory, and if the GetSFTP Processor runs on every node in the cluster tries simultaneously to pull from the same remote directory, there could be race conditions. Therefore, the DFM could configure the GetSFTP on the Primary Node to run in isolation, meaning that it only runs on that node. It could pull in data and - with the proper dataflow configuration - load-balance it across the rest of the nodes in the cluster. Note that while this feature exists, it is also very common to simply use a standalone NiFi instance to pull data and feed it to the cluster. It just depends on the resources available and how the Administrator decides to configure the cluster.

Heartbeats: The nodes communicate their health and status to the currently elected Cluster Coordinator via “heartbeats”, which let the Coordinator know they are still connected to the cluster and working properly. By default, the nodes emit heartbeats every 5 seconds, and if the Cluster Coordinator does not receive a heartbeat from a node within 40 seconds, it disconnects the node due to “lack of heartbeat”. (The 5-second setting is configurable in the nifi.properties file. See the System Properties section of this document for more information.) The reason that the Cluster Coordinator disconnects the node is because the Coordinator needs to ensure that every node in the cluster is in sync, and if a node is not heard from regularly, the Coordinator cannot be sure it is still in sync with the rest of the cluster. If, after 40 seconds, the node does send a new heartbeat, the Coordinator will automatically request that the node re-join the cluster, to include the re-validation of the node’s flow. Both the disconnection due to lack of heartbeat and the reconnection once a heartbeat is received are reported to the DFM in the User Interface.

Communication within the Cluster

As noted, the nodes communicate with the Cluster Coordinator via heartbeats. When a Cluster Coordinator is elected, it updates a well-known ZNode in ZooKeeper with its connection information so that nodes understand where to send heartbeats. If one of the nodes goes down, the other nodes in the cluster will not automatically pick up the load of the missing node. It is possible for the DFM to configure the dataflow for failover contingencies; however, this is dependent on the dataflow design and does not happen automatically.

When the DFM makes changes to the dataflow, the node that receives the request to change the flow communicates those changes to all nodes and waits for each node to respond, indicating that it has made the change on its local flow.

Dealing with Disconnected Nodes